...making Linux just a little more fun!

News Bytes

By Howard Dyckoff


Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to [email protected].

News in General

A higher standard [of security]

At the RSA2006 Security Conference, both Red Hat's Enterprise Linux 4
and Novell's SUSE LINUX Enterprise Server 9 [on IBM eServers] were cited
for achieving the Controlled Access Protection Profile under the Common
Criteria for Information Technology Security Evaluation, known as
CAPP/EAL4+. Sun revealed plans to apply for CAPP/EAL4 as well as for the
Labeled Security Protection Profile (LSPP).

Rather than release another Trusted Solaris compilation, Sun will
build on its Solaris 10 OS with the release of Solaris Trusted Extensions
[a beta is due in April or May], which enhance the security features of
Solaris 10 to EAL4 levels.

Scott McNealy to HP's Mark Hurd: Merge HP-UX with Solaris 10?

In a March 1st memo to HP CEO Mark Hurd [and sent by Sun to the media

and posted on its web site], Sun CEO Scott McNealy said HP should commit to

converging HP-UX with Sun's Solaris 10 Unix. This would allow HP customers

to use X86 servers with Intel's Xeon and AMD's Opteron processors.

HP currently uses Linux and Windows on its industry-standard X86 servers

but has only committed to supporting HP-UX on the 64-bit Itanium

architecture. McNealy pointed out that HP supports 64-bit Solaris 10 on

its Proliant servers.

McNealy called the move of HP-UX to Intel's Itanium system "...an

expensive and risky transition with an uncertain future."

See the full text at: http://www.sun.com/aboutsun/media/features/converge.html.

DB Wars...

Oracle recently bought its second Open Source DB engine by acquiring
Sleepycat Software and its Berkeley DB, barely 3 months after snapping
up InnoDB, the innovative and highly touted transactional engine in MySQL
5. Broad reaction in the MySQL user community was down, dour, and
damning [see links].

In response, MySQL is closing a deal to purchase Netfrastructure, the
company behind the famed Firebird DB. This means DB guru Jim Starkey,
creator and developer of Interbase and co-creator of Firebird, will become
a MySQL employee. MySQL will continue to support Netfrastructure customers
during a transition period. Prior to this, Oracle held purchase discussions
with MySQL officials [specifics were not released].

The Oracle purchase deftly controls key components of MySQL's offerings and

introduces uncertainty about future offerings from MySQL. The InnoDB

transaction engine was important because it is ACID-compliant. Hiring Jim

Starkey means MySQL will probably create a new transaction engine, perhaps

forking from the existing InnoDB source code.

Rumors abound that Oracle is also considering purchasing JBoss and Zend

Technologies. PHP-developer Zend featured prominently at the Oracle World

mega-conference this fall and Oracle held sessions at the much smaller PHP

Conference, clearly positioning itself as a major DB in the LAMP [or

LA-Or-P] stack. This is consistent with Oracle's pursuit of Linux and LAMP

as a platform independent of its competitors, especially Microsoft.

MySQL announced on Feb. 27 its hiring of Starkey, and also the hiring of

a new chief technology officer, Taneli Otala, the former CTO of SenSage.

Coming Soon -- Oracle rootkits??

"It's not about the infrastructure; it's about the data", says a worried Shlomo Kramer,

CEO of Imperva, a database security company in California, about threats to DBs and data integrity.

Alexander Kornbrust, of Red Database Security GmbH, is developing rootkit-like technology that uses the

DB's system functions to mask and manipulate processes and data changes. Worse still, such DB rootkits

would be OS independent.

Check this link for more details: http://www.eweek.com/article2/0,1895,1914465,00.asp

OpenVZ Project Introduces Virtualization Website

The OpenVZ project now hosts a website to freely distribute its OpenVZ
virtualization software and to provide installation instructions,
additional documentation, and access to different support options,
including online chat. OpenVZ.org also provides a participation platform
for feedback, shared experiences, bug fixes, feature requests and
knowledge-sharing with other users.

OpenVZ is an operating-system-level virtualization technology, built on

Linux, that creates isolated, secure virtual private servers (VPS) on a

single physical server. OpenVZ, supported by SWsoft, is a subset of the

Virtuozzo virtualization software product.

Open-Xchange Offers Free Live-CD

Open-Xchange, Inc., the vendor of open source collaboration software,

made news in several ways this January.

Open-Xchange, Inc. now offers a free, fully functional 'Live-CD' of

Open-Xchange Server 5 that gives users a cost-free way to test all the

attributes of the Open Source alternative to Microsoft Exchange.

Built on KNOPPIX Linux, the Live-CD contains a complete edition of

Open-Xchange Server 5 that boots directly from the CD-ROM, ensuring that

the host computer is not modified in any way. Frank Hoberg, CEO,

Open-Xchange Inc, explained, "This is not a polished, pre-run demo, but the

real live product that will give everyone who uses it a good idea of what

we offer."

The company also announced the hiring of former IDC System Software Vice President

Daniel Kusnetzky as Executive Vice President for Marketing Strategy.

Kusnetzky is a noted expert on the Open Source industry, and has been a

staple as a keynote speaker at industry trade shows. He spent 11 years at

IDC doing research on the worldwide market for operating environments and

virtualization software and, previous to that, 15 years with the Digital

Equipment Corporation.

Finally, Open-Xchange announced that Systems Solutions Inc. of New York
has joined it as a strategic system integration partner. Systems

Solutions helped in developing the SUSE Linux OpenExchange collaboration

platform for the Americas.

Open-Xchange Server 5 was launched in April 2005 as a commercial

product, and supports both Red Hat and SUSE Linux. The GPL version of

Open-Xchange Server is downloaded more than 9,000 times each month. The

Live-CD can be downloaded from www.open-xchange.com/live.

AppArmor Now Open-Source

Novell announced the creation of the AppArmor security project early

this year, a new GPL Open Source project dedicated to advancing Linux

application security. AppArmor is an intrusion-prevention system that

protects Linux and applications from viruses and malware. Novell acquired

the technology in 2005 from Immunix, a leading provider of Linux security

solutions.

AppArmor limits the interactions between applications and users by

watching for possible security violations from a tree of allowed

interactions. Unexpected behaviors are blocked. AppArmor builds its

application profiles by working with a system administrator; another

version includes predefined profiles for applications such as Apache,

MySQL, and Postfix and Sendmail email servers.

Novell AppArmor is already being shipped and deployed on SUSE Linux

10.0, Novell's community Linux distribution and its SLES (SUSE Linux

Enterprise Server) 9 Service Pack 3.

Firefox wins Technical Excellence Award

CNET has awarded Firefox 1.5 its Editor's Choice award, and Firefox has

received two awards from PC Magazine: the Technical Excellence Award for

Software and the Best of 2005 Award. Firefox also garnered international

recognition from UK-based PC Pro and received the publication's Real World

Computing Award, and was chosen as the "Editor's Choice" by German-based PC

Professionell magazine.

And, to accelerate adoption of Firefox, Mozilla has recently unveiled

the second phase of "Firefox Flicks," its community-driven marketing

campaign for the browser. The Firefox Flicks Ad Contest encourages

professionals, students, and aspiring creatives in film and TV production,

Web design, advertising and animation to submit high-quality 30-second ads

about the browser. This new contest builds on the first phase of the

campaign, which encouraged Firefox users worldwide to submit video

testimonials about their experience with Firefox. More information about

Firefox Flicks is available at www.firefoxflicks.com.

Conferences and Events

- All LinuxWorld Expos

- http://www.linuxworldexpo.com/live/12/media/SN787380

- RSA Security Conference -- Feb 12-17, 2006 (just finished)

- TechTarget summary: http://searchsecurity.techtarget.com/general/0,295582,sid14_gci1165948,00.html

- OSBC San Francisco 2006 -- Feb 14-15, 2006 (just finished)

- Presentations [Username: conference / Password: attendee]: http://www.osbc.com/live/13/events/13SFO06A/conference/CC579381

- O'Reilly Emerging Technology Conference

- March 6-9, 2006, San Diego, California

- Semantic Web Technology Conference 2006

- March 6-9, 2006, San Jose, California

- TelecomNEXT 2006

- March 19-23, 2006, Las Vegas, NV

- EclipseCon 2006

- March 20-23, 2006

Santa Clara Convention Center

Santa Clara, California

- Gartner Wireless Mobile Summit

- March 27-29, 2006, Detroit, Michigan

- LinuxWorld Conference & Expo

- April 3-6, 2006, Boston MA

- MySQL Users Conference 2006

- April 24-27, 2006, Santa Clara, California

http://www.mysqluc.com/

MySQL Certification is offered at $75 (a $200 value) if pre-registered

- Desktop Linux Summit

- April 24-25, 2006, San Diego, CA

- JavaOne Conference

- May 16-19, Moscone Center, San Francisco, CA

- Red Hat Summit

- May 30 - June 2, 2006, Nashville, TN

- 21st Int'l Supercomputer Conference

- June 27 - 30, Dresden, Germany

- O'Reilly Open Source Convention 2006

- July 24-28, 2006, Portland, OR

- LinuxWorld Conference & Expo

- August 14-17, 2006 -- in foggy San Francisco, dress warmly!!

FREE Commercial Events of Interest

- BEA Dev2DevDays

- March-April, 2006, US/Asia/Europe

http://www.bea.com/dev2devdays/index.jsp?PC=26TU2GXXEVD2

- Sun Participation Age Tour

- March 30 - April 11, 2006, visiting Phoenix, Seattle, Santa Clara, Los Angeles

Distro News

Linux Kernel

User Download [ 2.6.15.4 ]: ftp://ftp.kernel.org/pub/linux/kernel/v2.6/linux-2.6.15.4.tar.gz

Dev Download [ 2.6.16-rc5 ]: ftp://ftp.kernel.org/pub/linux/kernel/v2.6/testing/linux-2.6.16-rc5.tar.gz

Gentoo Linux

Gentoo Linux 2006.0, the first release this year, came out in February

and boasts many improvements since the 2005.1 version. Major highlights

include KDE 3.4.3, GNOME 2.12.2, XFCE 4.2.2, GCC 3.4.4, and a 2.6.15 kernel.

SUSE Linux

SUSE Linux 10.1 Beta 5 was released in February. Check here for downloads:

http://en.opensuse.org/Welcome_to_openSUSE.org

LFS 6.1.1 Released

The Linux From Scratch community has announced the release of LFS 6.1.1.

This release includes fixes for all known errata since LFS-6.1 was

released. A new branch was created to test the removal of hotplug. This

branch requires a newer kernel and a newer udev than what is currently in

the development branch. Anyone who would like to help test this branch can

read the book online, or download to read locally. If you prefer, you can

check out the book's XML source from the Subversion repository and render

it yourself:

svn co svn://svn.linuxfromscratch.org/LFS/branches/udev_update/BOOK/

http://www.linuxfromscratch.org/lfs/index.html

Novell - XGL and hardware acceleration

ZDNet reported that Novell is releasing a new graphics package for its

SUSE Linux distro into the Open Source world. The package makes fuller use

of advanced computer graphics chips to manage desktop windows and the use

of 3D and semi-transparent objects. Based on the widely used OpenGL

libraries, XGL updates the interactions between X Window System software and

modern graphics hardware.

XGL makes better use of video memory for overlaps and screen redraws

and also supports vector graphics, which could replace many of the font

bitmaps used in most Linux distros. The source code was originally released

in January, but Novell is also adding a development framework for graphics

plugins. [The Fedora project has a similar effort underway called AIGLX

for 'Accelerated Indirect GL X'.]

The code and future releases will become part of the X.Org source tree

and thus could be used by any *nix in the future. It will premiere in the

next release of SUSE, expected in the early summer.

This link shows XGL in action --

http://news.zdnet.com/i/ne/p/2006/transparency1_400x250.jpg

Software and Product News

JBoss Acquires and Open Sources ArjunaTS Transaction Monitor

JBoss(R) Inc. has acquired the distributed transaction monitor and web

services technologies owned by Arjuna Technologies and HP and will Open

Source them for the JBoss Enterprise Middleware Suite (JEMS(TM)). This

allows enterprise-quality middleware to be freely available to the mass

market.

The acquisition includes Arjuna Transaction Service Suite (ArjunaTS),

one of the most advanced and widely deployed transaction engines in the

world with a 20-year pedigree, and Arjuna's Web Services Transaction

implementation, the market's only implementation supporting both leading

web services specifications -- Web Services Transaction (WS-TX) and Web

Services Composite Application Framework (WS-CAF). This implementation is

also one of the few that has demonstrated interoperability with other

industry leaders such as Microsoft and IBM. The core Arjuna transaction

engine will be the foundation of JBoss Enterprise Service Bus (ESB).

As a co-author of the WS-TX and WS-CAF specifications, Arjuna has

developed an industrial-strength web services implementation that uniquely

supports both specs. In the web services area, a line is being drawn

between the specifications, with WS-TX supported by companies like Arjuna,

Microsoft, and IBM and WS-CAF supported by Arjuna, Oracle, and Sun among

others. With Arjuna's Web Services Transaction implementation as a core

product, JEMS bridges the gap between these two camps and remains

platform-independent.

JBoss expects to release ArjunaTS and Arjuna's Web Services Transaction

implementation as open source JEMS offerings in Q1 2006 backed by JBoss

Subscription services, training, and consulting. Like all JEMS products,

these offerings will run independently as free-standing products or on any

J2EE application server. For more information, visit www.jboss.com/products/transactions.

IBM Readies 9-way compiler for SPS-3

IBM is readying a special compiler for the new Cell Broadband Engine

chip in the forthcoming Sony PlayStation 3. That chip has a 64-bit PPC
core and 8 additional synergistic processor elements, or SPEs, for
real-time processing of gameplay. Each SPE has 256 KB of local store and can

read data into a 128-bit register, for single instruction, multiple data

tasks.

The Cell BE chip was developed in partnership with Sony and Toshiba
and is well suited to running immersive simulations as well as scientific
and signal processing applications. IBM will offer the Cell as a
processor option on its BladeCenter H chassis later this year.

The Cell compiler currently runs on Fedora Linux installations on 64-bit

x86 computers. Porting Linux to Cell based computers is an unconfirmed

option.

The Cell BE compiler implements SPE-specific optimizations, including

support for compiler-assisted memory realignment, branch prediction, and

instruction fetching. It addresses fine-grained SIMD parallelization as

well as the more general OpenMP task-level parallelization. The goal is to

provide near super-computer performance in commercial and consumer

computers.

A report on the compiler and on benchmarking the Cell is at http://www.research.ibm.com/journal/sj/451/eichenberger.html
and information on the project is at http://www.research.ibm.com/cell/.

Next generation open-source XML parser

XimpleWare recently announced the availability of version 1.5 of

VTD-XML, for both C and Java. This is a next generation open-source XML

parser that goes beyond DOM and SAX in terms of performance, memory usage

and ease of use.

XimpleWare claims VTD-XML is the world's fastest XML parser, 5x-10x

faster than DOM, and 1.5-3x faster than SAX, using a variety of file sizes.

VTD-XML features random access with built-in XPath support. It also uses a

third of the memory of a DOM parser. This allows for support of large

documents, up to 2 GB.

For demos, latest benchmarks, and software downloads, please visit http://vtd-xml.sf.net.

AJAX IDE Released As Open Source

ClearNova's AJAX-enabled ThinkCAP JX™ rapid application

development platform is now available as Open Source under the GPL license for

non-commercial distribution. AJAX (Asynchronous JavaScript and XML) is a

set of programming techniques that allow Web applications to be much more

responsive and provide usability on par with traditional client/server

applications.

At the core of ThinkCAP's AJAX framework are two popular Open Source

AJAX projects: prototype and script.aculo.us. These

libraries provide excellent base functionality and are the two projects

driving the AJAX functionality of the Ruby-On-Rails project.

ThinkCAP JX allows 4GL developers to rapidly build web-based

applications without having to become JAVA, XML and JavaScript experts.

ThinkCAP JX is available for download at www.thinkcap.org.

ThinkCAP JX runs on any operating system and Java application servers

such as IBM WebSphere, BEA Weblogic, JBoss, Tomcat, Jetty, and Resin, among

others.

Lexar and Google Offer USB Flash Drives with Web Applications

Lexar Media, Inc., is bringing Google applications directly to customers

by including Picasa, Google Toolbar and Google Desktop Search applications

on its line of popular USB flash drives. The offering is the first time

consumers will be able to install Google applications from a USB flash

drive directly to their desktop.

Customers purchasing a Lexar JumpDrive simply plug the device
into the USB port and are prompted with instructions to install the

free applications. If the user accepts installation, Google products

automatically install to their computer and are then removed from the USB

flash drive.

First WiMAX products get certified

In January, the WiMAX Forum began issuing certifications for products

meeting the 802.16-2004 IEEE standard. If fully implemented, WiMax supports

a range of several miles and speeds of up to 40Mbps. The standard for

mobile WiMax is 802.16e and was ratified in December of 2005.

Some last minute changes to the standard in late 2005 delayed these

first certifications for products in the European-designated 3.5GHz radio

frequency band. Certifications for the 2.5GHz radio frequency band used in

the US will start in the middle of 2006. Equipment makers seeking

certification include Redline Communications, Sequans Communications, and

Wavesat. Find more WiMAX info here: http://www.eweek.com/article2/0,1895,1912528,00.asp

Also See: Silicon Valley eyes wireless network - Partnership sets goal:

1,500 square miles of broadband access

http://www.sfgate.com/cgi-bin/article.cgi?f=/c/a/2006/01/28/BUG4FGUPFQ1.DTL&hw=Wireless+bids&sn=005&sc=829

Magical Realism... (non-Linux news of general interest)

Quantum Computation is... no computation?

[from:

www.physorg.com/]

By combining quantum computation and quantum interrogation, scientists

at the University of Illinois at Urbana-Champaign have found an exotic way

of determining an answer to an algorithm... without ever running the

algorithm.

Using an optical-based quantum computer, a research team led by

physicist Paul Kwiat has presented the first demonstration of

"counterfactual computation," inferring information about an answer, even

though the computer did not run. The researchers reported their work in the

Feb. 23 issue of Nature.

Quantum computers have the potential for solving certain types of

problems much faster than classical computers. Speed and efficiency are

gained because quantum bits can be placed in superpositions of one and

zero, as opposed to classical bits, which are either one or zero. Moreover,

the logic behind the coherent nature of quantum information processing

often deviates from intuitive reasoning, leading to some surprising

effects.

"It seems absolutely bizarre that counterfactual computation - using

information that is counter to what must have actually happened - could

find an answer without running the entire quantum computer," said Kwiat, a

John Bardeen Professor of Electrical and Computer Engineering and Physics

at Illinois. "But the nature of quantum interrogation makes this amazing

feat possible."

"In a sense, it is the possibility that the algorithm could run which

prevents the algorithm from running," Kwiat said. "That is at the heart of

quantum interrogation schemes, and to my mind, quantum mechanics doesn't

get any more mysterious than this."

Paul G. Allen Launches PDPplanet.com Site

Investor, philanthropist and co-founder of Microsoft Paul G. Allen

unveiled a new Web site, www.PDPplanet.com,

as a resource for computer history fans and those interested in Digital

Equipment Corporation (DEC) systems and XKL systems. From a PDP-8/S to a

DECSYSTEM-20 to a Toad 1, Allen's collection of systems from the late 1960s

to the mid-1990s preserves the significant software created on these early

computers.

Via the new Web site, registered users from around the world can telnet

into a working DECsystem-10 or an XKL Toad-1, create or upload programs,

and run them -- essentially stepping back in time to access an "antique"

mainframe, and getting a sense of how it felt to be an early programmer.

Along with Allen's Microcomputer Gallery at the New Mexico Museum
of Natural History and Science in Albuquerque (opening later this year)
and the Computer History Museum in Mountain View, California, PDP Planet
provides an important exploration of early computer technology.

Bounty on MS Windows critical flaws

It's MS bug season! IDefense has offered a bounty of $10,000 for

uncovering major Windows flaws, but only if MS will identify them as

critical. Previously, TippingPoint offered bug 'bonuses' of $1,000-20,000

as part of its Zero Day Initiative [http://www.zerodayinitiative.com/benefits.html].

In an email made public, MS was critical of offering any compensation

for efforts now undertaken by computer security companies. "Microsoft

believes that responsible disclosure, which involves making sure that an

update is available from software vendors the same day the vulnerability is

first broadly known, is the best way to protect the end user."

How to Build WIMAX Networks

NetworkAnatomy, a Northern California wireless communications company,

has taken the lead, on-line, in providing a low-cost [USD$175] reality

engineering education series via its monthly OnLine-CTO emagazine. The goal

is to overcome the lack of practical WiMax training in the US, where very

few WiMax projects have been initiated and there is only a small pool of

experienced engineers.

NetworkAnatomy's effort offers "how to" installments, with reference

material and skill tests. Click the blinking "New Service -- OnLine-CTO",

at the www.networkanatomy.com

website, subscribe, and dive into the WIMAX engineering series.

NetworkAnatomy can also be contacted by email through [email protected].

I, Robot....

Robotics Trends and IDG World Expo announced in January that Carnegie

Mellon University's Robot Hall of Fame will hold its 2006 induction

ceremony at the 3rd annual RoboBusiness Conference and Exposition, the

international business development event for mobile robotics and

intelligent systems.

The conference and exposition will be held in Pittsburgh, PA on June

20-21, 2006. The event website is http://www.robobusiness2006.com

According to Dan Kara, conference chairman and President of Robotics

Trends, Inc., "We are extremely pleased to announce that the 2006 Robot

Hall of Fame induction ceremony will be part of the RoboBusiness Conference

and Exposition. The Robot Hall of Fame induction adds a great deal of

excitement, energy, prestige and glamour to the RoboBusiness event. Past

inductees to the Robot Hall of Fame include some of the most significant

and well known robots in the world including Honda's Asimo, NASA's Mars

Pathfinder and Unimate, the first industrial robot arm that worked on the

assembly line. The Robot Hall of Fame jurors are an equally distinguished

collection of international scholars, researchers, writers, and designers

including Gordon Bell, Arthur C. Clarke, Steve Wozniak, Rodney Brooks and

others. With the addition of the Robot Hall of Fame induction ceremony, the

RoboBusiness event becomes even more impactful, and certainly more

entertaining."

![[BIO]](../gx/authors/dyckoff.jpg) Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Copyright © 2006, Howard Dyckoff. Released under the

Open Publication license

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 124 of Linux Gazette, March 2006

Interfacing with the ISA Bus

By Abhishek Dutta

Abstract: The parallel port is a very popular choice for interfacing.

Although there are 8 data output lines as well as the CONTROL and STATUS

pins of the parallel port, this is often not sufficient for some complex

projects, which require more data I/O lines. This project shows how to get

32 general purpose I/O lines by interfacing with the ISA Bus. Though the

PCI bus can be a candidate for interfacing experiments, its greater speed

and feature-rich nature present great complexity in terms of hardware and

software to beginners. This project can be a stepping stone to those

thinking of ultimately getting to the PCI Bus for interfacing experiments.

It can also be useful for those thinking of making a Digital Oscilloscope

using a PC, A/D and D/A converters, a Microcontroller programmer, etc.

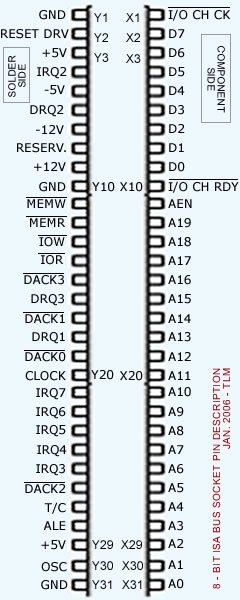

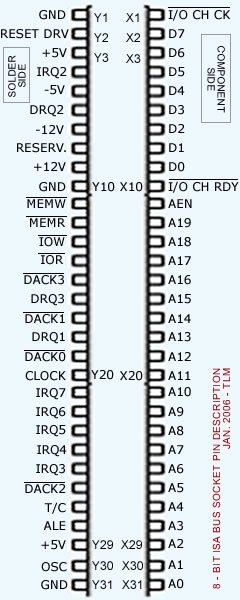

First, let's get familiar with the ISA connector:

Pin Description

We have designated as X(n) the side that contains components on

all standard ISA cards. Similarly, Y(n) is the side that contains the

solder. It is very important for you to be clear on the above convention:

you will damage your motherboard if you mistake one for the other.

Descriptions of the most commonly used pins are given below:

Side X

- D0 - D7 (pins X2 to X9)

- These are the 8 data lines representing the 8-bit data bus.

- A0 - A19 (pins X31 to X12)

- These are the 20 address lines that carry the address bits. This bus can address 1 MB (2^20 = 1048576 bytes).

- AEN (pin X11)

- It is used by the DMA controller to take over the data and address buses during a DMA transfer.

Side Y

- GND (pins Y1,Y10,Y31)

- They are connected to ground of computer.

- +5V (pin Y3)

- +5 Volt DC output

- -5V (pin Y5)

- -5 Volt DC output

- +12V (pin Y9)

- +12 Volt DC output

- -12V (pin Y7)

- -12 Volt DC output

- MEMW (pin Y11)*

- The microprocessor makes this line LOW while doing WRITE TO MEMORY.

- MEMR (pin Y12)*

- The microprocessor makes this line LOW while doing READ FROM MEMORY.

- IOW (pin Y13)

- The microprocessor makes this line LOW while doing WRITE TO PORT. (e.g., when you call outportb(ADDRESS, BYTE), this line goes LOW.)

- IOR (pin Y14)

- The microprocessor makes this line LOW while doing READ FROM PORT. (e.g., when you call byte = inportb(ADDRESS), this line goes LOW.)

- DACK0 - DACK3 (pins Y19,Y17,Y26,Y15)*

- The DMA controller signals on these lines to let devices know that the DMA controller has control of the buses.

- DRQ1 - DRQ3 (pins Y18,Y6,Y16)*

- These pins allow the peripheral boards to request the use of the buses.

- T/C (pin Y27)*

- The DMA controller sets this signal to let the peripheral know that the programmed number of bytes has been sent.

- IRQ2 - IRQ7 (pins Y4,Y25,Y24,Y23,Y22,Y21)*

- Interrupt Signals. The peripheral devices set these signals to request the attention of the microprocessor.

- ALE (pin Y28)*

- Address Latch Enable. The microprocessor uses this signal to latch the lower 16 address lines during a memory or port input/output operation.

- CLOCK (pin Y20)*

- The system clock

- OSC (pin Y30)*

- It is a high frequency clock which can be used for the I/O boards.

* these pins will not be used in this project

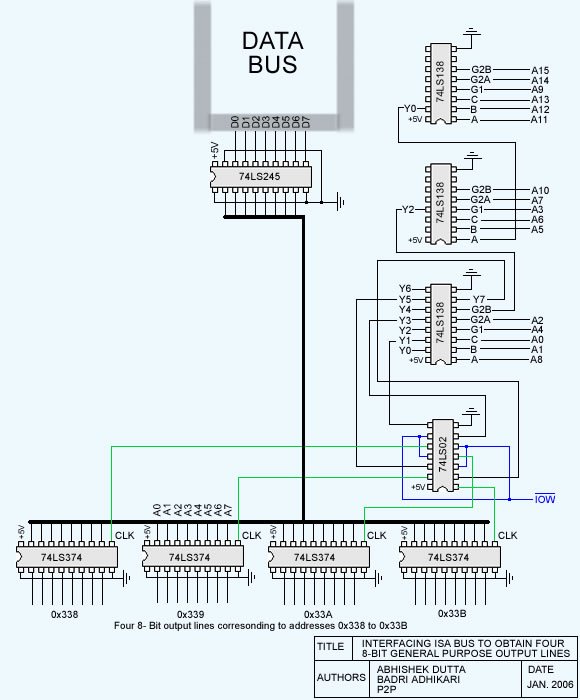

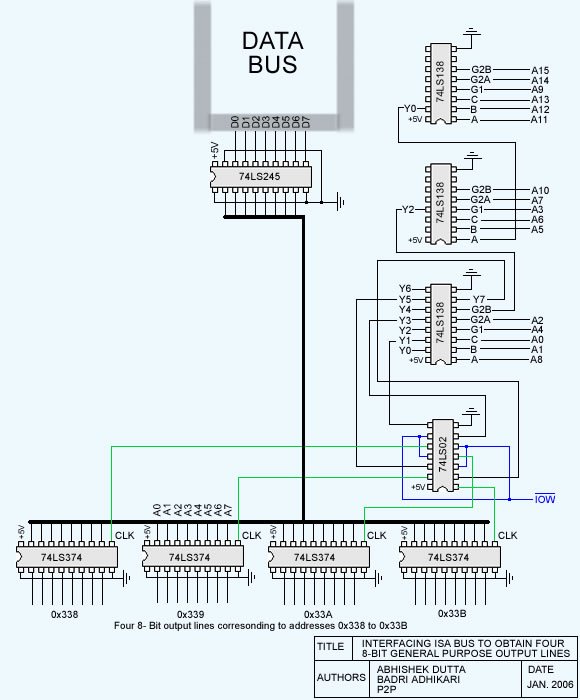

Getting Four Output lines out of an ISA Bus

Before going into the details of the full project let's examine the part

that handles the four 8-bit output lines. The addresses in the range 0x338

to 0x33B were not in use by any devices for input/output operations in our

computer.

The three 74LS138 ICs handle the address decoding. We configured the
circuit to produce a short pulse on the CLOCK line (represented by green
lines on the schematic) whenever a port write (IOW low) is addressed to a
port in the range 0x338 to 0x33B.

Whenever the 74LS374 gets a CLOCK pulse it latches in the 8-bit data

present in the data bus. 74LS245 is a 3-state Octal Bus Transceiver. It

reduces DC loading by isolating the data bus from external loads.

[ This is true, at least in theory.

Don't use the output to power your favorite toaster oven,

and avoid shorting it to Vss or Vcc; anything other than an optocoupler may

not isolate quite as well as the manufacturer promises, and IC shrapnel is

difficult to pick out of the ceiling. -- Ben ]
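On the software side, latching a byte into one of the four output registers is a single port write. The pin list mentions outportb() and inportb(), which are DOS-era compiler library calls; on x86 Linux the glibc counterparts are outb() and inb() from `<sys/io.h>`, after ioperm() has granted access to the ports. What follows is only a minimal sketch under those assumptions: the helper names (`port_for`, `isa_open`, `isa_write`) are hypothetical and not taken from the project, and the program must run as root.

```c
#include <assert.h>
#include <stdio.h>

#if defined(__linux__) && (defined(__i386__) || defined(__x86_64__))
#include <sys/io.h>             /* ioperm() and outb() from glibc */
#define HAVE_PORT_IO 1
#else
#define HAVE_PORT_IO 0          /* other platforms: compile as stubs */
#endif

/* Output range from the article: 0x338 to 0x33B, one port per latch. */
enum { OUT_BASE = 0x338, NUM_PORTS = 4 };

/* Map an output channel number 0..3 to its port address. */
unsigned short port_for(unsigned channel)
{
    assert(channel < NUM_PORTS);
    return (unsigned short)(OUT_BASE + channel);
}

/* Ask the kernel for access to the four output ports (needs root).
 * Returns 0 on success, -1 on failure or on unsupported platforms. */
int isa_open(void)
{
#if HAVE_PORT_IO
    if (ioperm(OUT_BASE, NUM_PORTS, 1) != 0) {
        perror("ioperm");
        return -1;
    }
    return 0;
#else
    return -1;
#endif
}

/* Write one byte to a channel. On the bus this is an IOW cycle, which
 * is what makes the decoder pulse the CLOCK input of the latch. */
void isa_write(unsigned channel, unsigned char value)
{
#if HAVE_PORT_IO
    outb(value, port_for(channel));     /* glibc order: value, then port */
#else
    (void)channel;
    (void)value;
#endif
}
```

A caller would check that `isa_open()` returned 0 and then call, for example, `isa_write(0, 0x55)` to place the pattern 01010101 on the first latch. Note that the glibc argument order (value first, port second) is the reverse of outportb().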

To figure out which I/O port addresses are available for use in this

project, we examined the contents of ioports in the /proc

directory of our Linux system:

    [root@thelinuxmaniac~]# cat /proc/ioports
    0000-001f : dma1
    0020-0021 : pic1
    0040-0043 : timer0
    .......................
    .......................
    01f0-01f7 : ide0
    0378-037a : parport0
    037b-037f : parport0
    03c0-03df : vga+
    .......................
    .......................

It is clear from the above output that the addresses 0x238-0x23B and
0x338-0x33B are not being used by any device. This is often the case on
most computers. However, if these addresses are occupied by some device on
your machine, you will have to change the wiring of the address lines to
the three 74LS138 ICs.

We'll describe the address decoding technique briefly so that you can

set up available addresses for the I/O device you are trying to build.

Address Decoding

We used the 74LS138 3-to-8 decoder/demultiplexer for address decoding.
Suppose we want to assign the addresses 0x338-0x33B to four 8-bit output
lines and 0x238-0x23B to four 8-bit input lines. The binary equivalents of
these addresses are:

| Address |

| 0x338 |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

1 |

1 |

1 |

0 |

0 |

0 |

| 0x339 |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

1 |

1 |

1 |

0 |

0 |

1 |

| 0x33A |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

1 |

1 |

1 |

0 |

1 |

0 |

| 0x33B |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

1 |

1 |

1 |

0 |

1 |

1 |

| 0x238 |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

1 |

1 |

1 |

0 |

0 |

0 |

| 0x239 |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

1 |

1 |

1 |

0 |

0 |

1 |

| 0x23A |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

1 |

1 |

1 |

0 |

1 |

0 |

| 0x23B |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

1 |

1 |

1 |

0 |

1 |

1 |

| Address Lines |

A15 |

A14 |

A13 |

A12 |

A11 |

A10 |

A9 |

A8 |

A7 |

A6 |

A5 |

A4 |

A3 |

A2 |

A1 |

A0 |

The only address lines that change across the eight addresses are A8, A1,
and A0 (the whole process of connecting wires to the 74LS138 ICs is like
solving a puzzle!) Connect the remaining address lines to the first two
74LS138s so that they produce a LOW output only when those lines carry the
bits common to all of our addresses. Now, connect the three lines named
above to the third 74LS138. All 8 outputs of this IC are used to select the
74LS374 latches corresponding to the input and output addresses, after each
output is NORed with IOR or IOW; we used the 74LS02 to distinguish between
memory IO and port IO addressing.
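The decoding scheme just described can be modelled in a few lines of Python. This is only a sketch for experimenting with other address ranges: the names `decode`, `FIXED_MASK`, and `FIXED_BITS` are ours, and the mapping of A8, A1, and A0 onto the decoder's C, B, and A inputs is an assumption, since the wiring order is not spelled out in the text.

```python
# Thirteen address lines are fixed; the three that vary (A8, A1, A0)
# pick one of the eight outputs of the third decoder.
# ASSUMPTION: A8 -> C, A1 -> B, A0 -> A. The real board may differ.

VARYING = (1 << 8) | (1 << 1) | (1 << 0)    # A8, A1, A0
FIXED_MASK = 0xFFFF & ~VARYING              # the other 13 address lines
FIXED_BITS = 0x0338 & FIXED_MASK            # pattern those lines must match


def decode(address):
    """Return the selected output index (0-7), or None if there is no match."""
    if (address & FIXED_MASK) != FIXED_BITS:
        return None
    a8 = (address >> 8) & 1
    a1 = (address >> 1) & 1
    a0 = address & 1
    return (a8 << 2) | (a1 << 1) | a0


for addr in (0x238, 0x239, 0x23A, 0x23B, 0x338, 0x339, 0x33A, 0x33B, 0x378):
    print(hex(addr), "->", decode(addr))
```

To try a different free address block, change the constant used to build `FIXED_BITS` and check that only the intended eight addresses return an index.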

74LS138 Truth Table

| G1 | G2 | C | B | A | Y0 | Y1 | Y2 | Y3 | Y4 | Y5 | Y6 | Y7 |
|----|----|---|---|---|----|----|----|----|----|----|----|----|
| X | H | X | X | X | H | H | H | H | H | H | H | H |
| L | X | X | X | X | H | H | H | H | H | H | H | H |
| H | L | L | L | L | L | H | H | H | H | H | H | H |
| H | L | L | L | H | H | L | H | H | H | H | H | H |
| H | L | L | H | L | H | H | L | H | H | H | H | H |
| H | L | L | H | H | H | H | H | L | H | H | H | H |
| H | L | H | L | L | H | H | H | H | L | H | H | H |
| H | L | H | L | H | H | H | H | H | H | L | H | H |
| H | L | H | H | L | H | H | H | H | H | H | L | H |
| H | L | H | H | H | H | H | H | H | H | H | H | L |

Refer to the 74LS138 datasheet for details
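For readers who prefer code to tables, the truth table above can be written as a small Python function. It is a sketch of the table only, not of the chip's timing; the function name `ls138` is ours, and `g2` stands for the single enable column labelled G2 in the table.

```python
def ls138(g1, g2, c, b, a):
    """Return the outputs [Y0..Y7] for the given inputs (1 = HIGH, 0 = LOW).

    The decoder is enabled only when G1 is HIGH and G2 is LOW; the selected
    output then goes LOW while all the others stay HIGH.
    """
    outputs = [1] * 8
    if g1 == 1 and g2 == 0:
        outputs[(c << 2) | (b << 1) | a] = 0
    return outputs


print(ls138(1, 0, 0, 1, 1))   # enabled, C B A = L H H
print(ls138(0, 0, 0, 1, 1))   # disabled by G1
```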

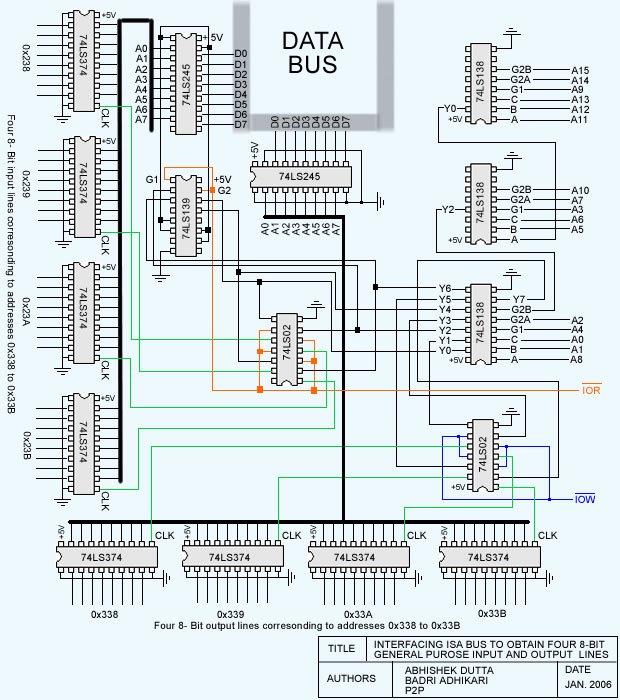

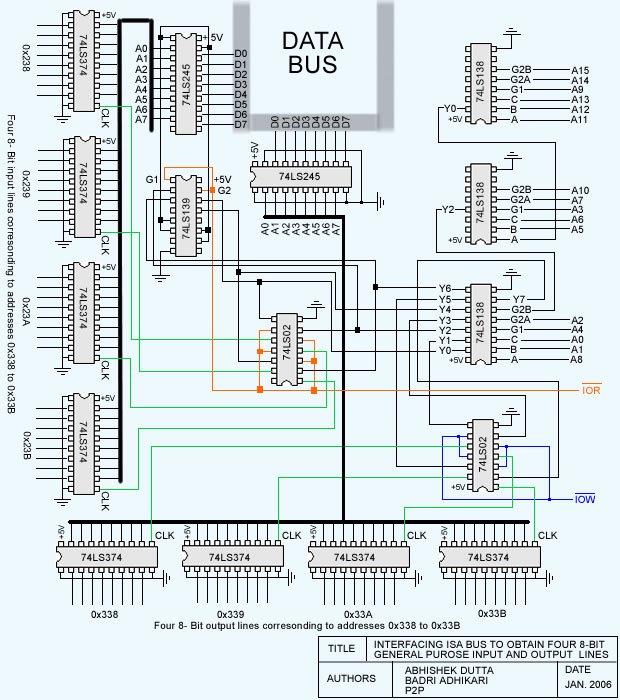

The Real Stuff

Now, finally, we are ready to describe the functioning of the complete circuit.

Description of the ICs used in this project

- 74LS138 and 74LS139

- Decoders/de-multiplexers; used for address decoding

- 74LS245

- Octal 3-state Buffer/Line Driver/Line Receiver

- 74LS374

- Octal D-type edge-triggered flip-flop with 3-state outputs (its datasheet is shared with the 74LS373 octal transparent latch)

- 74LS02

- Quad 2-input NOR gate

The three 74LS138 ICs are used for address decoding along with the two
74LS02s (2-input NOR gates). Whenever a match is found on the address
lines, the respective output line, Y(x), of the third 74LS138 (the one
connected to the two 74LS02s) goes LOW. These lines, along with IOW (and
IOR), are connected to the NOR gates, each of which produces a HIGH only
when both of its inputs are LOW simultaneously.

Hence, the output is high only when:

- a match is found in the address lines

- the IOW or IOR lines go LOW, representing the PORT IO operation.

Remember, if we do not consider the second case, our device will

conflict with the memory IO operations in the addresses 0x238-0x23B and

0x338-0x33B.

We can see in the circuit diagram that the output lines of NOR gates

are connected to the CLOCK pins of the 74LS374 latch. So, whenever the

above two cases match simultaneously, the CLOCK pulse is sent to the

respective latch and the data that is present on the data bus at that

moment is latched in.
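The gating logic described above can be tried out in software before touching a soldering iron. The sketch below is a simplified model, not a timing-accurate simulation: `nor`, `Latch374`, and `write_cycle` are hypothetical names, and the latch is modelled as capturing the data bus on the rising edge of its clock input, which is how a 74LS374 behaves.

```python
def nor(x, y):
    """2-input NOR gate: HIGH (1) only when both inputs are LOW (0)."""
    return int(not (x or y))


class Latch374:
    """Simplified 8-bit register that captures data on a rising clock edge."""

    def __init__(self):
        self.q = 0       # value currently held on the outputs
        self._clk = 0    # previous clock level, used to detect the edge

    def clock(self, level, data_bus):
        if level == 1 and self._clk == 0:
            self.q = data_bus & 0xFF
        self._clk = level
        return self.q


def write_cycle(latch, select_n, iow_n, data_bus):
    """One bus cycle. select_n is the decoder output (0 = address matched);
    iow_n is the IOW line (0 = port write in progress)."""
    latch.clock(nor(select_n, iow_n), data_bus)   # lines asserted
    latch.clock(nor(1, 1), data_bus)              # lines released, clock LOW again
    return latch.q


latch = Latch374()
print(hex(write_cycle(latch, 0, 0, 0x80)))   # address match and IOW: data captured
print(hex(write_cycle(latch, 0, 1, 0x55)))   # address match without IOW: ignored
```

The second call shows why the IOW line matters: a memory access to the same address leaves the latch untouched.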

Coding - controlling the I/O lines using C

isa.c illustrates some simple coding methods to control and test the I/O
lines of the device created in this project.

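    /* ioperm() returns 0 on success, so these branches handle failure */
    if(ioperm(OUTPUT_PORT,LENGTH+1,1))
    {
        ...
    }
    if(ioperm(INPUT_PORT,LENGTH+1,1))
    {
        ...
    }

    outb(data,port);     /* write one byte to the port */
    data = inb(port);    /* read one byte from the port */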

ioperm() asks the kernel for permission to access the specified ports
(this requires root privileges); the outb() and inb() functions (declared
in sys/io.h) write a byte to and read a byte from the specified port,
respectively.
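The calls above are C. For quick experiments from a script, Linux also exposes the I/O ports through the /dev/port character device, where the file offset is the port number. The sketch below uses that different mechanism, not the ioperm()/outb() route taken by isa.c; the helper names `port_write` and `port_read` are ours, and an in-memory buffer stands in for the device so the example runs without root or hardware.

```python
import io


def port_write(dev, port, value):
    """Write one byte to the given port number."""
    dev.seek(port)
    dev.write(bytes([value & 0xFF]))


def port_read(dev, port):
    """Read one byte from the given port number."""
    dev.seek(port)
    return dev.read(1)[0]


# On real hardware, as root, the device would be opened like this:
#     dev = open("/dev/port", "r+b", buffering=0)
# Here a zero-filled buffer plays the role of the port space. Unlike this
# buffer, the board's output latches cannot be read back at 0x338; its
# inputs live at 0x238-0x23B.
fake = io.BytesIO(bytes(0x400))
port_write(fake, 0x338, 0x80)
print(hex(port_read(fake, 0x338)))
```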

Some Debugging Techniques

It is not easy to get a complex project to work just by reading an

article like this. At some point you will need to debug your hardware.

Hopefully, these debugging techniques will help you (as they have helped us

- a lot!) to find the problem in your work. You will need a multimeter and

some LEDs. What we learned while debugging is that LEDs are the best way to

debug hardware of this nature when you don't have sophisticated debugging

instruments. Some important techniques we discovered:

- Use of Multimeter

- A multimeter will be very useful to check the zeros and ones coming

across ICs. Verify that expected output is coming at every IC. ZERO will be

measured as 0.8V and ONE will be measured as 3.8V (this will vary with

computer). This can be used if the address decoding does not work, or when

unexpected data is seen on the output lines. DO NOT CONNECT THE

MULTIMETER PROBES DIRECTLY TO DATA BUS OR THE ADDRESS LINES. ALWAYS CONNECT

IT TO THE OUTPUT OF THE RESPECTIVE ICS!

- Use of LEDs

- LEDs are very useful for verifying the data bits on the output lines:
an LED connected to a latch output lights up when that bit is set. To check
whether the clock pulse is reaching the right latch, connect an LED to
that latch's CLK pin and write to its port in a continuous loop, like this:

    while(1){
        outb(0x80,0x338);
    }

There are lots of other debugging techniques which you will probably

discover by yourself when you run into problems. Try to ensure that the

wiring at the connector that goes into the ISA slot is correct. We checked

every part of the device (every IC, all those jumper wires, etc.) and after

debugging for about a week we found that IOW and IOR wires were connected

to the wrong pins in the ISA slot. So, recheck the wiring. Fortunately, we

did not mistake the 12V pin for a 5V pin! ;)

The photo of the device that we constructed is here.

You can get more details and photos related to this project at http://www.myjavaserver.com/~thelinuxmaniac/isa


I am studying Computer Engineering at the Institute of Engineering,

Pulchowk Campus (NEPAL). I love to program in the Linux Environment. I like

coding in C, C++, Java and Web Site

Designing (but not always). I like participating in online programming

contests like that at topcoder.com. My

interests keep on changing and I love reading books on programming, murder

mysteries (Sherlock Holmes, Agatha Christie, ...) and watching movies.

Copyright © 2006, Abhishek Dutta. Released under the

Open Publication license

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 124 of Linux Gazette, March 2006

PyCon 2006 Dallas

By Mike Orr (Sluggo)

My most distinct impression of Dallas was the birds. Two hundred on the

electric wires. Another two hundred swoop in for the party. Others sit on

nearby trees. Four times as many birds as I've ever seen in one

place. All different kinds, all chirping loudly at each other. Now I know

where birds go when they fly south for the winter: to Dallas. Especially to

the intersection of Belt Line Road and the North Dallas Tollway. Around the

intersection stand four or five steel-and-glass skyscrapers. Corporate execs

watch the birds through their windows -- and get pooped on when they

venture outside. But this isn't downtown; it's a suburb, Addison, with a

shopping mall across the tollway. Behind CompUSA is the Marriott hotel where

the Python conference took place.

PyCon was started three years ago as a

low-budget developers' conference. It had always met at the George Washington

University in Washington DC, but attendance has been growing by leaps each

year and had reached the facility's capacity. After a nationwide search, the

Python Software Foundation decided to sign a two-year contract with a Dallas

hotel. That was partly because the Dallas organizers were so enthusiastic and

hard-working, the hotel gave us an excellent deal, and we wanted to see

how many people in the southern US would attend a conference if it were closer

to home. There's no scientific count, but I did meet attendees from Texas,

Utah, Arizona, Nevada, and Ohio, many of whom said they had never been to PyCon

before. Overall attendance was 410, down from 450. Not bad considering the

long move and the fact that many people won't go to Dallas because it's, well,

Dallas. But the break-even point was in the high 300s so it didn't bust the

bank. The layout had all the meeting rooms next to each other and there were

sofas in the hallway, so it was easier to get to events and hold impromptu

discussions than last year. The hotel staff was responsive; there were techs

on-hand to deal with the sound system. The main problem was the flaky wi-fi,

which will hopefully be improved next year (read "better be improved next

year".) A hand goes out to Andrew Kuchling, who proved his ability not only in

coding and documentation ("What's New in Python 2.x?") but also in

conference organizing. This was his first year running PyCon, and he got

things running remarkably smoothly.

There seemed to be more international attendees this year. I met people

from the UK, Ireland, Germany, the Netherlands, Sweden, Japan, Argentina, and a

German guy living in China. This is in spite of the fact that EuroPython and

Python UK are now well established, and the main pycon.org site is now an umbrella covering

both.

Here's the conference schedule. Several of the

talks were audio- or video-recorded and will be available here.

Keynotes

Guido [Note 1] delivered two keynotes. One was his usual "State of the Python Universe".

The other was a look backward at Python's origins. The latter covered

territory similar to this 2003 interview, which explains

how Guido created Python to try out some language ideas and improve on ABC,

a language he'd had both good and bad experiences with. He also explained

why Python doesn't have type declarations: "Declarations exist to slow down

the programmer." There you have it.

The state of the Python universe has three aspects: community activity,

changes coming in Python 2.5, and changes coming in Python 3.0. There has been

a lot of activity the past year:

- The Python

source is now hosted at python.org in

Subversion rather than in SourceForge CVS.

- The

Buildbot, which was written in

Python, now watches over the Python source. Whenever somebody checks in a

patch, the bot tells several volunteer computers on different architectures to

download and compile Python and report if anything has broken. "The guilty

developer can be identified and harassed without human intervention." The

current results are visible on the

Web.

- A new website layout for python.org will

be rolled out March 5th.

- EasyInstall

and eggs are

getting some real-world use, especially with the

TurboGears project, which depends on

some ten third-party packages. ("TurboGears would not have been possible

without eggs," says its main developer.)

An .egg is like a Java .jar file: a package that knows its

version, what it depends on, and what optional services it can provide to other

packages or take from other packages. This is similar to .rpm and .deb but is

OS-neutral. It is expected that Linux package managers will eventually use

eggs for Python packages. Eggs can be installed as directories or zip files.

easy_install.py is a convenient command-line tool to download and

install Python packages (eggs or tarballs) in one step. It will get the

tarball from the Python Cheese Shop [Note 2] (formerly known as the Python Package Index),

or scrape the Cheese Shop webpage for the download URL. You can also

provide the tarball directly, or another URL to scrape. Regardless of whether

the original was an egg or a simple tarball, EasyInstall will install it as an

egg, taking care of the *.pth magic needed for eggs.

Waitress: Well, there's egg and bacon; egg sausage and bacon; egg

and spam; egg bacon sausage and spam; spam bacon sausage and spam; spam egg

spam spam bacon and spam....

Wife: Have you got anything without spam?

Waitress: Well, there's spam egg sausage and spam, that's not got

much spam in it.

Wife: Could you do the egg bacon spam and sausage without the spam

then?

Python 2.5 changes

The first alpha is expected May 6; the final by September 30. Python 2.4.3

will be released in April, followed by 2.4.4, the last of the 2.4 series.

What's

New in Python 2.5 is unfinished but explains the changes better than I

can.

The most sweeping changes are the generator enhancements

(PEP 342)

and the new with keyword

(PEP 343). These allow

you to write coroutines and safe blocks.

"Safe" means you can guarantee a file will be closed or a lock released

without littering your code with try/finally stanzas. The

methodology is difficult to understand unless you have a Computer Science

degree, but the standard library will include helper functions like the

following:

    #### OPENING/CLOSING A FILE ####
    f = open("/etc/passwd", "r")
    with f:
        for line in f:    # Read the file line by line.
            words = line.strip().split(":")
            print words[0], words[1]    # Print everybody's username and password.
    # The file is automatically closed when leaving this block, no matter
    # whether an exception occurs, or you return or break out, or you simply
    # fall off the bottom.

    #### THREAD SYNCHRONIZATION ####
    import thread
    lock = thread.allocate_lock()
    with lock:
        do_something_thread_unsafe()
    # The lock is automatically released when leaving this block.

    #### DATABASE TRANSACTION ####
    with transaction:
        c = connection.cursor()
        c.execute("UPDATE MyTable SET ...")
    # The transaction automatically commits when leaving this block, or
    # rolls back if an exception occurs.

    #### REDIRECT STANDARD OUTPUT ####
    f = open("log.txt", "w")
    with stdout_redirected(f):
        print "Hello, log file!"
    # Stdout is automatically restored when leaving this block.

    #### CURSES PROGRAMMING ####
    with curses.wrapper2() as stdscr:    # Switch the screen to CURSES mode.
        stdscr.addtext("Look ma, ASCII graphics!")
    # Text mode is automatically restored when leaving this block.

The same pattern works for blocking signals, pushing/popping the locale

or decimal precision, etc.
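To show what sits behind a helper like stdout_redirected, here is one way it could be written with the contextlib.contextmanager decorator. Treat it as a sketch rather than the standard library's own code: it is written for present-day Python 3 (hence print() as a function), and the body of the helper is our guess at a reasonable implementation.

```python
import sys
from contextlib import contextmanager
from io import StringIO


@contextmanager
def stdout_redirected(new_stdout):
    """Send print output to new_stdout for the duration of the with block."""
    saved = sys.stdout
    sys.stdout = new_stdout
    try:
        yield new_stdout
    finally:
        # Runs whether the block finishes normally or raises.
        sys.stdout = saved


buf = StringIO()
with stdout_redirected(buf):
    print("Hello, log file!")

print("captured:", repr(buf.getvalue()))
```

Everything before the yield plays the role of the "enter" step and the finally clause is the "exit" step, which is what makes the cleanup automatic.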

Coroutines are generators that you can inject data into at runtime.

Presumably the data will be used to calculate the next yield

value. This not only models a series of request/response cycles, but it

also promises to radically simplify asynchronous programming, making

Twisted much more accessible. Twisted

uses callbacks to avoid blocking, and that requires you to split your code into

many more functions than normal. But with coroutines those "many functions"

become a single generator.
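A tiny illustration of injecting data into a running generator, using the send() method that PEP 342 adds. The running_average example is ours and is written in present-day Python 3 syntax; it is meant only to show the mechanics, not the asynchronous use case.

```python
def running_average():
    """Coroutine: receives numbers via send() and yields the average so far."""
    total = 0.0
    count = 0
    average = None
    while True:
        value = yield average    # pause here until the caller sends a value
        total += value
        count += 1
        average = total / count


avg = running_average()
next(avg)                        # advance to the first yield before sending
results = [avg.send(v) for v in (10, 20, 60)]
print(results)
```

Each send() resumes the generator where it paused, so the state (total and count) lives in ordinary local variables instead of being spread across callbacks.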

Other changes for 2.5 include:

Guido resisted ctypes for a long time because it "provides new

ways to make a Python program dump core" by exposing it to arbitrary C bugs.

Skip Montanaro responded by listing several ways you can already make Python

dump core (try these at

home), and it was decided that ctypes wasn't any worse than

those.

Python 3.0 changes

Now that Guido is employed at Google and can spend 50% of his paid time

hacking Python, 3.0 doesn't have to wait until sometime after his 5-year-old

son Orlijn graduates college. The planned changes for 3.0 are listed in PEP 3000. Guido

highlighted the string/unicode issue: the str type will be

Unicode, and a new bytes type will be for arbitrary byte

arrays.
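In practice the split looks roughly like this. The snippet is a sketch in Python 3 as it was eventually released, and the sample string is ours.

```python
text = "caf\u00e9"                  # str: a sequence of Unicode characters
data = text.encode("utf-8")         # bytes: the raw bytes after encoding

print(len(text), len(data))         # characters versus bytes
print(type(text).__name__, type(data).__name__)
print(data.decode("utf-8") == text)
```

The accented letter is one character in the str but two bytes in UTF-8, which is exactly the distinction the two types are meant to keep visible.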

There's one late-breaking change, or rather a non-change: the unloved

lambda will remain as-is forever. lambda creates

anonymous functions from expressions. Attempts to abolish it were undone by

use cases that required syntax that's arguably worse than lambda,

and Ruby-style anonymous code blocks didn't fare any better. Programmers who

overuse lambda should still be shot, however.
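For anyone who has not met it, this is the kind of thing lambda is used for. The example is ours, in Python 3 syntax, with a named function alongside for comparison.

```python
words = ["spam", "egg", "sausage", "bacon"]

# An anonymous function built from a single expression.
by_length = sorted(words, key=lambda w: len(w))


# The same thing with an ordinary named function.
def word_length(w):
    return len(w)


print(by_length)
print(sorted(words, key=word_length) == by_length)
```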

BitTorrent

The other keynotes were on Plone and

BitTorrent. I missed the Plone talk,

but for BitTorrent Steve Holden interviewed Bram Cohen, BitTorrent's creator.

Bram talked about hacking while slacking (he wrote most of his code while

intentionally unemployed and living on savings), his new job as the owner of

BitTorrent Inc (not much time to code), why he chose Python for BitTorrent,

why BitTorrent doesn't make you into a carrier of malware (you won't be

uploading anything you didn't specifically request to download), Pascal, and

many other topics.

I made a wonderful faux pas the first evening at dinner when I sat

next to Bram, unaware he was going to speak. I asked what he did, and he said

he invented BitTorrent. I didn't remember what that was and kept thinking of

Linux's former version control system, whose name I couldn't remember. Yet

the term "torrent file" kept crossing my brain, clearly a configuration file

and not related to the Linux kernel. Finally I remembered, "BitTorrent is that

distributed file download thing, right?" Bram said yes. So I asked the guys

across the table, "What was the name of Linux's former version control system?"

They said, "BitKeeper". Ah ha, no wonder I got them confused. I

thought no more about it, then Bram ended up mentioning BitKeeper several times

during his keynote, usually in terms of how bad it is. He talked about

BitKeeper vs. git (Linux's new version control system), git vs. other things, and

then about BitTorrent vs. Avalanche. Avalanche is a distributed file

download system from Microsoft, which Bram called vaporware in

his blog, stirring up a lot of controversy (including a newspaper article in

Australia).

For those who think BitTorrent is all about illegally downloading

copyrighted songs and movies, Bram points to SXSW, a music

and film festival which will be using BitTorrent to distribute its

performances. "The Problem with Publishing: More customers require more

bandwidth. The BitTorrent Solution: Users cooperate in the distribution."

Other articles point out that a BitTorrent client normally interacts with
30-50 peers, reducing the strain on the original server roughly thirtyfold.

Bram also warned people to download BitTorrent directly from

www.bittorrent.com and not from some

random Google search. Shady operators are selling scams that claim to be

BitTorrent but contain spyware or viruses. The real BitTorrent is free to

download, and is Open Source under a Jabber-like license. The company does

accept voluntary donations, however, if you really want to give them money.

Session talks

The rest of PyCon was session talks, tutorials, lightning talks, Open Space,

sprints, and informal "hallway discussions". The most interesting talks I

saw or wanted to see were:

- Python in Your Pocket: Python for Series 60

- Nokia has released a version of Python for its

S60 mobile phone which includes Bluetooth and mobile Internet. The slides

showed it displaying stats from the GSM towers, weather reports, a stock market

graph, an online dictionary, and a stupid guitar tuner. There are Python

libraries to access the phone's battery usage, to dial a number, take a

picture, manage your inbox and address book and calendar, and display arbitrary

graphics and sound. You can write programs using an emulator and download the

working programs to the phone via Bluetooth. (Note: the URL points to a slide

show. Click anywhere to go to the next page. There appears to be no way to go

backward.)

- The State of Dabo

- Dabo is an application development framework

inspired by Visual Basic. It provides a high-level API for GUI design and

SQL database viewers. The database viewer is something I've wanted for years: a

simple way to make

CRUD grids and

data-entry forms similar to Microsoft Access. Only MySQL, PostgreSQL, and

Firebird are supported so far, but others are on the way. The GUI API is so

elegant that the wxPython developers themselves have started using it rather

than their own API, which is a thin wrapper around the wxWidgets C++

library.

- State-of-the-Art Python IDEs

- A survey of several Python IDEs, based on

research

done by the Utah Python Users' Group.

- Building Pluggable Software with Eggs

- A tutorial on Python's new packaging format.

- Docutils Developers Tutorial: Architecture, Extending, and Embedding

- Docutils is the project name for ReStructured Text

(ReST), a wiki-like markup format. Several talks mentioned using

Docutils, and several Python projects have adopted it for documentation, data

entry, etc. One talk explained how to make slides with it. Another speaker

didn't make slides and had to download and learn Docutils the night before the

talk, but he was able to get his slides done anyway.

- What is Nabu?

- ReST includes a construct called

field lists

that look like email headers; they represent virtual database

records. ReST does not define the database or say what kind of record it is,

only that it's a record. Nabu is the first application I've seen to fully

exploit this potential. Nabu helps you extract relevant records from all

documents into a central database, which can then be queried or used in an

application. You'll have to decide beforehand

which fields a "contact record" or "URL bookmark" should contain, and how

you'll recognize one when you see it. Nabu's author Martin Blais keeps an

editor open for every task he does, to record random notes, TODO plans,

contacts, and URL bookmarks. The random notes are unstructured ReST sections;

the contacts are field lists scattered throughout the document. He also has a

file for each trip he's been on, containing random observations, restaurants to

remember (or forget), etc. Nabu is also suitable for blog entries, book lists,

calendar events, class notes, etc. One constraint is you must put "something

else" between adjacent records so ReST knows where each record ends. Putting

multiple records in a

bullet list

satisfies this constraint.

- Web frameworks

- "Python has more web application frameworks than

keywords," it has been rightly observed. A few people go further and say,

"You're not a real Python programmer until you've designed your own web

framework." (Hint: subclass

BaseHTTPServer.HTTPServer in the

standard library; it's easy!) So it's not surprising that PyCon had three

talks on TurboGears, two on Django, three on Zope (plus several BoFs), and a

couple on new small frameworks. All the discussion last year about framework

integration has made them more interoperable, but it has not cut the number of

frameworks. If anything, they've multiplied as people write experimental

frameworks to test design ideas. Supposedly there's a battle between

TurboGears and Django for overall dominance, but the developers aren't

competing, they just have different interests. Jacob Kaplan-Moss (Django

developer) and I (TurboGears developer) ran the Lightning Talks together, and

we both left the room alive. Some people work on multiple frameworks, hoping

the

holy grail

will eventually emerge. Much of the work focuses on WSGI and Paste, which help tie diverse components

using different frameworks together into a single application. Some work

focuses on AJAX, which is what makes Gmail and Google Maps so responsive and is

slowly spreading to other sites.
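To back up the claim that rolling your own framework is easy, here is roughly what the hint about the standard library's HTTP server amounts to. It is a toy sketch, not any of the frameworks named above: in present-day Python 3 the BaseHTTPServer module lives in http.server, and the names route, dispatch, Handler, and serve are all made up for this example.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

ROUTES = {}    # maps a URL path to the function that renders it


def route(path):
    """Decorator that registers a function as the handler for a path."""
    def register(func):
        ROUTES[path] = func
        return func
    return register


@route("/")
def index():
    return "Hello from a hypothetical micro-framework\n"


def dispatch(path):
    """Return (status, body) for a request path."""
    handler = ROUTES.get(path)
    if handler is None:
        return 404, "Not found\n"
    return 200, handler()


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, body = dispatch(self.path)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8000):
    """Run the toy server until interrupted; call this yourself to try it."""
    HTTPServer(("127.0.0.1", port), Handler).serve_forever()


print(dispatch("/"))
print(dispatch("/missing"))
```

Keeping dispatch() separate from the handler class means the routing can be exercised without opening a socket, which is also roughly the separation that WSGI formalizes.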

Lightning talks

Lightning talks are like movie shorts. If you don't like one, it's over in

five minutes. They are done one after the other in hour-long sessions. Some

people give lightning talks to introduce a brand-new project, others to focus

on a specialized topic, and others to make the audience laugh. This year there

were seventeen speakers for ten slots, so we added a second hour the next day.

But in the same way that adding a new freeway lane encourages people to drive

more, the number of excess speakers grew rather than shrank. We ended up with

thirty speakers for twenty slots, and there would have been more if I hadn't

closed the waiting list. The audience doesn't get bored and keeps coming back,

so next year we'll try adding a third hour and see if we can find the

saturation point. Some of the highlights were:

- Testosterone

- "A manly testing interface for Python." It's a

CURSES-based front end to Unittest. It may work with py.test too since it just

displays whatever the underlying routine prints.

- rst.el

- A ReStructured Text mode for Emacs.

- Python in Argentina

- Facundo Batista talked about Python use in

Argentina and the PyAr users

group. There are a hundred members throughout the country, and around fifteen

that gather for a meeting in Buenos Aires.

- Internet Censorship in China

- Philipp von Weitershausen gave a quick overview

of what it's like to use the Internet in China. There are 120 million users,

with 20 million more added every year. Chinese sites respond much more slowly

than international sites. Some 50,000 officials monitor what sites people

visit. Wikipedia, the BBC, and VOA are blocked completely due to undesirable

political content. Other sites go up and down, and the error keeps changing.

Sometimes it's a timeout, other times an error message. Encrypted connections
time out. How do people feel about not having access to Wikipedia? Only 2% of
Chinese Internet users know it exists, so most neither know what they're
missing nor consider it a big deal.

- How to Replace Yourself with a Small Bot

- Ben Collins-Sussman had too much time on his

hands and wrote an IRC bot that masqueraded as himself. He put it on a channel

and waited to see how long until people noticed. The bot had five or so canned

remarks like "Did you check the FAQ?", which it recited at random whenever

anybody addressed him. Hmm, maybe The Answer Gang

here at the Gazette needs one of those. The experiment didn't succeed too

well because the bot said wildly inappropriate things at the wrong

time.

[ I don't understand what you mean by "didn't

succeed", Mike - seems to me that wildly inappropriate responses to a

question is perfectly normal IRC behavior... so how could anyone tell? --

Ben ]

There were other good talks too but since I was coordinating I couldn't

devote as much attention to them as I would have liked.

Sprints

I attended the TurboGears sprint and worked on Docudo, a wiki-like beast for

software documentation, which groups pages according to software version and

arbitrary category and distinguishes between officially-blessed pages and

user-contributed unofficial pages. We wrote up a spec and started an

implementation based on the

20-Minute Wiki Tutorial.

This version will store pages in Subversion as XHTML documents, with

Subversion properties for the category and status, and use

TinyMCE for editing. TinyMCE

looks like a desktop editor complete with toolbars, but is implemented in

Javascript. We've got parts of all these tasks done in a pre-alpha application

that sort of works sometimes.

Other TurboGears fans worked on speeding up Kid templates, adding unittests,

improving compatibility with WSGI middleware and Paste, using our configuration

system to configure middleware, and replacing CherryPy with RhubarbTart and

SQLObject with SQLAlchemy. Don't get a heart attack about the last two: they

are just experimental now, won't be done till after TurboGears 1.0, and will

require a smooth migration path for existing applications. We had a variety of

skill levels in our sprint, and some with lesser skills mainly watched to learn

some programming techniques.

There were ten people in the TurboGears sprint and fifty sprinters total.

Other sprinters worked on Zope, Django, Docutils, the Python core, and a few

other projects.

The sprint was valuable to me, even though I'm not fully committed to

TurboGears, because I'm starting to write TurboGears applications at work:

it was good to write an application with developers who know more than I do

about it. That way they can say, "Don't do that, that's stupid, that's not the

TG way." I would have liked to work on the RhubarbTart integration but I had to

go with what's more practical for me in the short term. So sprinting is a

two-way activity: it benefits the project, and it also benefits you. And it

plants the seeds for future contributions you might make throughout the

year.

Impressions of Dallas

Dallas was not the transportation wasteland I feared (ahem Oklahoma City,

Raleigh, Charlotte...) but it did take 2-3 hours to get to PyCon without a car,

and that includes taking a U-shaped path around most of Dallas. An airport

shuttle van goes from the sprawling DFW campus to the south parking lot a mile

away. From there another airport shuttle goes to the American Airlines

headquarters, an apartment building (!), and finally the Dallas - Fort Worth

commuter train. That's three miles or thirty minutes just to get out of the

airport. The train goes hourly till 10:30pm, but not on Sundays. It cost

$4.50 for an all-day rail/bus pass. The train pokes along at a leisurely pace,

past flat green fields and worn-down industrial complexes, with a few housing

developments scattered incongruously among the warehouses. Freight trains

carried cylindrical cars labelled "Corn Syrup" and "Corn Sweetener". Good

thing I wasn't near the cars with a hatchet; I feel about corn syrup the way

some people feel about abortion. The train stopped in downtown Dallas at an

open section of track euphemistically called "Union Station". I transferred to

the light rail (blue line) going north. This train was speedy, going 55 mph

underground and 40 mph above, with stops a mile apart. Not the slowpoke things

you find in San Jose and Portland; this train means business. The companies

along the way seem to be mostly chain stores. At Arapaho Station (pronounced like a

rapper singing, "Ah RAP a ho!") in the suburb of Richardson, I transferred to bus

400, which goes twice an hour. A kind soul on the train helped me decide which

bus to catch. The bus travels west half an hour along Belt Line Road, a

six-lane expressway. It crosses other six-lane expressways every twenty

blocks. Dallas has quite the automobile capacity. We're going through a black

neighborhood now. The driver thinks the Marriott is a mile away and I

should have gotten another bus, but the hotel map says it's at Belt Line Road

and the North Dallas Tollway. When we approach the intersection with the

birds, all is explained. The road the hotel is named after goes behind the

hotel and curves, meeting the tollway. So the map was right.

[ A note from my own experience in Dallas, where I

teach classes in the area described above: a shuttle from DFW is ~$20 for

door-to-door service, and takes less than a half an hour. -- Ben ]

Around the hotel is mostly expense-account restaurants for the executive

crowd. We didn't find a grocery store anywhere. So I learned to eat big at

meals because there wouldn't be any food until the next one. There was a mall

nearby and a drugstore, for all your non-food shopping.

The weather was... just like home (Seattle). Drizzle one day, heavy rain

the next, clear the day after. Same sky but ten degrees warmer. Then the

temperature almost doubled to 80 degrees (27C) for three days. In February!

I would have been shocked but I've encountered that phenomenon in

California a few times.

Saturday evening a local Pythoneer took two of us to downtown Dallas.

Downtown has several blocks of old brick buildings converted to loft apartments

and bars and art galleries, and a couple coffeehouses and a thrift shop.

Another feature is the parking lot wavers. I swear, every parking lot had a

person waving at the entrance trying to entice drivers. It's 10pm, you'd think

the lot attendants would have gone home. Especially since there were plenty of

metered spaces on the street for cheaper. There weren't many cars around:

there were almost as many parking lots as cars! It was a bit like the Twilight

Zone: all these venues and not many people. We went to Café Brazil,

which is as Brazilian as the movie. In other words, not at all.

PyCon will be in Dallas next year around February-April, so come watch the

birds. The following year it might be anywhere in the US.

Footnotes

1

Guido van Rossum, Python's founder.

2

The name "Cheese Shop" comes from a Monty Python skit.

The Cheese Shop was formerly called the Python Package Index (PyPI), but was

renamed because PyPI was confused with PyPy, a Python interpreter written in

Python. They are both pronounced "pie-pie", and the attempt last year to get

people to call PyPI "pippy" failed. Some people don't like the term "Cheese

Shop" because it doesn't sell any cheese. But the shop in the skit didn't

either.


Mike is a Contributing Editor at Linux Gazette. He has been a

Linux enthusiast since 1991, a Debian user since 1995, and now Gentoo.

His favorite tool for programming is Python. Non-computer interests include

martial arts, wrestling, ska and oi! and ambient music, and the international

language Esperanto. He's been known to listen to Dvorak, Schubert,

Mendelssohn, and Khachaturian too.

Copyright © 2006, Mike Orr (Sluggo). Released under the

Open Publication license

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 124 of Linux Gazette, March 2006

Migrating a Mail Server to Postfix/Cyrus/OpenLDAP

By René Pfeiffer and pooz

Once upon not so very long ago, a proprietary mail service system decided to stop working by

completely suspending all activities every 15 minutes. We quickly used a workaround to regularly

restart the service. After that, the head of the IT department approached Ivan and me

and asked for a solution. We proposed to replace the mail system with a combination of Postfix, Cyrus

IMAP, and OpenLDAP along with a healthy dose of TLS encryption. This article sheds some light on how

you can tackle a migration like this. I am well aware that there is plenty of information for every

subsystem, but we built a test system and tried a lot of configurations because we didn't see a

single source of information that deals with the connection of all these parts.

Preparations

First I have some words of caution.

Moving thousands of user accounts with their mailboxes from one mail platform to another shouldn't be

done lightly. We used a test server that ran for almost two months and tried to look at most of the

aspects of our new configuration. Here is a rundown of important things that should be done in

advance.

The Idea

You need to have a rough idea of what you want to achieve before you start hacking config files. Our

idea was to replace the mail system running on CommuniGate Pro with a free software equivalent.

Since our infrastructure is spread among multiple servers, we only had to worry about the mail

server itself: how to recreate the configuration, how to move the users' data, and how to reconnect

it with our external mail delivery and web mail system. We have external POP3/IMAP users that access

their mail directly, and the web mail system uses IMAP. The relation of every server and service is

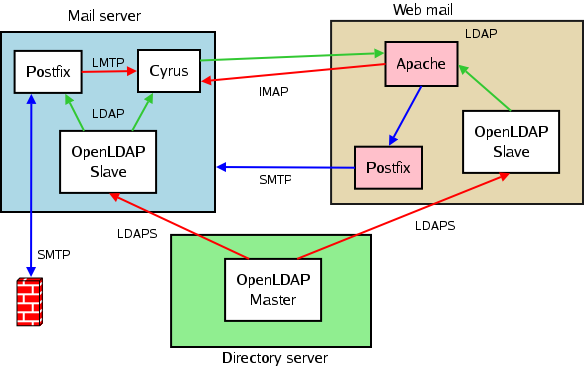

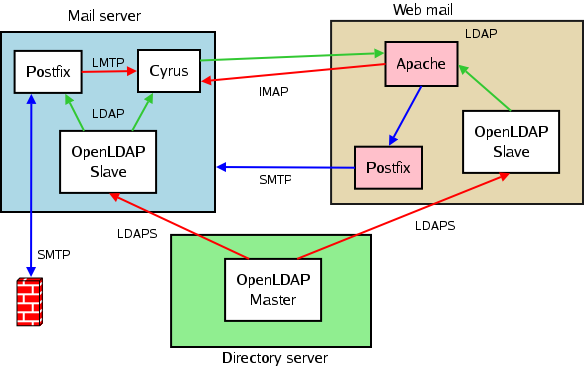

shown in this little picture.

Putting everything together: we wanted a Cyrus server to handle the mailboxes, a Postfix server to deal with

incoming and outgoing email, and an OpenLDAP server to hold as many settings as possible. The LDAP tree

gets a lot of requests (we get 80000+ mail requests per day), so we decided that every server involved

with user email should have a local copy in the shape of two OpenLDAP slave servers. The green lines in the diagram

are read operations. The red lines are write operations. The blue lines denote SMTP transactions. Mail enters

our system at the firewall, and every mail for outside domains is handled by the firewall, too. We will now

take a look at how the services in the white boxes have to be configured in order to work in tune.

Encryption with OpenSSL

All of the software packages involved in the mail system are capable of using encryption via

Transport Layer Security (TLS). We like to use TLS with SMTP, have our OpenLDAP servers do all

synchronisations via LDAPS/LDAP+TLS, and offer IMAPS and POP3S.

TLS can be implemented by using OpenSSL and putting the necessary keys and certificates into the

right place. You need the following files:

- a key for every server that uses TLS

- a certificate for that key, also on every server that uses TLS

- a certificate from the Certification Authority (CA) that signed your key in order to verify

certificates

It doesn't matter if you use an external CA or create one yourself. What does matter is

that you have certificates of CAs you can trust. This is the most crucial factor when deploying

encryption. We use our own CA and create keys and certificates as needed. If you do that, you need

to keep track of your keys. A good way to do this is to set up your own CA and put it into a CVS or

Subversion repository (which should be secured, but you already know that, don't you?). This can be

done in a directory where you keep your keys and sign them:

mkdir myCA

chmod 0700 myCA

cd myCA

mkdir {crl,newcerts,private}

touch index.txt

echo "01" > serial

cp /usr/share/examples/openssl/openssl.cnf .

Use the sample openssl.cnf file and edit the values in the section ca or

CA_default. The paths need to point to the directories we have just created. You also

need to edit the information about your CA in the root_ca_distinguished_name section.

A sample openssl.cnf is attached to this article.

When you have taken care of your CA's configuration you can create its private key and certificate.

openssl req -nodes -config openssl.cnf -days 1825 -x509 -newkey rsa -out ca-cert.pem -outform PEM
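As a rough illustration of how the configuration and the CA creation fit together, here is a minimal sketch that builds a throwaway CA in a scratch directory. The configuration written by the script is a stripped-down stand-in, not the sample file attached to this article: the subject "Example Test CA", the key path private/ca-key.pem, the 30-day lifetime, and the v3_ca section are assumptions chosen for the demonstration.

```shell
#!/bin/sh
# Sketch: create a throwaway CA in a scratch directory.
# All names and values below are illustrative assumptions.
set -eu
cd "$(mktemp -d)"

# Same directory layout as in the article.
mkdir crl newcerts private
chmod 0700 private
touch index.txt
echo "01" > serial

# Minimal configuration; the quoted 'EOF' keeps the shell from
# expanding $dir, which OpenSSL itself interprets.
cat > openssl.cnf <<'EOF'
[ ca ]
default_ca = CA_default

[ CA_default ]
dir           = .
crl_dir       = $dir/crl
new_certs_dir = $dir/newcerts
database      = $dir/index.txt
serial        = $dir/serial
certificate   = $dir/ca-cert.pem
private_key   = $dir/private/ca-key.pem

[ req ]
default_bits       = 2048
default_keyfile    = private/ca-key.pem
distinguished_name = root_ca_distinguished_name
x509_extensions    = v3_ca
prompt             = no

[ root_ca_distinguished_name ]
commonName = Example Test CA

[ v3_ca ]
basicConstraints = critical,CA:true
EOF

# Create the CA key (written to default_keyfile) and a self-signed
# certificate; progress output on stderr is discarded.
openssl req -nodes -config openssl.cnf -days 30 -x509 \
    -newkey rsa:2048 -out ca-cert.pem -outform PEM 2>/dev/null

# Show whose certificate we just created.
ca_subject=$(openssl x509 -in ca-cert.pem -noout -subject)
echo "$ca_subject"
```

The last command prints the subject of the new CA certificate, which should contain the commonName set in the configuration.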

After that, all you have to do is to create a key and a certificate request for every server you wish

to involve in encrypted transmissions.

openssl genrsa -rand /dev/random -out yourhost.example.net.key

openssl req -new -nodes -key yourhost.example.net.key -out yourhost.example.net.csr

I use /dev/random as the entropy source. If your system lacks sufficient I/O (e.g.,

keyboard strokes or mouse movements) or has no hardware random generator, you might consider using

/dev/urandom instead. Signing this request with your own CA in this directory is done by using:

openssl ca -config openssl.cnf -in yourhost.example.net.csr -out yourhost.example.net.cert
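To see the whole request-and-sign cycle end to end without touching a real CA, the following sketch runs it in a scratch directory. It is an illustration under assumptions: the CA is a throwaway with a made-up subject, the script relies on the system's default OpenSSL configuration being installed, and it signs with "openssl x509 -req" rather than "openssl ca" so that no CA database is needed. The final "openssl verify" call checks that the host certificate chains back to the CA certificate.

```shell
#!/bin/sh
# Sketch: throwaway CA plus one signed host certificate.
# Subjects, file names, and lifetimes are illustrative assumptions.
set -eu
cd "$(mktemp -d)"

# Self-signed CA key and certificate.
openssl req -x509 -nodes -newkey rsa:2048 -days 30 \
    -subj "/CN=Example Test CA" \
    -keyout ca-key.pem -out ca-cert.pem 2>/dev/null

# Host key and certificate request, following the article's naming.
openssl genrsa -out yourhost.example.net.key 2048 2>/dev/null
openssl req -new -key yourhost.example.net.key \
    -subj "/CN=yourhost.example.net" \
    -out yourhost.example.net.csr

# Sign the request. "x509 -req" stands in for "openssl ca" here.
openssl x509 -req -in yourhost.example.net.csr \
    -CA ca-cert.pem -CAkey ca-key.pem -CAcreateserial \
    -days 30 -out yourhost.example.net.cert 2>/dev/null

# Check that the host certificate verifies against the CA certificate.
verify_result=$(openssl verify -CAfile ca-cert.pem yourhost.example.net.cert)
echo "$verify_result"
```

If verification succeeds, OpenSSL reports the certificate file name followed by OK. The same "openssl verify" call can be pointed at a certificate produced by "openssl ca" and your real ca-cert.pem.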

In order to use encryption and to allow certificate verification, you will have to copy your CA's

certificate ca-cert.pem, your host's key yourhost.example.net.key, and the key