...making Linux just a little more fun!

February 2008 (#147):

- Mailbag

- Talkback

- 2-Cent Tips

- NewsBytes, by Howard Dyckoff and Kat Tanaka Okopnik

- A dummies introduction to GNU Screen, by Kumar Appaiah

- Away Mission: David vs. Goliath Technical Conferences, by Howard Dyckoff

- Booting Linux in Less Than 40 Seconds, by Alessandro Franci

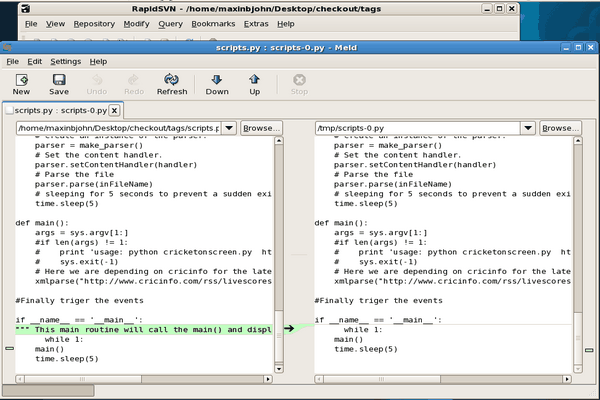

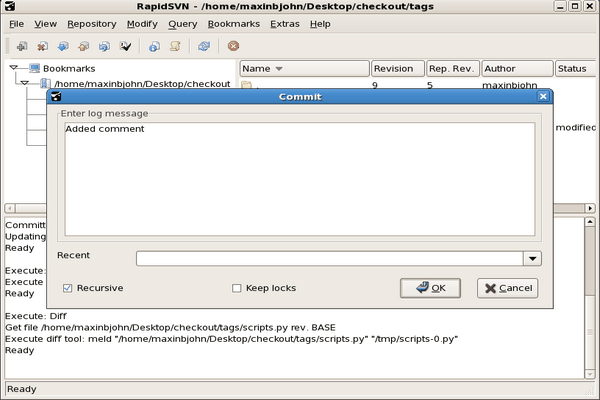

- App of the Month, by Maxin B. John

- Tips on Using Unicode with C/C++, by René Pfeiffer

- Hollywood, Linux, and CinePaint at FOSDEM 2008, by Robin Rowe

- Ecol, by Javier Malonda

- The Linux Launderette

Mailbag

This month's answers created by:

[ Amit Kumar Saha, Ben Okopnik, Justin Piszcz, Kapil Hari Paranjape, Karl-Heinz Herrmann, René Pfeiffer, MNZ, Neil Youngman, Rick Moen, Suramya Tomar, Mike Orr (Sluggo), Thomas Adam ]

...and you, our readers!

Gazette Matters

Arabic translation

Ben Okopnik [ben at linuxgazette.net]

Sun, 30 Dec 2007 10:50:38 -0500

Hi, all -

We've got somebody that just volunteered to translate bits and pieces of

LG into Arabic. Since he's working by himself, and is not a native

speaker (and since I can't read Arabic myself), does anyone here have

the ability to vet the stuff? It's at 'http://arlinux.110mb.com/lgazet/'.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (3 messages/2.22kB) ]

Kudos

Ben Okopnik [ben at linuxgazette.net]

Mon, 21 Jan 2008 11:59:28 -0500

[[[ I took the liberty of retitling this one. -- Kat ]]]

----- Forwarded message from rahul d <[email protected]> -----

Date: Mon, 21 Jan 2008 00:15:31 -0800 (PST)

From: rahul d <[email protected]>

Subject: None

To: [email protected]

hi,

i'm a python noob. Read one of ur articles. just

mailed u ppl to tell you guys tht u ppl r doin a nice

job...

regards

Rahul

----- End forwarded message -----

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

Our Mailbag

32 bit programs on Debian AMD64 - Pointers needed

Neil Youngman [ny at youngman.org.uk]

Sat, 8 Dec 2007 14:29:39 +0000

I've had a badly configured Debian on a Core2 Duo system for a while and I am

starting again from scratch. The area where I am having trouble finding

guidance is support for 32 bit programs and plugins on the AMD64

architecture.

I have found various recipes for getting specific programs working. Many of

them appear to involve maintaining a separate i386 architecture installation

in addition to the main AMD64 installation.

I am not keen on on maintaining 2 installations, nor am I keen on piecemeal

solutions for each 32 bit program I may need to run. Can anybody point me to

a good guide on the issues involved and/or a general solution to the problem

of running 32 bit code on AMD64 debian?

Neil Youngman

[ Thread continues here (2 messages/2.50kB) ]

large file server/backup system: technical opinions?

Karl-Heinz Herrmann [kh1 at khherrmann.de]

Sun, 20 Jan 2008 18:09:08 +0100

Hi Tags's,

at work we are suffering from the ever increasing amount of data.

This is a Medical Physics Group working with MRI (magnetic resonance

imaging) data. In worst case scenarios we can produce something like

20GB of data in an hour scantime. Luckily we are not scanning all the

time .-) Data access safety is mostly taken care of by firewalls and

access control outside our responsibility. But storing and backups

are our responsibility.

Currently we have about 4-6 TB distributed over two

"fileservers" (hardware raid5 systems) and two systems are making daily

backups of the most essential part of these data (home, original

measurement data). The backup machines are taking more than a full

night by now and can't handle anything while backuppc is still sorting

out the new data. The machine the backup is from is fine by morning.

We will have a total of three number crunching machines over the year

and at least these should have speedy access to these data. Approx. 20

hosts are accessing the data as well.

Now we got 10k EU (~15k $US) for new backup/file storage and are

thinking about our options:

* Raid system with iSCSI connected to the two (optimally all three)

number crunchers which are exporting the data to the other hosts via

NFS. (eSATA any good?)

* an actual machine (2-4 cores, 2-4GB RAM) with hardware raid (~24*1TB)

serving the files AND doing the backup (e.g. one raid onto another

raid on these disks)

* A storage solution using fibre-channel to the two number crunchers.

But who does the backup then? The oldest number cruncher might be

able to handle this nightly along with some computing all day. But it hasn't

got the disk space right now.

The surrounding systems are all ubuntu desktops, the number crunchers

will run ubuntu 64bit and the data sharing would be done by NFS --

mostly because I do not know of a better/faster production solution.

The occasional Win-access can be provided via samba-over-nfs on one of

the machines (like it does now).

Now I've no experience with iSCSI or fibre channel under Linux. Will

these work without too much of trouble setting things up? Any specific

controllers to get/not to get? Would the simultaneous iSCSI access from

two machines to the same raid actually work?

I also assume all of the boxes have 2x 1Gbit ethernet so we might be

able to set up load balancing -- but the IP and load balancing

would also have been tought to our switches I guess -- And these are

"outside our control", but we can talk to them. Is a new multi core

system (8-16 cores, plenty RAM) able to saturate the 2xGbit? Will

something else max out (hypertransport, ... )?

Any ideas -- especially ones I did not yet think of -- or experiences

with any of the exotic hardware is very much welcome....

Karl-Heinz

[ Thread continues here (7 messages/33.12kB) ]

[svlug] recommended percentage swap on 400G drive

Rick Moen [rick at linuxmafia.com]

Fri, 28 Dec 2007 16:31:39 -0800

----- Forwarded message from Rick Moen <[email protected]> -----

Date: Thu, 27 Dec 2007 21:16:21 -0800

To: [email protected]

From: Rick Moen <[email protected]>

Subject: Re: [svlug] recommended percentage swap on 400G drive

Quoting Darlene Wallach (

[email protected]):

> Is there a percentage of the disk size I should calculate for

> swap?

The amount of desirable swap on your system, and its placement, really

isn't directly related to disk size (except in the "you have to have X

amount of space in order for allocating Y from it to be reasonable"

sense): It's more related to total system physical RAM than anything

else, and secondarily to your usage patterns with that RAM (number of

active apps, RAM footprint of each of those).

The rule of thumb on all *ixes that you'll see quoted ad nauseam is that

total swap space should generally be somewhere from 1.5x to 2 or 3x

total physical RAM.[1] If your system has multiple physical hard

drives, all of which are roughly similar in overall speediness, then

ideally you want to put some swap on each physical drive -- max no. being

32 ;-> -- so that the (fairly intelligent) swapper process can split

the necessary seeking[2] activity between them, for best performance

through parallelism. By contrast, if any of the drives is markedly

slower[3], it's still worth putting some swap on it, but you'd want to

specify a lower swap priority to the swapper process. (See "man 2

fstab" or "man 8 swapon" for details.)

Ideally, you would also want to physically place the swap partitions

between other partitions in a manner calculated to (you hope) reduce

average seek time by keeping the heads in the general vicinity more

often than not.

As if all this detail wasn't enough to contend with, it turns out that

Linux swap files (as opposed to partitions) are a contender again.

They were common in very early Linux days, but fell out of favour when

it emerged that swap partitions yielded much better performance.

However, it turns out that, with the 2.6.x kernel series, swap files

once again have competitive performance, and might be worth using. See:

http://lkml.org/lkml/2006/5/29/3

[1] This is an OK rough heuristic, but obviously doesn't fit all usage

models. In general terms, you want enough swap so that you're very

unlikely to get tasks killed by the out-of-memory killer for lack of

virtual memory, even when your system is heavily loaded. Too much swap

really only wastes disk space, which is relatively cheap and plentiful,

so most people are wise to err slightly on the side of overallocation.

Theoretically, if you had huge gobs of RAM, e.g., 64GB RAM, in relation

to your usage, some would argue that you should have no swap (and

certainly not 128GB of it!), since you're basically never going to need

to swap out files or processes at all. But actually, memory pages managed

in virtual memory don't always back files at all, as Martin Pool points

out in the page you cited

(http://sourcefrog.net/weblog/software/linux-kernel/swap.html).

[ ... ]

[ Thread continues here (1 message/4.15kB) ]

SugarCRM abids by GPLv3 but still forces a badge/notice to be displayed?

Suramya Tomar [security at suramya.com]

Sun, 02 Dec 2007 23:59:16 +0530

Hey Everyone,

While surfing the web I found this following site

(http://robertogaloppini.net/2007/12/02/open-source-licensing-sugarcrms-original-way-to-abide-the-gpl/)

by Roberto Galoppini where he talks about how the SugarCRM has managed

to keep almost the same licensing requirements as before even after they

started using the GPLv3 license.

Now I am not an expert by any stretch of imagination but from what I

understood by reading this page and what I remember from the

discussions/postings on TAG it looks like Roberto is making sense... Is

that so or am I reading it incorrectly or missing something?

If thats the case then how does the GPLv3 prevent Badgeware programs

from claiming to be open source if they use the attribution clause to

force users to display their badges?

- Suramya

--

Name : Suramya Tomar

Homepage URL: http://www.suramya.com

Disclaimer:

Any errors in spelling, tact, or fact are transmission errors.

Problems with UTF-8 over SMTP

Kat Tanaka Okopnik [kat at linuxgazette.net]

Tue, 22 Jan 2008 12:13:11 -0800

[[[ The originating thread for this discussion is

http://linuxgazette.net/147/misc/lg/transliterating_arabic.html -- Kat

]]]

On Tue, Jan 22, 2008 at 02:39:21PM -0500, Benjamin A. Okopnik wrote:

> Latin character set (ISO-8859-1 and such) to Russian, yes.

>

> Eh... I'll send this example, and hope the 8-bit stuff makes it through

> the mail.

> ```

> ben@Tyr:~$ tsl2utf8 -h

> Mappings:

>

> A|<90> B|<91> V|<92> G|<93> D|<94> E|<95> J|<96> Z|<97>

> I|<98> Y|<99> K|<9a> L|<9b> M|<9c> N|<9d> O|<9e> P|<9f>

> R|<a0> S|<a1> T|<a2> U|<a3> F|<a4> H|<a5> C|<a6> X|<a7>

> 1|<a8> 2|<a9> 3|<aa> 4|<ab> 5|<ac> 6|<ad> 7|<ae> 8|<af>

> a|<b0> b|<b1> v|<b2> g|<b3> d|<b4> e|<b5> j|<b6> z|<b7>

> i|<b8> y|<b9> k|<ba> l|<bb> m|<bc> n|<bd> o|<be> p|<bf>

> r|<80> s|<81> t|<82> u|<83> f|<84> h|<85> c|<86> x|<87>

> !|<88> @|<89> #|<8a> $|<8b> %|<8c> ^|<8d> &|<8e> *|<8f>

> +|<91>

>

> ben@Tyr:~$ tsl2utf8

> samovar

> <81><b0><bc><be><b2><b0><80>

> babu!ka

> <b1><b0><b1><83><88><ba><b0>

> 7jno-^fiopskiy grax uv+l m$!% za hobot na s#ezd *@eric.

> <ae><b6><bd><be>-<8d><84><b8><be><bf><81><ba><b8><b9> [...]

> '''

Alas, as you may note from the above, it came through as utter

mojibake, even though my system is capable of reading (some) Russian.

http://people.debian.org/~kubota/mojibake/

http://en.wikipedia.org/wiki/Mojibake

Hmm. Wikipedia sugests that I call it krakozyabry (крокозя́бры). ;)

This looked like a useful gizmo: http://2cyr.com/decode/?lang=en

but it failed to produce anything ungarbled this time.

--

Kat Tanaka Okopnik

Linux Gazette Mailbag Editor

[email protected]

[ Thread continues here (26 messages/65.29kB) ]

Subnotebooks

Mike Orr [sluggoster at gmail.com]

Sun, 23 Dec 2007 21:37:37 -0800

Anybody tried the ASUS eee PC (http://en.wikipedia.org/wiki/Eee_pc) ?

I'm thinking about getting the 8GB model which costs $500. It's a 2

lb subnotebook with 1 GB RAM, 8 GB flash "memory", and Xandros (based

on Debian/Corel), and compatible with the Debian repository. I

haven't seen one and they seem hard to find even mail order, though

some Best Buys have the 4 GB model. My main concerns are the small

keyboard, 800x600 screen, and one-button touchpad. But as a second

computer for running Python, Firefox, Kopete, gvim, and maybe the Gimp

when traveling, I think it might do OK.

Any opinions on the other Linux (sub)notebooks which have begun

appearling? I thought about the Zonbu but it has a nonstandard

version of Gentoo tied to their subscription plan.

--

Mike Orr <[email protected]>

[ Thread continues here (4 messages/5.13kB) ]

rsync options

Martin J Hooper [martinjh at blueyonder.co.uk]

Wed, 05 Dec 2007 08:29:31 +0000

Just a quick question guys...

Backed up my Windows Document directory using rsync in Ubuntu

with the following command line:

rsync -Havcx --progress --stats /home/martin/win/My\ Documents/*

/home/martin/back/mydocs/

(Mounted ntfs drive to mounted smbfs share)

If I run that again a few weeks or so later will it copy all

31000+ files again or will it just copy new and changed files?

[ Thread continues here (17 messages/14.27kB) ]

Transliterating Arabic

Ben Okopnik [ben at linuxgazette.net]

Mon, 21 Jan 2008 15:26:13 -0500

[[[ Discussion of UTF-8 problems in this thread have been split off to

http://linuxgazette.net/147/misc/lg/problems_with_utf_8_over_smtp.html

-- Kat ]]]

On Fri, Jan 18, 2008 at 08:43:12PM +0200, MNZ wrote:

> On Dec 30, 2007 5:50 PM, Ben Okopnik <[email protected]> wrote:

> > Hi, all -

> >

> > We've got somebody that just volunteered to translate bits and pieces of

> > LG into Arabic. Since he's working by himself, and is not a native

> > speaker (and since I can't read Arabic myself), does anyone here have

> > the ability to vet the stuff? It's at 'http://arlinux.110mb.com/lgazet/'.

>

> Hi,

> I'm a native Arabic speaker. I can go through the translated text and

> check it but I'm terrible at actually typing Arabic and I'm not that

> good at it anyway.

A week or two ago, I hacked up a cute little Latin-Russian (UTF8)

converter (faking a few bits along the way, since the Russian alphabet

is longer than the English one), so I thought "heck, I'll just adjust it

so it can do Arabic - that'll give MNZ an easy way to do it." [laugh] I

knew that it was written right-to-left - I could handle that bit - but

having looked at the character set, as well as the whole

initial/medial/final/isolated thing, I've concluded that I'd be crazy to

even try.

> I'll help with the translation as much as I can. I'll

> start in a few days though because I have some exams right now.

That's great - just contact the project coordinator, and let me know if

you guys need any help. Other than converters, of course. ;)

> PS: Is anyone doing an Esperanto translation? just wondering.....

LG's former editor, Mike Orr, is a one-man walking advert for the

language - although he's not translating LG into it, AFAIK. You could

always poke him about spreading the idea among his friends.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (5 messages/14.11kB) ]

Building a ARM Linux kernel

Amit Kumar Saha [amitsaha.in at gmail.com]

Mon, 7 Jan 2008 20:54:02 +0530

Hi all,

I want to cross-compile a ARM Linux kernel (2.6.21.1) using gcc-4.1 on

Ubuntu 7.04(i686) (2.6.20.15-generic). This will require a

'arm-linux-gcc' cross-compiler. Hence, to build the 'gcc-4.1' I

started to build gcc for 'arm' target :

1. ./configure --target=arm

2. make [the dump is attached]

The build process stops giving:

* Configuration arm-unknown-none not supported

How do I proceed beyond this?

Regards & Thanks,

Amit

--

Amit Kumar Saha

Writer, Programmer, Researcher

http://amitsaha.in.googlepages.com

http://amitksaha.blogspot.com

[ Thread continues here (7 messages/6.98kB) ]

Version control for /etc

Rick Moen [rick at linuxmafia.com]

Tue, 25 Dec 2007 03:24:57 -0800

[[[ This is a followup to "Version control for /etc" in

http://linuxgazette.net/144/lg_mail.html -- Kat ]]]

A promising solution to the exact problem discussed earlier -- put together

by my friend Joey Hess. See: http://kitenet.net/~joey/code/etckeeper/

One key ingredient is David H?rdeman's "metastore"

(http://david.hardeman.nu/software.php), used to capture metadata that

git would otherwise ignore.

----- Forwarded message from Jason White <[email protected]> -----

Date: Tue, 25 Dec 2007 19:07:59 +1100

From: Jason White <[email protected]>

To: luv-main <[email protected]>

Subject: etckeeper

For those who are running Debian or debian-derived distributions, there is a

relatively new package, etckeeper, which I have found rather useful: it

maintains a revision history of /etc in a Git repository, including file

permissions and other metadata not normally tracked by Git.

It is also invoked by Apt, using standard Apt mechanisms, to commit changes

introduced into /etc when packages are installed or removed.

I don't know whether there exist any similar tools for non-Debian

distributions.

----- End forwarded message -----

[ Thread continues here (4 messages/8.02kB) ]

The joys of cheap hardware

Neil Youngman [Neil.Youngman at youngman.org.uk]

Sun, 9 Dec 2007 15:07:50 +0000

A little while back I bought a cheap HP desktop and today I decided to put a

2nd hard disk in. This is when I found that, although the spec sheet tells

you you have a spare 3.5 in hard disk bay, it doesn't tell you that there are

only 2 SATA connectors on the board and they are both in use! The PCB has

spaces for 4 SATA connectors, but only 2 have been connected up, although

bizarrely they have put in an IDE connector and a floppy connector.

Fortunately, I have a spare SATA controller, so I can still get the disk in.

Unfortunately, when I boot Debian the disk order gets swapped, so GRUB sees

the original disk as disk 1, but Debian sees it as disk 2. The upshot is that

it refuses to boot, as it can not mount the root partition. Interestingly

Knoppix still sees them in the expected order.

Using partition labels in /etc/fstab isn't a solution because it can't

actually find /etc/fstab.

I have a temporary workaround, as I have swapped the DVD SATA connection to

the second hard disk, so both are connected from the motherboard. This has

the advantage that they are mounted in a predictable order, but the

disadvantage is that I no longer have the option of booting from CDROM,

without swapping the cables back.

Does anyone know how the disk order is determined and is there any way to

force Debian to put the disks connected from the Motherboard ahead of those

connected from the additional SATA controller?

Neil Youngman

[ Thread continues here (8 messages/10.01kB) ]

No Internet from network client

Peter [petercmx at gmail.com]

Wed, 9 Jan 2008 17:02:05 +0700

I have been following "Serving Your Home Network on a Silver Platter

with Ubuntu" which is an August article. Just what I needed  One problem is that I cannot access the Internet from a client. Not

sure why and do not know where to look. Any ideas please?

There are two NIC's, eth0 which connects only to the router and eth1

which is the local network connected to a hub.

This is the route from the server and it appears to work - I can ping

and download

One problem is that I cannot access the Internet from a client. Not

sure why and do not know where to look. Any ideas please?

There are two NIC's, eth0 which connects only to the router and eth1

which is the local network connected to a hub.

This is the route from the server and it appears to work - I can ping

and download

> routeKernel IP routing tableDestination Gateway Genmask

> Flags Metric Ref Use Iface10.0.0.0 *

> 255.255.255.0 U 0 0 0 eth1192.168.1.0 *

> 255.255.255.0 U 0 0 0 eth0default

> 192.168.1.1 0.0.0.0 UG 100 0 0 eth0

This is the route from a client. I can access the server by putty but I

cannot reach the Internet.

Kernel IP routeing table

Destination Gateway Genmask Flags Metric Ref Use Iface

10.0.0.0 * 255.255.255.0 U 0 0 0 eth0

link-local * 255.255.0.0 U 1000 0 0 eth0

This is the hosts file on the server

$ cat /etc/hosts127.0.0.1 localhost127.0.1.1 spider

# The following lines are desirable for IPv6 capable hosts::1

ip6-localhost ip6-loopbackfe00::0 ip6-localnetff00::0

ip6-mcastprefixff02::1 ip6-allnodesff02::2 ip6-allroutersff02::3

ip6-allhosts

This is the hosts file on the client (at present I need to switch the cable

to get the Internet which is why there are two entries for spider)

127.0.0.1 localhost

127.0.1.1 client-1

10.0.0.88 spider

192.168.1.70 spider

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

ff02::3 ip6-allhosts

And this is a trace from the client

tracert google.com

google.com: Name or service not known

Cannot handle "host" cmdline arg `google.com' on position 1 (argc 1)

So I know not .... where should I look?

Peter

[ Thread continues here (14 messages/20.34kB) ]

Installing on ARCHOS

Douglas Wiley [drwly at yahoo.com]

Wed, 5 Dec 2007 14:28:25 -0800 (PST)

I have downloaded a program for the ARCHOS. It has an IPK extension that ARCHOS does not recognize. I am new to Linux. Can you help me fins out how to install this program?

Thanx

-drw-

[ Thread continues here (6 messages/5.24kB) ]

SVN commit_email.pl script support for multiple repositories

Smile Maker [britto_can at yahoo.com]

Wed, 9 Jan 2008 22:45:52 -0800 (PST)

Folks,

I have got a subversion repository running on /svn

Under that there are different directories /svn/a/aa ,/svn/b/bb like that.

I would like to send a mail to a group of ppl when the checkin happens in only /svn/a/aa this directory and sub dirs

I used post-commit script hook supplied with svn.

in that I added a line like

$REPOS/hooks/commit-email.pl "$REPOS" "$REV" -m "*aa*" [email protected] --diff "n" --from "[email protected]"

this is not working.

-m makes the support to multiple project and it accepts regex as an argument.

whatever i have provided any thing wrong.........

This is not following link also doesnt help

http://svn.haxx.se/users/archive-2007-05/0402.shtml

Thanks in Advance

---

Britto

[ Thread continues here (2 messages/3.01kB) ]

Writeup about using the Kodak V1253 (video) camera with Linux

Peter Knaggs [peter.knaggs at gmail.com]

Sun, 20 Jan 2008 22:24:31 -0800

I put together a bit of a writeup about using the Kodak V1253 (video)

camera with Linux:

http://www.penlug.org/twiki/bin/view/Main/HardwareInfoKodakV1253

It's one of the inexpensive cameras that does a fair job of

capturing 720p video (1280x720), and it works over USB

with gphoto2 / gtkam in linux.

Cheers,

Peter.

Linux driver for kingston data silo ds100-S1mm

[agarwal_naveen at ongc.co.in]

Thu, 6 Dec 2007 02:49:34 -0800

Sir

I am looking for linux driver to install kingston data silo ds100-S1mm

tape drive. Can you pl. help me

Naveen

[ Thread continues here (2 messages/1.33kB) ]

RAID Simulators for Linux

Amit Kumar Saha [amitsaha.in at gmail.com]

Tue, 1 Jan 2008 21:25:22 +0530

Hello!

Does any one here have experience using a RAID Simulator or know of

any? Basically, what I am looking for is 'emulating' RAID on a single

computer, single hard disk.

Looking forward to some insights

Regards,

Amit

--

Amit Kumar Saha

Writer, Programmer, Researcher

http://amitsaha.in.googlepages.com

http://amitksaha.blogspot.com

[ Thread continues here (12 messages/19.60kB) ]

Python question

Ben Okopnik [ben at linuxgazette.net]

Fri, 28 Dec 2007 10:01:07 -0500

Hey, Pythoneers -

I've just installed the "unicode" package after finding out about it

from Ren<a9>; it sounds like a very cool gadget, something I can really

use... but it appears to be broken:

ben@Tyr:~$ unicode

Traceback (most recent call last):

File "/usr/bin/unicode", line 159, in ?

out( "Making directory %s\n" % (HomeDir) )

File "/usr/bin/unicode", line 33, in out

sys.stdout.write(i.encode(options.iocharset, 'replace'))

NameError: global name 'options' is not defined

Line 570 says

(options, args) = parser.parse_args()

but even if I give it an arg like "-h", it still gives me the same

error. Any suggestions for fixing it before I turn it in to the Ubuntu

maintainers?

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (3 messages/4.38kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 147 of Linux Gazette, February 2008

Talkback

Talkback:145/john1.html

[ In reference to "Linux on an ARM based Single Board Computer" in LG#145 ]

Bart Massey [bart.massey at gmail.com]

Mon, 3 Dec 2007 12:45:56 -0800

The US Distributor of this product appears to be Hightech Global (

http://www.hitechglobal.com/Boards/Armadillo9.htm), who is selling it for

$645. In my humble opinion, that price is substantially too high. For

comparison, the apparently much more powerful, physically much smaller

Gumstix Verdex XL6P (

http://gumstix.com/store/catalog/product_info.php?cPath=27&products_id=178)

costs $169, plus perhaps another $100 for various expansion boards--you'd

want at least the $24 VX Breakout board, which has the host USB port on it.

(I'm not affiliated in any way with Gumstix, nor have I ever purchased any

of their products. I'm thinking seriously about it, though.)

Just thought folks should know.

[ Thread continues here (3 messages/2.40kB) ]

Talkback:145/lg_mail.html

[ In reference to "Mailbag" in LG#145 ]

Ben Okopnik [ben at linuxgazette.net]

Wed, 5 Dec 2007 09:36:10 -0500

----- Forwarded message from Sitaram Chamarty <[email protected]> -----

Date: Wed, 5 Dec 2007 14:02:28 +0530

From: Sitaram Chamarty <[email protected]>

To: [email protected]

Subject: Re: compressed issues of LG

My apologies for sending an email to a thread that I only saw on the web

site, and not being a member of the mailing list and all...

But in response to the above subject, I thought someone should mention

that LZMA is the compression algo behind 7ZIP. Never mind what it says

about 7-ZIP not being good for backups, because 7ZIP is the archive

format, not the compression algo.

So, the usual "tar ... | lzma >some.file" will always work.

Also see http://lwn.net/Articles/260459/ if possible.

Regards,

Sita

----- End forwarded message -----

Talkback:issue84/okopnik.html

[ In reference to "/okopnik.html" in LG#issue84 ]

clarjon1 [clarjon1 at gmail.com]

Thu, 24 Jan 2008 12:50:14 -0500

Hey, all.

I've recently downloaded a bunch of HTML files, and wanted to name

them by their title. I remembered the scripts in the Perl One-Liner

of the Month: The Adventure of the Misnamed Files (LG 84), and thought

that they would be useful, as they seemed to be what I needed.

I first tried the one-liner, and instead of the zero, I got 258

(which, btw, is the number of files in the directory I was in) So I

copied the "expanded" version of the script, and saved it as

../script1.pl. Ran it, it came up with 0 as output (which, according

to the story, is a Good Thing), so I then tried the second one liner.

Laptop thought for a second, then gave me a command line again. So, I

run ls, and lo and behold! No changes. Tried it with the expanded

version, saved as ../script2.pl. Same result.

I was wondering if you might know of an updated version that I could

try to use? I'm not well enough versed in Perl to figure it out all

on my own, and I'm not usre what (or where) I should be looking...

Also, for reference, the files are all html, and about half named with

an html extension, and the other half have no extension.

Thanks in advance. I'd hate to have to do the task manually.

--

clarjon1

[ Thread continues here (3 messages/9.64kB) ]

Talkback:126/pfeiffer.html

[ In reference to "Digging More Secure Tunnels with IPsec" in LG#126 ]

Tim Chappell [tchappe1 at timchappell.plus.com]

Wed, 9 Jan 2008 20:40:07 -0000

Hi,

Having read your ipsec articles (125/126) I've been attempting to get a

similar system going.

I wonder if you can help? I'm trying to setup an ipsec VPN (tunnel mode)

between two networks which are both behind DSL routers. I've managed to get

it going successfully without the modems, but once they're in place it

doesn't appear to work. Is such a thing possible? The modems both have ports

500/4500 open to allow NAT-T through (and AH/ESP passthrough).

[ ... ]

[ Thread continues here (2 messages/12.54kB) ]

Talkback:145/lg_tips.html

[ In reference to "2-Cent Tips" in LG#145 ]

Thomas Adam [thomas at edulinux.homeunix.org]

Sun, 9 Dec 2007 12:34:55 +0000

On Thu, Nov 29, 2007 at 05:02:14PM +0700, Mulyadi Santosa wrote:

> Suppose you have recorded your console session with "script" command.

> And then you want to display it via simple "less" command. But wait, you

> see: (note: by default ls use coloring scheme via command aliasing, so

> if you don't have it, simply use ls --color)

>

> ESC]0;mulyadi@rumah:/tmp^GESC[?1034h[mulyadi@rumah tmp]$ ls

> ESC[00mESC[00;34mgconfd-doelESC[00m ESC[00;34mvir

> tual-mulyadi.Bx4b1XESC

>

> How do you make these "strange" characters to appear as color? Use less

> -r <your script file> and you'll see colors as they originally appear.

Which is only half the intention. You're still going to have to run the

result via col(1) first:

col -bx < ./some_file > ./afile && mv ./afile ./some_file

--

Thomas Adam

--

"He wants you back, he screams into the night air, like a fireman going

through a window that has no fire." -- Mike Myers, "This Poem Sucks".

Talkback:146/lg_cover.html

[ In reference to "Holiday Greetings to Everyone!" in LG#146 ]

Amit Kumar Saha [amitsaha.in at gmail.com]

Wed, 2 Jan 2008 09:07:12 +0530

Hello all!

New Year Greetings to all the members of the TAG and LG Staff.

Good Bless!

--Amit

Talkback:130/tag.html

[ In reference to "The Monthly Troubleshooter: Installing a Printer" in LG#130 ]

Ian Chapman [ichapman at videotron.ca]

Sat, 15 Dec 2007 11:43:11 -0500

Ben,

I had to edit a file to add myself to the folks including cups who

could use your ben@Fenrir:~# head -60 /usr/share/dict/words > /dev/lp0

to get the printer going? Where and what was that file. I've looked

back at article 130 and am not able to see it.

Regards Plain text Ian.

[ Thread continues here (2 messages/1.84kB) ]

Talkback:124/pfeiffer.html

[ In reference to "Migrating a Mail Server to Postfix/Cyrus/OpenLDAP" in LG#124 ]

René Pfeiffer [lynx at luchs.at]

Mon, 10 Dec 2007 22:30:00 +0100

Hello, Peter!

Glad to be of help, but please keep in mind posting replies also to the

TAG list. Others might find helpful comments, which is never a bad

thing.

On Dec 10, 2007 at 1428 -0600, Peter Clark appeared and said:

> [...]

> Some more questions for you, if you do not mind.

>

> # Indices to maintain

>

> You have mailLocalAddress, mailRoutingAddress and memberUid being

> maintained (in your example slapd.conf). What is calling upon them in

> your example?

These attributes were supposed to be used in a future project, that's

the main reason. I took the slapd.conf from a live server and anonymised

the critical part of the configuration.

> You also have mail listed, isn't mail and mailLocalAddress

> the same thing?

No, we only used the mail attribute in our setup, we ignored

mailLocalAddress.

> Also, mailQuotaSize, mailQuotaCount,mailSizeMax; I

> understand mailQuotaSize but did you restrict a user by the # of

> messages in their account (mailQuotaCount) and how did you use

> mailSizeMax?

These attributes are used by the quota management system, which I didn't

describe in the article. It is basically a web-based GUI where

administrators can change these values. Some scripts read the quota

values from the LDAP directory and write it to the Cyrus server by using

the Cyrus Perl API. mailSizeMax isn't used in the setup, but again it

was supposed to be.

> Doesn't imap.conf and main.cf hold those values?

AFAIK the imap.conf only holds IMAP-relevant things. Cyrus is only

interested in the authentication, and this is done by saslauthd.

main.cf only holds references to the configuration files that contain

the LDAP lookups.

> Does the order in which the indices are listed matter?

>

> ie could:

> index accountStatus eq

> index objectClass,uidNumber,gidNumber eq

> index cn,sn,uid,displayName,mail eq,pres,sub

>

> be written:

> index cn,sn,uid,displayName,mail eq,pres,sub

> index accountStatus eq

> index objectClass,uidNumber,gidNumber eq

>

> also couldnt:

> index accountStatus eq

> index objectClass,uidNumber,gidNumber eq

> be combined to:

> index accountStatus,objectClass,uidNumber,gidNumber eq

>

> Is it separated due to visual aesthetics or does it make a difference to

> the database somehow?

Frankly I doubt that the order matters. The indices I used are an

educated guess. Having too much indices slows things down, having too

few leads also to low performance. Generally speaking all attributes

that are accessed often should have indices.

[ ... ]

[ Thread continues here (3 messages/27.05kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 147 of Linux Gazette, February 2008

2-Cent Tips

2-cent tip: Wonky serial connections and stalled downloads

Ben Okopnik [ben at linuxgazette.net]

Wed, 5 Dec 2007 22:29:29 -0500

For various odd reasons [1], Ubuntu's 64-bit implementation of the

'usb-serial' module results in downloads over serial links using it

(e.g., GPRS-based cell cards) stalling on a regular basis. Eventually -

say, within a minute or two - these hangups resolve, and given that many

protocols implement some kind of a retry routine, the download continues

- but there are a few exceptions: notably 'apt-get' and some HTTP

downloads. These simply drop the connection with a "timeout" error

message. This, especially in the former case, can be really painful.

Here are a couple of methods that I've found to make life easier. In

testing these over the past several months, I've found them to be fairly

reliable.

HTTP: 'wget' is a great tool for continuing broken downloads (that's

what that "-c" option is all about) - especially if it's properly

configured. This doesn't require much: just create a ".wgetrc" file in

your home directory and add the following lines:

read_timeout = 10

waitretry = 10

After you do that, both 'wget' and 'wget -c' become much more friendly,

capable, and hard-working; they no longer hang around with shady types,

drink up their paychecks, or kick the dog. Life, in other words, becomes

quite good.

apt-get: This one takes a bit more, but still doesn't involve much

difficulty. Add the following entries to your '/etc/apt/apt.conf':

Acquire::http::Retries "10";

Acquire::http::timeout "10";

Then, whenever you don't want to stay up all night nursing your 'apt-get

upgrade' or whatever, launch it this way (assuming that you're root):

until apt-get -y upgrade; do sleep 1; done

This will keep relaunching 'apt-get' until it's all done - and will time

out quickly enough when the link stalls that you won't be wasting much

time between retries. This is a big improvement over the default

behavior.

[1] I did some Net research at the time, and found several discussions

that support my experience and diagnosis; unfortunately, I don't recall

the search string that I used back then, and can't easily dig these

resources up again. "The snows of yesteryear", indeed...

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

2-cent tip: Starting screensaver in KDE

clarjon1 [clarjon1 at gmail.com]

Mon, 21 Jan 2008 14:04:59 -0500

Well, here's a little tidbit I've found that may be of use to some people.

As many KDE users may know, you can have a keyboard shortcut set to

lock the screen. You can do the same with just starting the

screensaver!

Create a new item in the KDE menu editor, name it whatever youwant,

and have it to run this command:

kdesktop_lock --dontlock

Set it to a keyboard shortcut, save the menu, and viola! You now have

a keyboard shortcut to start your screensaver.

[ Thread continues here (2 messages/1.63kB) ]

2-cent tip: finding a USB device with Perl

Samuel Bisbee-vonKaufmann [sbisbee at computervip.com]

Sat, 29 Dec 2007 06:05:08 +0000

Greetings,

I got a USB toy for Christmas that didn't have a *nix client. After some

detective work I found a Perl module that did what I needed, except that

the module tried to access the toy with specific vendor and product ids.

For whatever reason my toy's ids did not match, so I modified the module

to search for my device. [1]

The first step is to find the product name for your device. This is

easily done with `lsusb` on the command line.

Next, break our your text editor and write some code. Remember, because

Perl uses libusb you will have to run your code as root; if you get

errors about being unable to access the device, then this is probably

the cause.

Here is the code that I used (was inside a sub, hence the use of 'return'):

my $usb = Device::USB->new;

my $dev;

foreach($usb->list_devices())

{

$dev = $usb->find_device($_->idVendor(), $_->idProduct()) and last if $_->product() eq "YOUR PRODUCT'S NAME FROM lsusb";

}

return -1 if !$dev;

This code iterates over the buses, checking each product's name for our

device's name from `lsusb`. If the device is found, then it will store

the handler in '$dev' and break out of the loop, else it will bubble the

error up by returning a negative value. When the device is found you

would claim and control it as normal (example in the 'new()' sub from

http://search.cpan.org/src/PEN/Device-USB-MissileLauncher-RocketBaby-1.00/lib/Device/USB/MissileLauncher/RocketBaby.pm).

If you are interested, I was playing with

Device::USB::MissileLauncher::RocketBaby

(http://search.cpan.org/~pen/Device-USB-MissileLauncher-RocketBaby-1.00/lib/Device/USB/MissileLauncher/RocketBaby.pm).

[1] It turns out that my USB toy uses the same ids; I probably just

tried to run the code when the device was unplugged. Oh well, at least I

got to learn how Perl interfaces with [USB] devices.

--

Sam Bisbee

[ Thread continues here (8 messages/18.33kB) ]

2-cent tip: Automatically reenabling CUPS printer queues

René Pfeiffer [lynx at luchs.at]

Thu, 27 Dec 2007 16:05:23 +0100

Hello!

I have a short shell script fragment for you. It automatically reenables

a printer queue on a CUPS printing server. CUPS takes different actions

when a print job encounters a problem. The print server can be

configured to follow the error policy "abort-job", "retry-job" or

"stop-printer". The default setting is "stop-printer". The reason for

this is not to drop print jobs or to send them to a printer that is not

responding. Beginning with CUPS 1.3.x you can set a server-wide error

policy. CUPS servers with version 1.2.x or 1.1.x cna only have a

per-printer setting.

If you have a CUPS server an wish the print queue to resume operation

automatically after they have been stopped, you can use a little shell

script to scan for disabled printers (stopped printing queues) and

reenable them.

#!/bin/sh

#

# Check if a printer queue is disabled and reenable it.

DISABLED=3D`lpstat -t | grep disabled | awk '{ print $2; }'`

for PRINTER in $DISABLED

do

logger "Printer $PRINTER is stopped"

cupsenable -h 127.0.0.1:631 $PRINTER && logger "Printer $PRINTER has been enabled."

done

This script can be executed periodically by crontab or by any other

means.

Best,

René.

[ Thread continues here (5 messages/5.98kB) ]

2-cent tip: Tool to do uudecoding

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Tue, 15 Jan 2008 09:26:34 +0700

For some reasons, you might still need to uudecode a whole or some

part of file(s). A good example is if you're a loyal Phrack reader

just like I am. Most likely, the authors put uudecoded text right into

the body of an article. Usually, it PoC (Proof of Concept) code so the

readers can gain better understanding of the explanation and try it by

themselves without re-typing the code.

So, you need uudecode but where is it? In recent distros like Fedora

7, it's packed into different name. For example, in Fedora it is

gmime-uudecode and included in gmime RPM.

To execute, simply do something like below:

$ gmime-uudecode -o result.tar.gz phrack-file-0x01.txt

The above command assume you know the format of the uudecoded file. If

you don't, just use arbitrary extension and use "file" command to find

out.

You don't need to crop the text file, gmime-uudecode will scan the

body of the text file, looking for a line containing "begin" string.

The scanning ends at the line containing "end".

Talkback: Discuss this article with The Answer Gang

Published in Issue 147 of Linux Gazette, February 2008

NewsBytes

By Howard Dyckoff and Kat Tanaka Okopnik

|

Contents: Contents:

|

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

A one- or two-paragraph summary plus a URL has a much higher chance of

being published than an entire press release. Submit items to [email protected].

News in General

Sun Pockets MySQL

Sun Pockets MySQL

At the same time Oracle was buying BEA, Sun announced its agreement to buy

Open Source stalwart MySQL AB. It was only a billion or so.

"As part of the transaction, Sun will pay approximately $800 million in cash

in exchange for all MySQL stock and assume approximately $200 million in

options." (from biz.yahoo.com)

While there was some negative reaction (see ArsTechnica's posting

here),

many firms in the partner network around MySQL seemed supporting or

accepting. Principal among these were those in the PHP community such as

Harold Goldberg, CEO, Zend Technologies (the PHP company).

"This is a very good deal for the open source and web economies and it

confirms the success of the LAMP (Linux-Apache-MySQL-PHP) stack as a web

platform. The valuation of the transaction reflects the broad enterprise

adoption of LAMP, which is also driving strong revenue growth at Zend

Technologies. We have a long history of working closely with MySQL and

are encouraged to see the senior roles the MySQL executives will play at Sun.

It gives us confidence that we will be able to work with Sun, like we did with

MySQL, to advance the innovation and open standards that power the adoption of

the LAMP stack." - Harold Goldberg, CEO, Zend Technologies

Andi Gutman, co-founder of Zend and a PHP community leader, expressed support

in his Jan 16th blog posting at

http://andigutmans.blogspot.com/.

"...Sun missed the boat on the modern Web. Today there is very little of the

huge PHP-based Web community that actually runs on Solaris."

Gutman noted that Sun needs to garner mindshare among the PHP community:

"In order to be successful, Sun has to recognize how significant PHP is for

the MySQL user base and has to be pragmatic in how it thinks about and

approaches this new business opportunity. By doing so they can truly use this

acquisition as an opportunity to become a serious player in the modern Web

server market."

MySQL and Zend have both promoted the popularity of the LAMP stack. These two

companies have tremendous overlap in their customer bases and have worked

closely together to ensure their products work well together. As a result,

much of the modern Web uses PHP and MySQL.and both Zend and MySQL have been

backed by Index Ventures.

According to MySQL VP Kaj Arno in his company blog: "...I expect Sun to

add value to our community. I don't expect huge change, though. We

continue to work with our quality contributors, we continue to provide our

MySQL Forums, the Planet MySQL blog aggregator, we remain on the #mysql-dev

and #mysql channels on Freenode, we provide MySQL University lessons, we meet

at the MySQL Users Conference. We'll put effort into connecting the many FOSS

enthusiasts and experts at Sun - whom we will now learn to know better - with

our active user community."

Sun was pushing PostgreSQL on Solaris in the last year and this switch to

favoring MySQL was unexpected. The main thrust seems to be fulling

out Sun's services portfolio and increased access to Linux users.

Sun CEO Jonathon Schwartz noted the revenue opportunities for Sun and spoke of

having a unique combination of developer resources for both a popular database

and a Unix operating system. See his blog entry here:

http://blogs.sun.com/jonathan/

Said Schwartz: "MySQL is already the performance leader on a variety of

benchmarks - we'll make performance leadership the default for every

application we can find (and on every vendor's hardware platforms, not just

Sun's - and on Linux, Solaris, Windows, all). For the technically oriented,

Falcon will absolutely sing on

Niagara... talk about a match made in heaven." (Niagara is the

multi-core SPARC processor)

He also wrote, "Until now, no platform vendor has assembled all the core

elements of a completely open source operating system for the Internet. No

company has been able to deliver a comprehensive alternative to the leading

proprietary OS."

There is also a Sun blog posting about the high cost of proprietary databases

and the growing "commoditization" of databases here:

http://blogs.sun.com/jkshah/entry/cost_of_proprietary_database

An eWeek article compares the acquisition approaches of Sun and Oracle, noting

that this further commits Sun to an Open Source strategy, and quotes industry

analysts as favoring the Sun-MySQL deal. See:

http://www.eweek.com/c/a/Enterprise-Apps/Oracle-Sun-Seek-Big-Buys/?kc=EWKNLENT011808STR2

Oracle closed its deal with BEA after upping its purchase price to $8.5

Billion dollars in January. BEA had been integrating its own purchases

and has overlaps with the Oracle product line in portals, business process

tools, and the ubiquitous Weblogic Java Application Server. Industry

analysts view Oracle's motive primarily as a market share ploy, to position

itself better vs. IBM and Microsoft.

The Wall Street Journal wrote "Acquisitions by Silicon Valley software giants

Oracle Corp. and Sun Microsystems Inc. suggested a slowing economy and other

forces could kick a recent wave of high-tech deals into higher gear." Wonder

who's next??

AMD Acknowledges Faux Pas, Plans for New 8-way Opteron

AMD Acknowledges Faux Pas, Plans for New 8-way Opteron

"We blew it, and we're humbled by it," AMD CEO Hector Ruiz told a

December analysts conference in New York. It has shipped few of

its new quad-core architecture Opterons due to problems in the translation

lookaside buffer (TLB). Although there are OS-level patches for the

problems with almost no performance penalty, BIOS level workarounds can result

in a 10% or greater performance penalty (and some worst case tests show a 30%

hit). All the OEMs - Dell, HP, IBM, Sun, et al. - are careful not

to incur a support nightmare, assuming customers may not install the OS

patches correctly.

The TLB issue primarily impacts virtualization due to how guest OS might use

some TLB registers. If the guest OS is patched not to use the

registers, there is no need for a BIOS fix. So some Barcelona

chips have been shipped for use in HPC clusters where bare metal performance

is optimized, such as the Texas Advanced Computing Center at U of

T. AMD said that it has shipped about 35,000 Barcelona chips by

mid-December and it expects to ship "hundreds of thousands" by the first

quarter of 2008. At the January earnings announcement, AMD did show that

shipments for its 4-way chips were ramping up.

(From Mercury News)

The Sunnyvale chip maker backed away from its previously stated goal of ending

its string of losses in 2007, saying it's aiming to break even in the second

quarter and turn an operating profit in the third quarter. It also confirmed

it will delay the widespread launch of its "quad-core" server chip until the

first quarter of next year. CEO Ruiz spoke encouragingly of "...a

phenomenal transition year in 2008."

As recently as 2005, AMD was grabbing market share from rival Intel. But then

came a series of missed deadlines on new products and price-cutting. The

company also acknowledged that it significantly overpaid for ATI Technologies

($5.6 billion) and will write down the value of the biggest acquisition in AMD

history.

The AMD road map includes a transition to 45nm process technology with

Shanghai and Montreal processors as successors to Barcelona starting in the

3rd Quarter of 2008. The Montreal chip will sport an octal core in 2009 with a

1 MB L2 cache and 6-12 MB of L3 per cache, roughly matching or modestly

exceeding the Intel roadmap. AMD is also a partner with IBM in

developing a 32 nanometer chip fabrication process that may be cheaper and

more flexible than the 32 nm process that Intel will use for its CPUs next

year.

Also helping, in January, HP announced that it would sell a new consumer PC -

the Pavilion m8330f - that uses AMD's new quad-core Phenom processor.

From ArsTechnica:

http://arstechnica.com/news.ars/post/20071213-forecasting-2008-amd-strikeapologiststrike-analyst-day.html

AMD

acknowledges quad-core woes; Promises rebound; Highlights roadmap

http://blogs.zdnet.com/BTL/?p=7339&tag=nl.e622

Intel Splits from OLPC Board

Intel Splits from OLPC Board

Intel has decided to pursue its own plans for 3rd World student computers,

ending its short association with the One Laptop Per Child project after

only a few months on its board. In a January statement, the OLPC board

said that Intel had violated written agreements with the board of

directors, specifically in not helping to develop software jointly with

the project. The OLPC statement also claimed that Intel "disparaged" the

OLPC's XO laptop to developing nations that were in negotiations to

purchase the XO.

The Intel scheme for 3rd world classrooms uses a small but more standard

laptop with flash storage that runs Windows, while OLPC uses Linux and

open source applications and has hardware -- especially the screen and

keyboard -- more suitable for 3rd world environments. OLPC's XO also consumes

substantially less power. The first models used a low-power AMD processor and

Intel was angling to get OLPC to switch to a low-power Intel chip.

An Intel spokesperson claimed the issue was that OLPC wanted Intel to work

"exclusively on the OLPC system".

Here are links to BBC pages that reviews features of both the OLPC XO and

Intel's Classmate mini-laptop:

http://news.bbc.co.uk/2/hi/technology/7094695.stm

and

http://news.bbc.co.uk/2/hi/technology/7119160.stm

JBuilder 2007 Named "Best Java IDE"

JBuilder 2007 Named "Best Java IDE"

InfoWorld has named CodeGear's JBuilder 2007 the "Best Java

Integrated Development Environment" as part of its recent 2008

Technology awards.

CodeGear is the developer tools foundry descended from Borland and its JBuilder

2007 IDE now runs on the open source Eclipse framework. JBuilder speeds the

development of Java and Web-based applications. CodeGear makes tools for

C/C++ and Java development as well as Ruby on Rails.

"I found a very smooth, very robust IDE with many innovative features. It's

safe to say that CodeGear decided to throw everything it had at this release -

and succeeded brilliantly," wrote Andrew Binstock, senior contributing editor

at InfoWorld. "...JBuilder feels solid throughout - a remarkable achievement

given its status as a first release on Eclipse."

To learn more about CodeGear and its products, visit

www.codegear.com.

Details on all winners of the InfoWorld 2008 Technology of the Year

awards are available online at:

http://www.infoworld.com/

SCO-Novell Damage Claims Get a Court Date

SCO-Novell Damage Claims Get a Court Date

The drama continues. A Federal judge has set an April date to determine just

how much the SCO Group must compensate Novell for royalties it collected

on Unix operating system licenses after Novell, and not

SCO, was proven to be the copyright holder.

Utah district judge Dale Kimball set the trial for April 29 in Salt Lake City.

SCO must compensate Novell for the royalties it collected but its has less in

the bank than that amount. Novell is concerned that SCO and its financial

backers may try to extract or liquidate it assets before paying.

Exist Global Acquires DevZuz

Exist Global Acquires DevZuz

Exist Global, a Philippines based software engineering firm, announced

their acquisition of US-based open source expert DevZuz to create a system

to help companies utilize outsourcing and overseas companies along with

open source technologies to create a cost efficient and also successful

software application.

DevZuz provides a platform that links enterprises to open source

application developers. Exist's strength is their cost effective rapid

software development. With the global demand for innovative software

increasing, combining these companies will

- Create a strategic software development firm that focuses on

services for start-ups, enterprises, and ISVs

- Provide low-cost, effective software applications to drive

businesses across the globe

- Utilize DevZuz's technology, CodeATLAS, a code search and open

source project repository that connects developers and software

components in a social networking model

http://www.exist.com/index.html

http://www.devzuz.com/web/guest/home

Eaton Corp/UbuntuIHV Certification

Eaton Corp/UbuntuIHV Certification

Eaton Corporation announced that its MGE Office Protection Systems

Personal Solution Pac v3 for Linux and Network Shutdown Module v3 is the

"first UPS power management solution to receive Ubuntu's Independent

Hardware Vendor (IHV) Certification". This software allows for default UPS

integration and is designed to assure communication, monitoring and

graceful shutdown during prolonged power disturbances.

MGE Office Protection Systems UPS hardware users can download the free

software at http://www.mgeops.com/index.php/downloads/software_downloads.

For additional information on MGE Office Protection Systems Linux

solutions, visit http://www.mgeops.com/index.php/products__1/power_management

.

For more information about Ubuntu and the IHV partners, visit http://webapps.ubuntu.com/partners/system/

.

To learn more about Eaton's complete line of MGE Office Protection

Systems products and service portfolio, visit www.mgeops.com.

TuxMobil Now Offers 7,000 Linux Guides for the Laptop

TuxMobil Now Offers 7,000 Linux Guides for the Laptop

The TuxMobil project is the largest online resource on Linux and mobile

computing, covering all aspects concerning Linux on laptops and notebooks.

In ten years, Werner Heuser has compiled more than 7,000 links to Linux

laptop and notebook installation and configuration guides.

These guides and how-tos are suitable for newbies as well as experts.

Most of the guides are in English, but special TuxMobil sections are

dedicated to other languages.

TuxMobil indexes the guides by manufacturer and model as well as by

processor type, display size and Linux distribution. All major Linux

distributions (RedHat, Fedora, Gentoo, Debian, Novell/SuSE, Ubuntu,

Mandriva, Knoppix) and many not-so-well-known distributions are present.

Other Unix derivatives like BSD, Minix and Solaris are also covered.

Linux installation guides for Tablet PCs and a survey of suitable drivers

and applications like handwriting-recognition tools are described in a

separate section.

TuxMobil provides details about Linux hardware compatibility for PCMCIA

cards, miniPCI cards, ExpressCards, infrared, Bluetooth, wireless LAN

adapters and Webcams.

http://tuxmobil.org/mylaptops.html

http://tuxmobil.org/tablet_unix.html

http://tuxmobil.org/hardware.html

http://tuxmobil.org/reseller.html

Open Moko

Open Moko

OpenMoko, creator of an integrated open source mobile platform, is now a

separate company.

"We have reached our initial milestone with the developer version of the

Neo 1973 - the world's first entirely open mobile phone," said OpenMoko CEO

Sean Moss-Pultz.

OpenMoko also announced a partnership with Dash Navigation, Inc. The Dash

Express, an Internet-connected GPS device for the consumer market,

runs on the Neo mobile hardware and software platform. The Dash Express is

now available for pre-order directly from Dash Navigation.

In further news, OpenMoko announced it has inked a deal with mobile device

distributor, Pulster, in Germany. Pulster specializes in online sales of

mobile devices, selling into the industrial and education markets with

focus on Linux-based solutions. Pulster will distribute the Neo 1973 and

Neo FreeRunner, a Wi-Fi-enabled mobile device with sophisticated graphics

capable of handing a new generation of open source mobile applications.

http://www.openmoko.com

http://www.dash.net

http://www.pulster.de/

Demonstrating Open Source Health Care Solutions (DOHCS '08) at

SCaLE

Demonstrating Open Source Health Care Solutions (DOHCS '08) at

SCaLE

An opportunity for providers, administrators, technical people and

journalists in the health field to see firsthand how open source is making

strong in-roads and hear real world success stories firsthand.

A number of the presenters and sponsors were just featured by the

California Health Care Foundation (CHCF) in their analysis here:

http://www.chcf.org/topics/view.cfm?itemID=133551

Events

InfoWorld's Virtualization Executive Forum - Free

Feb 4, Hotel Nikko, San Francisco, CA

https://ssl.infoworld.com/servlet/voa/voa_reg.jsp?promoCode=VIPGST

Southern California Linux Expo - SCaLE 2008

February 8 - 10, Los Angeles, CA

www.socallinuxexpo.org

Florida Linux Show 2008

February 11, Jacksonville, FL

http://www.floridalinuxshow.com

JBoss World 2008

February 13 - 15, Orlando, FL

http://www.jbossworld.com

COPU Linux Developer Symposium

February 19 - 20, Beijing, China

http://oss.org.cn/modules/tinyd1/index.php?id=3

FOSDEM 2008

February 23 - 24, Brussels, Belgium

http://www.fosdem.org/

USENIX File and Storage Technologies (FAST '08)

February 26 - 29, San Jose, CA

http://www.usenix.org/fast08/

Sun Tech Days

February 27 - 29, Hyderabad, India

http://developers.sun.com/events/techdays

Software Development West 2008

March 3 - 7, Santa Clara, CA

http://sdexpo.com/2008/west/register.htm

O'Reilly Emerging Technology Conference 2008

March 3 - 6, Marriott Marina, San Diego, CA

http://conferences.oreilly.com/etech

Sun Tech Days

March 4 - 6, Sydney, Australia

http://developers.sun.com/events/techdays

CeBIT 2008

March 4 - 9, Hannover, Germany

http://www.cebit.de/

DISKCON Asia Pacific

March 5 - 7,

Orchid Country Club, Singapore

Contact: [email protected]

Novell BrainShare 2008

March 16 - 21, Salt Palace, Salt Lake City, UT

www.novell.com/brainshare

(Early-bird discount price deadline: February 15, 2008)

EclipseCon 2008

March 17 - 20, Santa Clara, CA

http://www.eclipsecon.org/

($1295 until Feb 14, higher at the door; 15% discount for alumni and Eclipse

members)

AjaxWorld East 2008

March 19 - 20, New York City

http://www.ajaxworld.com/

SaaScon

March 25 - 26,

Santa Clara, CA

http://www.saascon.com

Sun Tech Days

April 4 - 6, St. Petersburg, Russia

http://developers.sun.com/events/techdays

RSA Conference 2008

April 7 - 11, San Francisco, CA

www.RSAConference.com

(save up $700 before January 11, 2008)

2008 Scrum Gathering April 14 - 16, Chicago, IL

http://www.scrumalliance.org/events/5--scrum-gathering

MySQL Conference and Expo

April 14 - 17,

Santa Clara, CA

www.mysqlconf.com

Web 2.0 Expo

April 22 - 25,

San Francisco, CA

sf.web2expo.com

Interop Las Vegas - 2008

April 27 - May 2, Mandalay Bay, Las Vegas, NV

http:://www.interop.com/

JavaOne 2008

May 6 - 9, San Francisco, CA

http://java.sun.com/javaone

Forrester's IT Forum 2008

May 20 - 23, The Venetian, Las Vegas, NV

http://www.forrester.com/events/eventdetail?eventID=2067

DC PHP Conference & Expo 2008

June 2 - 4, George Washington University, Washington, DC

http://www.dcphpconference.com/

Symantec Vision 2008

June 9 - 12, The Venetian, Las Vegas, NV

http://vision.symantec.com/VisionUS/

Red Hat Summit 2008

June 18 - 20, Hynes Convention Center, Boston, MA

http://www.redhat.com/promo/summit/

Dr. Dobb's Architecture & Design World 2008

July 21 - 24, Hyatt Regency, Chicago, IL.

http://www.sdexpo.com/2008/archdesign/maillist/mailing_list.htm

Linuxworld Conference

August 4 - 7,

San Francisco, California

http://www.linuxworldexpo.com/live/12/

Distros

Restful Ruby on Rails 2.0 Arrives on Track

Restful Ruby on Rails 2.0 Arrives on Track

The latest incarnation of the Ruby framework was a full version update that

arrived in early December and was quickly patched in mid-December to Version

2.0.2. The 2.x version expands the commitment of the Ruby community to REST

(Representational State Transfer) as the paradigm for web applications.

The new version follows a full year in development and implements new resources,

a more Restful approach to development, and beefed up security to resist XSS

(cross site scripting) and CSRF (cross site resource forgery - an area of

increasing exploitation). The CSRF hardening comes from including a special

token in all forms and Ajax requests, blocking requests made from outside of

your application.

Rails 2.x also features improved default exception handling via a class level

macro called 'rescue_from' which can be used to declaratively point

certain exceptions to a given action.

All commercial database adapters are now in their own 'gems'. Rails now only

ships with adapters for MySQL, SQLite, and PostgreSQL. Other adapters

will be provided by the DB firm independently of the Rails release schedule.

Get the latest Ruby on Rails

here

(http://www.rubyonrails.org/down

)

Debian 4.0.r2 Patches Security

Debian 4.0.r2 Patches Security

The Debian community released an Etch "point release" in late December which

included multiple security fixes for the Linux kernel that could allow

escalation to root privileges, denial-of-service (DOS) attacks, and toeholds

for malware. Application fixes are also included to prevent vulnerabilities

from being exploited. Among effected applications are OpenOffice

and IceWeasel (Debian's implementation of Firefox).

Other changes include stability improvements in specific situations, improved

serial console support when configuring grub, and added support for SGI O2

machines with 300MHz RM5200SC CPUs (from mips). Etch was first released in April

of 2007.

FreeBSD 6.3 Released

FreeBSD 6.3 Released

FreeBSD 6.3 was released in January and continues providing performance and

stability improvements, bug fixes and new features. Some of the highlights

include: KDE updated to 3.5.8, GNOME updated to 2.20.1, X.Org updated to 7.3;

BIND updated to 9.3.4; Sendmail updated to 8.14.2; lagg driver ported from

OpenBSD / NetBSD; Unionfs file system re-implemented; freebsd-update now

supports an upgrade command.

FreeBSD 6.3 is dedicated to the memory of Dr. Junichiro Hagino for his

visionary work on the IPv6 protocol and his many other contributions to the

Internet and BSD communities. Read the

release

announcement and

release

notes for further information.

openSUSE 11.0 Alpha 1 in Testing

openSUSE 11.0 Alpha 1 in Testing

openSUSE 11.0, Alpha 1, is now available for download and testing. The main

changes against Alpha 0 are: Sat Solver integration, Michael Schröder's 'sat

solver' library is now the default package solver for libzypp; heavy changes

to the appearance of the Qt installation (ported to Qt 4); KDE 4.0.0, Perl

5.10, glibc 2.7, NetworkManager 0.7, CUPS 1.3.5, Pulseaudio.

Here is the full

release

announcement.

MEPIS 7.0 is Released for Christmas

MEPIS 7.0 is Released for Christmas

MEPIS has released SimplyMEPIS 7.0. The ISOs for the 32 and 64 versions are in

the release directory at the MEPIS subscriber site and public mirrors.

The Mepis community also released the Mepis-AntiX 7.01 update for older PCs.

X.Org 7.4 Planned for Feb '08

X.Org 7.4 Planned for Feb '08

New development versions of X.org were released January. Version 7.4 is

scheduled to be released in late February 2008.

See the list of updated modules here:

http://www.x.org/wiki/Releases/7.4

Major items on the ToDo list include a new SELinux security module and a new

Solaris Trusted Extensions security module, both using XACE.

Software & Products

Perl 5.10 Released, First Update in 5 Years

Perl 5.10 Released, First Update in 5 Years

The ubiquitous Perl language, the Swiss Army knife of the Internet and *ix

distros, has got a whole new bag. The famous parsing interpreter gains

speed, while shedding weight, claims Perl

Buzz. Other interpreter improvements include:

-

Relocatable installation, for more filesystem flexibility

-

Source code portability is enhanced

-

Many small bug fixes

A simplified, smarter comparison operator is now in Perl 5.10. On this new

feature, Perl Buzz comments, "The result is that all comparisons

now just Do The Right Thing, a hallmark of Perl programming."

Other new language features include:

-

An improved switch statement

-

Regex improvements including "named captures" as opposed to positional

captures; also a recursive patterns feature

-

State variables that persist between subroutine calls

-

User defined pragmata

-

A "defined-or" operator

-

Better error messages

The Perl development team, called the Perl Porters, has taken working features

from the ambitious Perl 6 development

project to add useful functionality and to help bridge to the future version.

Perl 5.10 is available here:

ftp://ftp.cpan.org/pub/CPAN/src/5.0/

See a slide show on new Perl features at

http://www.slideshare.net/rjbs/perl-510-for-people-who-arent-totally-insane

Scribus 1.3.3.10

Scribus 1.3.3.10

The Scribus Team is pleased to announce the release of Scribus 1.3.3.10

This stable release includes the following improvements:

- Several fixes and improvements to text frames and the Story Editor.

- New Arabic Translation.

- More translation and documentation updates.

- Many improvements to PDF Forms exporting and non-Latin script handling

in

PDFs.

One of the major additions to this release is the final complete German

translation of the Scribus documentation by Christoph Schäaut;fer and Volker

Ribbert.

The Scribus Team will also participate again at the Third Libre Graphics

Meeting in Krakow, Poland in May. LGM is open to all and the team welcomes

seeing users, contributors and potential developers. For more info see:

http://www.libregraphicsmeeting.org/2008/

Public Beta of VMware Stage Manager

Public Beta of VMware Stage Manager

VMware Stage Manager, a new management and automation product that streamlines

bringing new applications and other IT services into production. Building on

the management capabilities of VMware Infrastructure, VMware Stage Manager

automates management of multi-tier application environments - including the

servers, storage and networking systems that support them - as they move

through various stages from integration to testing to staging and being

released into production.

Stage Manager should setup pre-production infrastructure while enforcing

change and release management procedures.

For more information on VMware Stage Manager or to download the beta, please

visit

http://www.vmware.com/go/stage_manager_beta.

Apatar Open Source Data Integration Partners with MySQL AB

Apatar Open Source Data Integration Partners with MySQL AB

Apatar, Inc., a provider of open source data integration tools, has joined the

MySQL Enterprise Connection Alliance (MECA), the third-party partnership

program for MySQL AB. This will make It easier to integrate MySQL-based

solutions with other data sources, such as databases, CRM/ERP applications,

flat files, and RSS feeds.

Apatar has released open source tools that enable non-technical staff to

easily link information between databases (such as MySQL, Microsoft SQL

Server, or Oracle), files (Excel spreadsheets, CSV/TXT files), applications

(Salesforce.com, SugarCRM), and the top Web 2.0 destinations (Flickr, Amazon

S3, RSS feeds).

Apatar tools include:

Apatar Enterprise Data Mashups

(www.apatar.com

)

Apatar Enterprise Data Mashups is an open source on-demand data integration

software toolset, which helps users integrate information between databases,

files, and applications. Imagine if you could visually design (drag-and-drop)

a workflow to exchange data and files between files (Microsoft Excel

spreadsheets, CSV/TXT files), databases (such as MySQL, Microsoft SQL,

Oracle), applications (Salesforce.com, SugarCRM), and the top Web 2.0

destinations (Flickr, RSS feeds, Amazon S3), all without having to write a

single line of code. Users install a visual job designer application to create

integration jobs called DataMaps, link data between the source(s) and the

target(s), and schedule one-time or recurring data transformations. Imagine

this capability fits cleanly and quickly into your projects. You've just

imagined what Apatar can do for you.

ApatarForge

(www.apatarforge.org

)

ApatarForge is an on-demand service, released under an open-source license,

which allows anyone to build and publish an RSS/REST feed of information from

their favorite Microsoft Excel spreadsheets, news feeds, databases, and

applications. ApatarForge is the community effort where business users and

open source developers publish, share, and re-use data integration jobs,

called DataMaps, over the web. ApatarForge is the prime destination for Apatar

users to collaborate and extend an Apatar Data Integration project.

ApatarForge.org now hosts more than 160 DataMaps containing metadata. All

DataMaps are available for free download at

www.apatarforge.org.

For more information on how MySQL can be used with Apatar, please visit

http://solutions.mysql.com/solutions/item.php?id=441

Emerson, Liebert Introduce Software-Scalable Ups

Emerson, Liebert Introduce Software-Scalable Ups

Emerson Network Power announced its new Liebert NX UPS with Softscale

technology, delivering "the first software scalable UPS for data centers".

Designed for small and medium size data centers, the UPS allows customers to pay

for only the UPS capacity they while providing the flexibility to purchase and

unlock additional capacity when needed via a simple software key. Visit here for

more information:

(http://www.liebert.com/newsletters/uptimes/2007/11nov/liebertnx.asp)

Free power and cooling monitoring tool for Liebert

customers

To help better maintain a data center's power and cooling infrastructure,

Emerson Network Power is now offering a facility management tool to all

Liebert service contract customers at no additional cost. The Customer

Services Network is a web-based monitoring and reporting tool that provides

you with up-to-date information on every piece of critical protection

equipment, right from your desktop. Visit here for the complete story:

(http://www.liebert.com/newsletters/uptimes/2007/11nov/customerservicesnetwork.asp

)

Good OS Announces Debut of gOS 2.0 "Rocket" at CES

Good OS Announces Debut of gOS 2.0 "Rocket" at CES

Good OS, the open source startup introduced gOS, a Linux operating

system with Google and web applications, on a $199 Wal-Mart PC last

November.

gOS 2.0 "Rocket" is packed with Google Gears, new online offline

synchronization technology from Google that enables offline use of web

apps; gBooth, a browser-based web cam application with special effects,

integration with Facebook and other web services; shortcuts to launch

Google Reader, Talk, and Finance on the desktop; an online storage drive

powered by Box.net; and Virtual Desktops, an intuitive feature to easily