...making Linux just a little more fun!

Mailbag

This month's answers created by:

[ Sayantini Ghosh, Amit Kumar Saha, Ben Okopnik, Joey Prestia, René Pfeiffer, Neil Youngman, Rick Moen, Thomas Adam ]

...and you, our readers!

Still Searching

Reg:Postfix mail redirection

Smile Maker [britto_can at yahoo.com]

Tue, 1 Jul 2008 00:10:15 -0700 (PDT)

Folks:

If I have got mails which does not contain <MydomainName > in the from

address which is sent to some aliases should get "redirected" to my

account.

Any idea..

--

Britto

Getting started with Linux & OLPC

Ben Okopnik [ben at linuxgazette.net]

Mon, 14 Jul 2008 11:10:02 -0400

[[[ I've changed the subject line from the undescriptive "can u

help...." -- Kat ]]]

----- Forwarded message from nick zientarski <[email protected]> -----

From: nick zientarski <[email protected]>

To: [email protected]

Subject: can u help....

Date: Mon, 14 Jul 2008 02:16:57 -0400

hello~

i bought an OLPC "give one/get one" computer, well...4 total, one for my

mother and I...we need a little help with getting started on the linux

system...

the more i read about this open source way to compute gets me more and

more excited, it is just a little much starting out...any resources or

service companies i could mail to start our new relationship with

linux..we would be more than greatful

thank you for your time  ~nick zientarski~

~nick zientarski~

----- End forwarded message -----

Our Mailbag

Averatec n40bers and sy0b3es

Tony Davis [tdavis803 at bellsouth.net]

Sat, 19 Jul 2008 14:49:37 -0400

Sirs

i have a averatec 5400 and when i try to type something i get this get n40bers and sy0b3es What is wrong

THANK You

Tony Davis

the only way i can type anything is to hold down a fn key

[ Thread continues here (2 messages/1.33kB) ]

inquiry on tcpdump

Ignacio, Domingo Jr Ostria - igndo001 [DomingoJr.Ignacio at postgrads.unisa.edu.au]

Tue, 1 Jul 2008 13:24:03 +0930

Hello everyone,

Good day!

I am using tcpdump in my linux system to sniff and capture tcp packet

headers.

I inserted a new variable, srtt, into the print_tcp.h header file and

tcp.c source code.

I want to print/ouput/capture the inserted variable srtt into the tcp

packet headers once I run tcpdump.

Any idea on how to go about this?

However, once I run tcpdump with all the changes I made (of course,

after configuring and making) and I got an error:

Bad header length and <bad opt>.

I tried to increase the snaplength to 1514 but I still got the same

errors. What seems to be the problem, anyone? Thanks!

Also, I cannot see the inserted srtt variable in the tcpdump trace

files. Any idea on these guys?

I don't know if this is the right venue to ask these question since this

is for linux queries but still I am hoping that anyone has an experience

on this. Help would be appreciated.

Cheers, Dom

[ Thread continues here (5 messages/5.48kB) ]

[OT] Nagware from Google Translate

Jimmy O'Regan [joregan at gmail.com]

Tue, 15 Jul 2008 16:18:52 +0100

I was trying to point a friend of mine to http://librivox.org today,

and for some reason he couldn't connect to it. The quickest way of

finding a proxy he could use was via Google Translate (he's Polish).

When I looked at the page, however, I say that the phrase 'some free

software' (in refernce to open source software, such as Audacity) had

become 'niektóre wolne oprogramowanie *rejestrujące*' - 'some

registered free software' - which makes me wonder if Google's next

service will be nagware.google.com

[ Thread continues here (2 messages/1.47kB) ]

SMTP Auth Problem

Joey Prestia [joey at linuxamd.com]

Sat, 05 Jul 2008 09:28:38 -0700

Hi all,

I am administering a e-mail server running RHEL 5 with sendmail 8.13 and

using dovecot imap most of the users are going to need to be able to

send mail from their laptops and i have no idea what network they will

be utilizing. After looking at

http://www.joreybump.com/code/howto/smtpauth.html and following the

guide there I was able to get authenticated relay enabled.

I am using saslauthd and never created sasl passwords but

following the guide at http://www.joreybump.com/code/howto/smtpauth.html

was able to get it working. now after 6 months of no problem and then

the other day changed my password to a stronger one now I cannot relay

from anywhere.

[root@linuxamd ~]# sasldblistusers2

listusers failed

[root@linuxamd ~]#

[root@linuxamd ~]# testsaslauthd -u joey -p ******

0: NO "authentication failed"

is this my problem?

All accounts are stored in /etc/passwd saslauthd is running and I

performed a restart of saslauthd with out any errors.

I have tried with both kmail and thunderbird. My usual settings are tls

if available and and smtp auth with username and password I thought at

first that this was the problem it was using the old password so I

removed the default smtp server settings and removed the passwords. So

it would force me to manually enter the password again I did this while

on another network and tried to send with no success. As soon as I

disconnected from the neighboring lan and connected to the local lan it

took and sent but I am unsure if it is using auth it seems like it is

only sending because its on my lan how would I verify the authentication

process is working at all? I am not certain exactly how saslauthd

works and have seen nothing unusual in my logs. Thunderbird simply times

out when I try to send from other networks and tells me to check my

network settings.

Thanks in advance,

--

Joey Prestia

L. G. Mirror Coordinator

http://linuxamd.com

Main Site http://linuxgazette.net

[ Thread continues here (18 messages/42.62kB) ]

Regular Expressions

Deividson Okopnik [deivid.okop at gmail.com]

Thu, 17 Jul 2008 23:50:11 -0300

Quick regular expressions questions, I have a string and i want to

return only whats inside the quotes inside that string - example the

string is -> "Deividson" Okopnik <-, and i want only -> "Deividson"

<-. Its guaranted that there will be only a single pair of

double-quotes inside the string, but the lenght of the string inside

it is not constant.

Im using PHP btw

Thanks

DeiviD

[ Thread continues here (13 messages/16.08kB) ]

What other existing RMAIL Emacs commands or techniques or mechanisms are useful for sorting out spam messages?...

don warner saklad [don.saklad at gmail.com]

Mon, 30 Jun 2008 08:50:57 -0400

For nonprogrammer type users what other existing RMAIL Emacs commands

or techniques or mechanisms are useful for sorting out spam messages

more efficiently and effectively?... for example in RMAIL Emacs

laboriously sorting out spam messages can be tried with

Esc C-s Regexp to summarize by: ...

For nonprogrammer type users it appears that using existing commands

for each session is an alternative to attempts at composing programs

or tweaking others' programs or the difficulties of dotfiles.

C-M-s runs the command rmail-summary-by-regexp

as listed on the chart

C-h b describe-bindings

Key translations:

key binding

--- -------

C-x Prefix Command

C-x 8 iso-transl-ctl-x-8-map

^L

Major Mode Bindings:

key binding

--- -------

C-c Prefix Command

C-d rmail-summary-delete-backward

RET rmail-summary-goto-msg

C-o rmail-summary-output

ESC Prefix Command

SPC rmail-summary-scroll-msg-up

- negative-argument

. rmail-summary-beginning-of-message

/ rmail-summary-end-of-message

0 .. 9 digit-argument

< rmail-summary-first-message

> rmail-summary-last-message

? describe-mode

Q rmail-summary-wipe

a rmail-summary-add-label

b rmail-summary-bury

c rmail-summary-continue

d rmail-summary-delete-forward

e rmail-summary-edit-current-message

f rmail-summary-forward

g rmail-summary-get-new-mail

h rmail-summary

i rmail-summary-input

j rmail-summary-goto-msg

k rmail-summary-kill-label

l rmail-summary-by-labels

m rmail-summary-mail

n rmail-summary-next-msg

o rmail-summary-output-to-rmail-file

p rmail-summary-previous-msg

q rmail-summary-quit

r rmail-summary-reply

s rmail-summary-expunge-and-save

t rmail-summary-toggle-header

u rmail-summary-undelete

w rmail-summary-output-body

...

[ Thread continues here (3 messages/4.45kB) ]

kernel inquiry

Ignacio, Domingo Jr Ostria - igndo001 [DomingoJr.Ignacio at postgrads.unisa.edu.au]

Thu, 3 Jul 2008 16:51:39 +0930

Hi guys,

In the tcp.h header file , I found the variables:

#define TCPOPT_WINDOW 3

#define TCPOPT_SACK 5

#define TCPOPT_TIMESTAMP 8

Does anyone can help me with what the numbers 3,5,8 means? Are they the

tcp option numbers in the TCP header? Or are they port definitions?

Cheers,

Dom

[ Thread continues here (3 messages/4.94kB) ]

Tool to populate a filesystem?

René Pfeiffer [lynx at luchs.at]

Wed, 16 Jul 2008 23:46:06 +0200

Hello!

I am playing with 2.6.26, e2fsprogs 1.41 and ext4, just to see what ext4

can do and what workloads it can handle. Do you know of any tools that

can populate a filesystem with a random amount of files filled with

random data stored a random amount of directories? I know that some

benchmarking tools do a lot of file/directory creation, but I'd like to

create a random filesystem layout with data, so I can use the usual

tools such as tar, rsync, cp and others to do things you usually do when

setting up, backuping and restoring servers.

Curious,

René.

[ Thread continues here (13 messages/24.92kB) ]

Multi-host install of FC6

Ben Okopnik [ben at linuxgazette.net]

Wed, 16 Jul 2008 17:22:12 -0400

Hi, all -

I'm going to be teaching a class next week in which the student machines

are going to have Fedora Core 6 installed on them, so the poor on-site

tech guy is going to be spending his weekend flipping the 6 installation

CDs among a dozen-plus machines - not a happy-fun thing at all, as I see

it.

Does anyone know of a way to set up an "install server" for FC6? Perhaps

I've been spoiled by the Solaris 'jumpstart' system, where you set up a

simple config file, tell your hosts to load from the jump server, and

walk away - but I can't help feeling that anything Solaris can do, Linux

can do better.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (11 messages/12.45kB) ]

Auto detect and mount SD Card

kanagesh radhakrishnan [rkanagesh at gmail.com]

Thu, 24 Jul 2008 12:30:31 +0530

Hello,

I am working on an ARM based custom development board that has a Micro SD

connector.

I use Linux (kernel version 2.6.24) on the board and I have support for the

Micro SD cards. The SD driver has support for hotplug. At the bash prompt

on the target board, I am able to mount the card, write data to it and read

data from it. What utility do I need to add to the root file system for the

card to be detected automatically when it's inserted and the file system on

the card's media to be mounted to a specified mount point?

A couple of years ago, I was working with PCMCIA Flash memory cards and USB

memory disks. There used to be a utility called Card Manager (cardmgr) that

detects the insertion of a PCMCIA flash memory card and mounts it

automatically. USB memory disks used to be automounted by the hotplug

scripts. Is there any utility similar to this for SD/MMC/Micro SD cards?

Thanks in advance,

Kanagesh

[ Thread continues here (2 messages/1.90kB) ]

SCP Sensing Remote file presense

Smile Maker [britto_can at yahoo.com]

Tue, 22 Jul 2008 04:28:11 -0700 (PDT)

Folks:

When you do scp to remote machine, linux assumes to overwrite the target

file if exists. Is there any way to check whether the target file exists

and then copy the actual file.

---

Britto

[ Thread continues here (3 messages/2.04kB) ]

asking about MIB structure of SNMP agent running on various type of Solaris, HP-UX and Windows

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Wed, 9 Jul 2008 17:03:12 +0700

Hello gang!

As the subject says, I have hard times understanding the result of

snmpwalk against those platforms. Not that I can't query those nodes,

but snmpwalk is unable to translate the numeric form of OID into human

readable string label.

More precisely, I need to find the MIB files that describe the

returned SNMP data. So far, my best bet is by referring to

oidview.com, but the MIB files I have downloaded from this site don't

always work as expected i.e some OIDs are still in numeric form, thus

I don't whether it refers to disk usage, cpu utilization and so on.

I will highly appreciate any inputs regarding this issue.

regards,

Mulyadi.

[ Thread continues here (8 messages/10.32kB) ]

is it possible to make CUPS daemons cooperate between themselves?

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Wed, 9 Jul 2008 17:26:55 +0700

Hi gang...

Sorry if the subject sounds confusing, but I wonder, is it possible?

AFAIK, each CUPS daemons manage its own queue on the node's own

disk...and by design I don't see CUPS is designed as cooperative

daemon.

By "cooperative" I mean something like having unified print spool,

unified and shared printer device's name among CUPS daemons...and so

on.

Looking forward to hear any ideas about it...

regards,

Mulyadi.

[ Thread continues here (7 messages/6.96kB) ]

thanks for CUPS tips

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Thu, 24 Jul 2008 08:24:38 +0700

Hi all

Just wanna say big thanks for your suggestions when I asked about CUPS

configuration days ago. it works quite nicely in my environment.

I know I haven't share much for LG but you answered my question faster

than my close colleagues/partner. I highly appreciate that. You guys

really do something valuable in your free time.

God bless!

regards,

Mulyadi.

Followup: US sanctions-compliant Linux

Simon Lascelles [simon.lascelles at rems.com]

Thu, 3 Jul 2008 11:17:08 +0100

I would like to thank everyone for their comments. I do hope the topic has

not made too many peoples blood pressure to rise to "dangerous levels",

although it is clear a topic several people are passionate about.

In summary it looks to me that Linux could be used on one of two basis's;

1. If we were not a UK company (or any company citied in the

restrictions). To resolve this we could establish an off shore company to

provide the distribution to circumvent this restriction.

2. Encryption was removed from the package. A task I believe is

relatively simple to achieve.

Two points I would like to make for your information and further comment is

that I believe the restriction on US content is 10% not 1% as one person

commented. Secondly I will of course seek legal advice before proceeding

but wanted to see if this issue had been seen before, by the experts (the

legal guys don't tend to know what they are talking about anyway).

Thank you once again and if you have further advice on the issue it would be

gratefully appreciated.

--

Simon Lascelles

Managing Director

REMS International

Email: [email protected] <blocked::mailto:[email protected]>

Web Site: http://www.rems.com/ <http://www.rems.com/>

Mobile: 07956 676112

Telephone: 01727 848800;

Yahoo:

[email protected]

Mobile communications are changing the face of business, and organisations

that deploy mobile solutions will reap the greatest competitive advantage

_________________________________________________

[ Thread continues here (2 messages/4.38kB) ]

Searching for multiple strings/patterns with 'grep'

Amit k. Saha [amitsaha.in at gmail.com]

Thu, 3 Jul 2008 16:46:41 +0530

On Mon, Jun 23, 2008 at 5:11 PM, Ben Okopnik <[email protected]> wrote:

> On Mon, Jun 23, 2008 at 11:52:44AM +0530, Amit k. Saha wrote:

>> Hello TAG,

>>

>> I have a text file from which I want to list only those lines which

>> contain either pattern1 or patern2 or both.

>>

>> How to do this with 'gre'p?

>>

>> Assume, file is 'patch', and 'string1' and 'string2' are the two patterns.

>>

>> The strings for me are: 'ha_example' and 'handler'- so I cannot

>> possibly write a regex for that.

>

> Over the years, I've developed a working practice that says "don't use

> grep for anything more than a simple/literal search." Remembering the

> bits that you have to escape vs. those you don't is way too much of a

> pain. Stick with 'egrep', and you don't have to remember all that stuff.

>

> ``

> egrep 'string1|string2' filepattern

hmm. Works great.

-Amit

--

Amit Kumar Saha

http://blogs.sun.com/amitsaha/

http://amitksaha.blogspot.com

Skype: amitkumarsaha

Talkback: Discuss this article with The Answer Gang

Published in Issue 153 of Linux Gazette, August 2008

Mailbag 2

This month's answers created by:

[ Rick Moen ]

...and you, our readers!

Editor's Note

Jimmy O'Regan forwarded and crosslinked several followup discussions on his

article on Apertium from LG 152. For your reading ease and pleasure, I've

gathered those threads into this separate page. -- Kat

Cuneiform OCR source available for linux

Jimmy O'Regan [joregan at gmail.com]

Tue, 1 Jul 2008 11:22:57 +0100

Cognitive released the source of the kernel of their OCR system

(http://www.cuneiform.ru/eng/index.html), and the linux port

(https://launchpad.net/cuneiform-linux) has reported their first

success: https://launchpad.net/cuneiform-linux/+announcement/561

Hopefully, they'll be able to get the layout engine working - I've

used the Windows version, and it was very good at analysing a

complicated mixed language document.

[ Thread continues here (3 messages/1.29kB) ]

Playing With Sid : Apertium: Open source machine translation

Jimmy O'Regan [joregan at gmail.com]

Thu, 3 Jul 2008 10:57:57 +0100

---------- Forwarded message ----------

From: Jimmy O'Regan <[email protected]>

Date: 2008/7/3

Subject: Re: Playing With Sid : Apertium: Open source machine translation

To: Arky <[email protected]>

2008/7/3 Arky <[email protected]>:

> Arky has sent you a link to a blog:

>

> Accept my kudos

>

Thanks, and thanks for the link

Would you mind if I forwarded your e-mail to Linux Gazette? LG has a

lot of Indian readers, and maybe we can recruit some others who would

be interested in helping with an Indian language pair.

Also, I note from your blog that you have lttoolbox installed, and

that you can read Devanagari - we have a Sanskrit analyser that uses

Latin transliteration; I tried to write a transliterator to test it

out, but, alas, it doesn't work, and I can't tell why.

It's in our 'incubator' module in SVN, here:

http://apertium.svn.sourceforge.net/svnroot/apertium/trunk/apertium-sa-XX/apertium-sa-XX.ucs-wx.xml

> Blog: Playing With Sid

> Post: Apertium: Open source machine translation

> Link:

> http://playingwithsid.blogspot.com/2008/07/apertium-open-source-machine.html

>

> --

> Powered by Blogger

> http://www.blogger.com/

>

[ Thread continues here (6 messages/9.61kB) ]

Apertium-transfer-tool info request

Jimmy O'Regan [joregan at gmail.com]

Fri, 11 Jul 2008 17:47:01 +0100

[I'm assuming Arky's previous permission grant still stands, also

cc'ing the apertium list, for further comment]

---------- Forwarded message ----------

From: Rakesh 'arky' Ambati <[email protected]>

Date: 2008/7/11

Subject: Apertium-transfer-tool info request

To: [email protected]

Hi,

I am trying to use apertium-transfer-tools on Ubuntu Hardy, can you

kindly point to working example/tutorial where transfer rules are

generated from alignment templates.

Cheers

--arky

Rakesh 'arky' Ambati

Blog [ http://playingwithsid.blogspot.com ]

[ Thread continues here (3 messages/13.54kB) ]

[Apertium-stuff] Apertium-transfer-tool info request

Felipe Sanchez Martinez [fsanchez at dlsi.ua.es]

Fri, 11 Jul 2008 23:45:29 +0200

Hi,

Jimmy, very good explanation, :D

> Also; the rules that a-t-t generates are for the 'transfer only' mode

> of apertium-transfer: this example uses the chunk mode - most language

> pairs, unless the languages are very closely related, would really

> be best served with chunk mode. Converting a-t-t to support this is on

> my todo list, and though doing it properly may take a while, I can

> probably get a crufty, hacked version together fairly quickly. With a

> couple of sed scripts and an extra run of GIZA++ etc., we can also

> generate rules for the interchunk module.

We could exchange some ideas about that, and future improvements such as

the use of context-dependent lexicalized categories. This would give

a-t-t better generalization capabilities and make the set of inferred

rules smaller.

> The need for the bilingual dictionary seemed a little strange to me at

> first, but Mikel, Apertium's BDFL, explained that it really helps to

> reduce bad alignments. This probably means that a-t-t can't generate

> rules for things like the Polish to English 'coraz piêkniejsza' ->

> 'prettier and prettier', but I haven't checked that yet.

The bilingual dictionary is used to derive a set of restrictions to

prevent an alignment template (AT) to be applied in certain conditions

in which it will generate a wrong translation. Restrictions refer to the

target language (TL) inflection information of the non-lexicalized words

in the AT. For example, suppose that you want to translate the following

phrase from English into Spanish:

"the narrow street", with the following morphological analysis (after

tagging): "^the<det><def><sp>$ ^narrow<adj><sint>$ ^street<n><sg>$"

The bilingual dictionary says:

''

^narrow<adj><sint>$ -------> estrecho<adj><f><ND>$

^street<n><sg>$" -------> calle<n><f><sg>$

''

Supose that you want to apply this AT:

SL: the<det><def><sp> <adj><sint> <n><sg>

TL: el<det><def><f><sg> <n><f><sg> <adj><f><sg>

Alignment: 1:1 2:3 3:2

Rstrictions (indexes refer to the TL part of the AT):

w_2 = n.f.* w_3 = adj.*,

* Note: "the" and "el" are lexicalized words

This AT generalizes:

[ ... ]

[ Thread continues here (6 messages/15.77kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 153 of Linux Gazette, August 2008

Talkback

Talkback:116/okopnik.html

[ In reference to "Introduction to Shell Scripting, part 6" in LG#116 ]

Mr Dash Four [mr.dash.four at googlemail.com]

Mon, 30 Jun 2008 13:06:25 +0100

Hi Gang,

_*

*_Found this list thanks to the excellent article of Ben Okopnik here

<http://linuxgazette.net/116/okopnik1.html> - I was trying to create a

floppy disk to help me boot-up an old PC (PII, 256MB RAM, 2xUSB + FDD),

by transferring the control over to the USB drive (which can't be

booted-up directly as the PC is too old). The USB contains slimmed-down

version of FC8 containing propriety tools and programs. I wasn't

successful, though this is not why I am writing this email (help with

the floppy-usb boot-up is also appreciated though that may be a subject

to a different email as Ben's article points out that I would need

Knoppix Linux, but I have FC8 on the USB drive). Anyway...

After unsuccessfully trying to make the boot-up floppy, allowing me to

boot-up the USB drive I tried to make a bare-bone boot-up floppy,

containing just stage1 & stage 2 files to get me to the GRUB prompt (see

'What happened' below) with the intention of trying my luck from there

and this is where I encountered my problem.

_*The problem:*_ I can't boot from my HDD - when I try I see the word

"GRUB " on the screen and then nothing (this is not the GRUB prompt - it

is just a message on the screen saying "GRUB " and then the system halts)!

I can access all my partitions - no problem (none of them appear

damaged, same is valid for my partition table, thank god) using

Boot/Rescue CD, which I made following the instructions shown in section

3.4 here <http://orgs.man.ac.uk/documentation/grub/grub_3.html>.

_*My system:*_

Fedora Core 8 with the latest updates, P4-M, 1GB RAM, 80GB HDD, split

into the following partitions:

hda1 - primary, WinXP, NTFS

hda2 - extended

hda5 - logical, data disk, NTFS

hda6 - logical, RedHat 9, ext2

hda7 - logical, Fedora Core 8 /boot, ext2

hda8 - logical, Fedora Core 8 LVM volumes (5 of them mapped to /, /usr,

/var, /home and /var/cache), lvm

hda9 - logical, data backups, NTFS

hda3 - primary, Service/Rescue DOS, FAT

GRUB used to be in my MBR as through its menu I used to boot all

partitions successfully (up until now that is). One other thing I need

to point out - as soon as the partitions were made I have created a

copies of the boot sectors of all my partitions, plus the main MBR

sector using these commands:

%dd if=/dev/hda of=hda-mbr-full bs=512 count=1 # mbr including partitions

%dd if=/dev/hda of=hda-mbr-nopart bs=446 count=1 # no partition

information, just the MBR

%dd if=/dev/hdaX of=hdaX-bootsect bs=512 count=1 # boot record of a

particular partition

where 'X' is the number of the partition. The files were saved on a

separate usb device (memory stick) for safekeeping.

I should also point out that within FC8 Linux my HDD is mapped as

/dev/sda (not /dev/hda).

[ ... ]

[ Thread continues here (4 messages/20.03kB) ]

Talkback:107/pai.html

[ In reference to "Understanding Threading in Python" in LG#107 ]

Ben Okopnik [ben at linuxgazette.net]

Thu, 3 Jul 2008 10:40:33 -0400

----- Forwarded message from Greg Robinson <[email protected]> -----

Date: Wed, 2 Jul 2008 20:41:13 -0700

From: Greg Robinson <[email protected]>

To: [email protected]

Subject: Understanding threading in Python

Hello,

I appreciated the article entitled "Understanding Threading in Python"

by Krishna G Pai. However, it states that "there is no significant

difference between threaded and non threaded apps." While this is true

for the specific example used in the article, the statement is

misleading. Don't neglect the advantages of threading, even with green

threads such as those in Python, in situations where the thread is

likely to block on something other than local processing. For example,

if someone fires off numerous RPCs to multiple computers in

succession, each RPC call may have to sit and wait for the callback.

This can take much longer than dispatching multiple simultaneous RPCs

and handling each one concurrently.

Cheers,

Greg Robinson

----- End forwarded message -----

Talkback:152/lg_tips.html

[ In reference to "2-Cent Tips" in LG#152 ]

Greg Metcalfe [metcalfegreg at qwest.net]

Fri, 25 Jul 2008 11:35:02 -0700

Regarding "2-cent tip: Removing the comments out of a configuration file":

I don't like to invoke Yet Another Interpreter (Perl, Python, etc.) for simple

problems, when I've already got a perfectly good one (the bash shell)

running, and all those wonderful GNU programs. So I often view 'classic'

config files (for httpd, sshd, etc) via the following, which I store as

~/bin/dense:

!#/bin/bash

# Tested on GNU grep 2.5.1

grep -Ev ^\([[:space:]]*#\)\|\(^$\) $1

~/bin is in my path, so the command is simply 'dense PATH/FILE'. This code

strips comments, indented comments, and blank lines.

Of course, if you need this frequently, and bash is your login shell, a better

approach might be to just add:

alias dense='grep -Ev ^\([[:space:]]*#\)\|\(^$\)'

to your ~/.bashrc, since it's so small, thus loading it into your environment

at login. Don't forget to source the file via:

'. ~/.bashrc'

after the edit, if you need it immediately!

Regards,

Greg Metcalfe

[ Thread continues here (3 messages/8.53kB) ]

Talkback:152/oregan.html

[ In reference to "Apertium: Open source machine translation" in LG#152 ]

Jimmy O'Regan [joregan at gmail.com]

Fri, 4 Jul 2008 17:43:45 +0100

I mentioned the infinite monkeys idea in relation to SMT in my

article... I found today that it has been done:

http://www.markovbible.com/

"You've seen the King James, the Gutenberg and the American Standard,

but now here is the future ........ The Markov Bible.

Everyone has heard the saying 'a million monkeys typing for a million

years will eventually produce the bible'.

Well, we have done it!!! And in a far shorter time.

Using our team of especially trained Markov Monkeys, we can rewrite

whole books of the bible in real time."

[ Thread continues here (2 messages/4.45kB) ]

Talkback:116/herrmann.html

[ In reference to "Automatic creation of an Impress presentation from a series of images" in LG#116 ]

Karl-Heinz Herrmann [kh1 at khherrmann.de]

Wed, 2 Jul 2008 12:23:06 +0200

Hi TAG,

I got an email a few days back including a patch to the

img2ooImpress.pl script in issue 116. I would like to forward the

following discussion to TAG (with permission, Rafael is CC'ed):

-------------------------------------------------------------------------

From: Rafael Laboissiere <[email protected]>

To: Karl-Heinz Herrmann <[email protected]>

Subject: Re: Bug in your img2ooimpress.pl script

Date: Tue, 24 Jun 2008 21:53:36 +0200

User-Agent: Mutt/1.5.17+20080114 (2008-01-14)

* Karl-Heinz Herrmann <

[email protected]> [2008-06-24 21:39]:

> thanks for the patch... actually I stumbled onto the problem myself

> after an upgrade of OODoc some time back and sent a correction to LG

> which got published as talkback for the article. That patch is there:

> http://linuxgazette.net/132/lg_talkback.html#talkback.02

>

> but as #116 was before they introduced talkbacks the talkback is not

> actually linked in the original article so it doesn't help people

> much. As LG is mirrored in many places the original articles are not

> changed usually.

Indeed... Sorry for bothering you with this. It is too bad that the LG

article does not link to the talkback.

> I hope -- apart from having to find and fix that bug -- that script

> was helpful

Yes, it was. I wrote another Perl script based on yours to directly

convert a PDF file into ooImpress. Besides the OpenOffice::OODoc

module, it needs the xpdf-utils (for pdfinfo), the pdftk, and the

ImageMagick (for convert) packages. The script is attached below.

[...]

and another followup by Rafael:

I am attaching below an improved version of the script. I improved the

header material, put it under the GPL-3+ license and it now accepts a --size

option.

I also put the script in a (kind of) permanent URL:

http://alioth.debian.org/~rafael/scripts/pdf2ooimpress.pl

----------------------------------------------------------------------

Is there a possibility to "upgrade" the older TAG pages with the

talk-back links? I'm of course aware of the mirror problem.

[[[ FYI to Karl-Heinz (and everyone else interested) - I manually

build the Talkback style for dicussions of articles that predate the

Talkback link. It doesn't help during the discussion, but it does make

the published version look just as spiffy! -- Kat ]]]

In any case his improved script version might be a nice 2c tip (or mail

bag) contribution.

K.-H.

[ Thread continues here (6 messages/14.69kB) ]

Talkback:116/okopnik.html - success!

[ In reference to "Introduction to Shell Scripting, part 6" in LG#116 ]

Mr Dash Four [mr.dash.four at googlemail.com]

Sun, 06 Jul 2008 02:00:12 +0100

Hi, Ben,

> Please don't drop TAG from the CC list; we all get "paid" for our time

> by contributing our technical expertise to the Linux community, and that

> can't happen in a private email exchange.

Apologies, it wasn't intentional - I've got your message and hit the

'reply' button without realising there was a cc: as well.

>> Here is my plan (funny enough I was about to start doing this in about 2

>> hours and possibly spend the whole of tomorrow - Sunday - depending in

>> what kind of a mess I may end up in):

>>

>> 1. Backup my entire current /boot partition (it is about 52MiB).

>> 2. Restore a month-old backup of this /boot partition to a safe' location

>> (USB drive). As this backup is old apart from the new kernel version it

>> won't contain anything wrong with the partition and my first task will be

>> to compare the files, which may cause my partition not to boot (menu.lst

>> etc) as well as the boot sector. I would expect to see changes and will

>> ignore the ones caused by the kernel updates (like new versions of the

>> vmlinuz- file).

>> 3. If I find such changes between the 'old' backup and the new one, which

>> prevent me from booting up the new partition then I will reverse them and

>> see if I can boot up.

The first two steps went off without a hitch. To my big surprise I did

NOT find any significant changes to the /boot partition. I've attached

'files.zip' which contains a few interesting files in it.

'boot_list.txt' and 'boot_grub_list.txt' lists the contents of my '/'

and '/grub' directories of the '/boot' partition. The only difference

between the old (backed up) and the new was file size and different

version numbers of the kernel files (vmlinuz, initrd and the like).

>> 4. If there are NO changes I could find (the least favourable option for

>> me as I will enter uncharted waters here!) then I would have no option,

>> but to run grub-install /dev/sda while within FC8 Live CD to restore GRUB

>> in the hope of getting GRUB to load. If I could then boot normally from

>> the hard disk then I would compare what has been done (both in terms of

>> files and the boot sector - bot on the /boot partition as well as the

>> absolute on /dev/sda) and see if I can find any differences. If not, well

>> ... it will remain a great mystery what really went wrong, sadly!

>>

Well, this was my almost last-chance saloon and given that

'grub-install' messed up my mbr completely (read below) I was VERY

reluctant to use it again to re-install GRUB, so after re-reading the

original article on how to re-install it (refer to my 1st email) coupled

with me getting nowhere with managing to boot from the CD via the GRUB

menu (instead of typing each and every grub command) I did the following:

[ ... ]

[ Thread continues here (3 messages/16.22kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 153 of Linux Gazette, August 2008

2-Cent Tips

2-cent Tip: Caching in the Shell

Amit k. Saha [amitsaha.in at gmail.com]

Wed, 16 Jul 2008 15:04:37 +0530

Hi Gang,

Just wanted to share this with you: (I am sure most of you already know this)

Shells cache the commands which you have executed so as to save the

time required to search your PATH every time you execute it. Type

'hash' in your terminal and you will see something like:

$ hash

hits command

1 /usr/bin/which

2 /usr/local/bin/log4cpp-config

1 /usr/bin/gnome-screensaver

Every time you execute a (non shell-builtin) command, it is

automatically added to the 'cache' and lives there for the rest of the

session- once you logout or even close the pseudo-terminal (like

gnome-terminal) it is gone.

Another observation, the most recently executed command is added to

the bottom of the list.

Happy to share!

I found this a good read:

http://crashingdaily.wordpress.com/2008/04/21/hashing-the-executables-a-look-at-hash-and-type/

-Amit

--

Amit Kumar Saha

http://blogs.sun.com/amitsaha/

http://amitksaha.blogspot.com

Skype: amitkumarsaha

Talkback: Discuss this article with The Answer Gang

Published in Issue 153 of Linux Gazette, August 2008

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

|

Contents:

|

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

(You have been warned!) A one- or two-paragraph summary plus a URL has a

much higher chance of being published than does an entire press release. Submit

items to [email protected].

News in General

Xandros Buys Rival Linspire

Xandros Buys Rival Linspire

At the end of June, Xandros acquired Linspire as a wholly-owned

subsidiary that it intends to manage as a separate division. Financial

information was not disclosed, even to Linspire's shareholders. (The

Board of Directors was fired last year by former CEO and current Board

chairperson Michael Robertson.) Other details on the acquisition are

available as an FAQ on the Linspire Web site:

http://www.linspire.com/lindows_news_pressreleases.php

Xandros expressed a commitment to maintain the open source "Freespire"

project, which is a free and redistributable version of Linspire. Also,

Linspire CEO Larry Kettler and other Linspire managers will be joining

the Xandros management team.

Major motives for the deal were providing Xandros with Linspire's

advanced CNR technologies and Linux expertise. It also enlarges the

Xandros customer base and support network. CNR is an automated update

technology and software delivery service that has helped popularize

Linspire (formerly called "Lindows") with former Windows users. Xandros

expects to incorporate CNR by the end of July.

"Products like the ASUS Eee PC have demonstrated the huge potential

market for Linux-based OEM netbook solutions, and CNR will help Xandros

make these platforms easy to enhance and maintain, while providing

on-demand delivery of a growing number of Linux applications, utilities,

games, and content", said Andreas Typaldos, Xandros CEO. "Linspire's CNR

technologies will speed up Xandros's expansion into the applications

space."

Former Linspire CEO Kevin Carmony has used his blog to discuss

potentially sordid aspects of the transaction, including charges that

Board Chairperson Michael Robertson may be trying to convert Linspire

assets to cash for personal use and also may be involved in rumored

Microsoft negotiations with AOL and Xandros. See:

http://kevincarmony.blogspot.com/2008/06/xandros-acquires-linspire-assets-in.html

Red Hat Voted "Greenest" Operating System

Red Hat Voted "Greenest" Operating System

A recent Network World comparison examined power consumption to determine

who had the "greener" operating system: Windows, Red Hat, and openSUSE.

Network World ran multiple power consumption tests using Red Hat Enterprise

Linux 5.1, SUSE Enterprise Linux 10 SP1, and Windows Server 2008 Enterprise

Edition on servers from Dell, IBM, and HP. Red Hat Enterprise Linux ranked

at the top in keeping power draw in check, pulling about 12 percent less

power than did Windows 2008 on identical hardware. This reduced power draw

was evident across testing in both performance and power saving modes, and

included all server models used in the testing.

From the summary at

http://www.linuxworld.com/research/2008/060908-green-windows-linux.html:

"We ran multiple power consumption tests... on four popular 1U server

machines, one each from Dell and IBM and two from HP. The results showed

that while Windows Server 2008 drew slightly less power in a few test

cases when it had its maximum power saving settings turned on, it was

RHEL that did the best job of keeping the power draw in check across the

board." The tests also showed that firmware updates and new drivers were

needed to take full advantage of newer chip-based power optimizations

such as CPU throttling.

Red Hat noted its involvement in projects focused on saving power.

Example of these efforts include http://www.LessWatts.org, an

Intel-sponsored project bringing together developers, users, and sysadmins

all in the effort to create a community around saving power on Linux. One

part of the LessWatts program is PowerTOP, a Linux tool that helps identify

programs that are power-hungry while a system is idle. PowerTOP technology

is also being applied to the kernel itself, where there is ongoing auditing

work to find pollers. The end result is a more efficient kernel.

Another area of development concerns support for CPU clock frequency

scaling. Clock scaling allows us to change the clock speed of a running CPU

on the fly. Red Hat Enterprise Linux 5.2 has support for Intel's Dynamic

Acceleration Technology, which provides power saving by quiescing idle CPU

cores, and also offers performance gains by potentially overclocking busy

cores within safe thermal levels. Red Hat worked close with AMD to prove

support for PowerNow! - a technology for power management. The new

tickless kernel provided in Fedora 9 is likely to move to Red Hat

Enterprise Linux in the future. This allows the kernel to properly idle

itself. Today, the kernel tick-rate is 1000/second, so it is hard to

quiesce it. The tickless kernel sets the system into a low-power state

based on knowledge of future timer-based events. Long, multi-second idle

periods are possible.

Kernel 2.6.26 Released with New Virtualization Support, KDB

Kernel 2.6.26 Released with New Virtualization Support, KDB

The new kernel, version 2.6.26, was released in mid-July, and adds

support for read-only bind mounts, x86 Page Attribute Tables, PCI

Express ASPM (Active State Power Management), ports of KVM to IA64, S390

and PPC, other KVM improvements including basic paravirtualization

support, preliminary support of the future 802.11s wireless mesh

standard, a new built-in memory tester, and a kernel debugger.

Although Linux has supported bind mounts, a sort of directory symlink that

allows sharing the contents of a directory in two different paths, since

kernel 2.4, a bind mount could not be made read-only. That changes with

2.6.25, with a more selective application of r/o properties for improved

security.

Also included are several file system updates, including IPv6 support

for NFS server export caches. IPv6 support also extends to TCP SYN

cookies.

Kernel 2.6.25 has improved USB and Infiniband support. This release adds

support for the OLPC XO hardware. This includes the ability to emulate the

PCI BARs in the kernel. This also adds functionality for running EC

commands and a CONFIG_OLPC. A number of OLPC drivers depend upon

CONFIG_OLPC. A forward-looking addition is the increase of maximum physical

memory size in 64-bit kernels from 40 to 44 bits, in preparation for future

chips that support larger physical memory sizes.

Conferences and Events

- Liferay Meetup 2008

- August 1, Los Angeles, CA

http://www.liferay.com/web/guest/about_us/events/liferay_2008/-/meetups/entry/943832

- LinuxWorld Conference

- August 4 - 7, San Francisco, California

http://www.linuxworldexpo.com/live/12/

- Agile Conference 2008

- August 4 - 8, Toronto, Canada

http://www.agile2008.org/

- Splunk Live Developer Camp - Free

- August 4, South Beach Harbor Center, San Francisco, CA

http://www.splunk.com/index.php/bootcamp08

- Splunk Live Southwest Tour 2008 - Free

- August 12 - Scottsdale, AZ

August 13 - San Diego, CA

August 14 - Los Angeles, CA

http://www.splunk.com/goto/splunklivesouthwest08

- Office 2.0 Conference 2008

- September 3 - 5, St. Regis Hotel, San Francisco

http://itredux.com/2008/07/03/office-20-conference-2008-registration/

- JSFOne Conference / Rich Web Experience - East

- September 4 - 6, Vienna, VA

http://www.jsfone.com/show_register_existing.jsp?showId=166

- Gartner Business Process Management Summit 2008

- September 10 - 12, Washington, D.C.

http://gartner.com/it/page.jsp?id=611409

- Summit on Virtualization and Security

- September 14, Marriott Hotel, San Francisco, CA

http://misti.com/default.asp?Page=65&ProductID=7508&ISS=24037&SID=690850

- IT Security World 2008

- September 15 - 17, Marriott Hotel, San Francisco, CA - Optional Workshops: September 13, 14, 17 & 18

http://misti.com/default.asp?Page=65&Return=70&ProductID=7154

- VMworld 2008

- September 16 - 18, Las Vegas, NV

http://www.vmworld.com

- Open Source in Mobile (OSiM) World

- September 17 - 18, Hotel Palace, Berlin, Germany

http://www.osimworld.com/newt/l/handsetsvision/osim08

- Oracle Develop and OpenWorld 2008

- September 21 - 25, San Francisco, CA

http://www.oracle.com/openworld/2008/develop.html

- Backhaul Strategies for Mobile Operators

- September 23, New York City

http://www.lightreading.com/live/event_information.asp

- Semantic Web Strategies Fall 2008

- October 5 - 7, Marriott Hotel, San Jose, CA

http://www.web3event.com/register.php

- Mobile Content Strategies 2008

- October 6 - 7, Marriott Hotel, San Jose, CA

http://www.mobilecontentstrategies.com/

- LinkedData Planet Fall 2008

- October 16 - 17, Hyatt, Santa Clara, CA

http://www.linkeddataplanet.com/

- EclipseWorld 2008

- October 28 - 30, Reston, VA

http://www.eclipseworld.net/

- OpenOffice.org Conference - OOoCon 2008

- November 5 - 7, Beijing, China

http://marketing.openoffice.org/ooocon2008

- Agile Development Practices 2008

- November 10 - 13, Orlando, FL

http://www.sqe.com/AgileDevPractices/

- ISPcon Fall 2008

- November 11 - 13, San Jose, CA

http://www.ispcon.com/future/f08_schedule.php

- DeepSec In-Depth Security Conference 2008

-

November 11 - 14, Vienna, Austria

DeepSec IDSC is an annual European two-day in-depth conference on

computer, network, and application security. In addition to the conference

with thirty-two sessions, seven two-day intense security training courses

will be held before the main conference. The conference program includes

code auditing, SWF malware, Web and desktop security, timing attacks,

cracking of embedded devices, LDAP injection, predictable RNGs and the

aftermath of the OpenSSL package patch, in-depth analysis of the Storm

botnet, VLAN layer 2 attacks, digital forensics, Web 2.0 security/attacks,

VoIP, protocol and traffic analysis, security training for software

developers, authentication, malware de-obfuscation, in-depth lockpicking,

and much more.

Distro News

openSUSE 11.0 goes GA

openSUSE 11.0 goes GA

The openSUSE Project announced the release of openSUSE 11.0 in June. The

11.0 release of openSUSE includes more than 200 new features specific to

openSUSE, a redesigned installer that makes openSUSE easier to install,

faster package management thanks to major updates in the ZYpp stack, and

KDE 4, GNOME 2.22, Compiz Fusion for 3D effects, and much more. Users have

the choice of GNOME 2.22, KDE 4, KDE 3.5, and Xfce. GNOME users will find

a lot to like in openSUSE 11.0. openSUSE's GNOME is very close to upstream

GNOME.

GNOME 2.22 in openSUSE 11.0 includes the GNOME Virtual File System

(GVFS), with support for networked file systems, PulseAudio,

improvements in Evolution, and Tomboy. openSUSE 11.0 includes a stable

release of KDE 4.0. This release includes represents the next generation

of KDE and includes a new desktop shell, called Plasma, a new look and

feel (called Oxygen), and many interface and usability improvements.

KControl has been replaced with Systemsettings, which makes system

configuration much easier. KDE's window manager, KWin, now supports 3-D

desktop effects. KDE 4.0 doesn't include KDEPIM applications, so the

openSUSE team has included beta versions of the KDEPIM suite (KMail,

KOrganizer, Akregator, etc.) from the KDE 4.1 branch that's in

development and scheduled to be released in July and for online update.

(KDE 3.5 is still available on the openSUSE DVD for KDE users who aren't

quite ready to make the leap to KDE 4.)

Applications also include Firefox 3 and OpenOffice.org 2.4. Get openSUSE

here: http://software.opensuse.org/

Gentoo 2008.0 Released

Gentoo 2008.0 Released

The Gentoo 2008.0 final release, code-named "It's got what plants

crave," contains numerous new features including an updated installer,

improved hardware support, a complete rework of profiles, and a move to

Xfce instead of GNOME. LiveDVDs are not available for x86 or AMD64,

although they may become available in the future.

Additional details include:

Improved hardware support: includes moving to the 2.6.24 kernel and many

new drivers for hardware released since the 2007.0 release.

Xfce instead of GNOME on the LiveCD: To save space, the LiveCDs switched

to the smaller Xfce environment. This means that a binary installation

using the LiveCD will install Xfce, but GNOME or KDE can be built from

source.

Updated packages: Highlights of the 2008.0 release include Portage

2.1.4.4, a 2.6.24 kernel, Xfce 4.4.2, gcc 4.1.2, and glibc 2.6.1.

Get Gentoo at: http://www.gentoo.org/main/en/where.xml

Elyssa Mint, Xfce Community Edition RC1 BETA 025 Released

Elyssa Mint, Xfce Community Edition RC1 BETA 025 Released

This is the first BETA release of the Xfce Community Edition for Linux

Mint 5, codename Elyssa, based on Daryna and compatible with Ubuntu Hardy

and its repositories.

MintUpdate was refactored and its memory usage was drastically reduced.

On some systems, the amount of RAM used by mintUpdate after a few days

went from 100MB to 6MB. Mozilla also greatly improved the memory usage

in Firefox between version 2 and 3.

Elyssa comes with kernel version 2.6.24, which features a brand new

scheduler called CFS (Completely Fair Scheduler). The kernel scheduler

is responsible for the CPU time allocated to each process. With CFS, the

rules have changed. Without proper benchmarks, it's hard to actually tell

the consequences of this change, but the difference in behavior is quite

noticeable from a user's point of view. Some tasks seem slower, but

overall the system feels much snappier.

Linux Mint 5 Elyssa is supported by CNR.com, which features commercial services and

applications that are not available via the traditional channels. The

Software Portal feature introduced in Linux Mint 4.0 Daryna is receiving

more focus, as it represents the easiest way to install applications. About

10 times more applications will be made available for Linux Mint 5

Elyssa.

Download Linux Mint from this URL: http://www.linuxmint.com/download.php

Software and Product News

MindTouch Deki Wiki releases OSSw Enterprise Collaboration Platform

MindTouch Deki Wiki releases OSSw Enterprise Collaboration Platform

MindTouch announced in July the newest version of its open source

collaboration and collective intelligence platform. MindTouch Deki

(formerly Deki Wiki) "Kilen Woods" now delivers new workflow

capabilities and new enterprise adapters to help information workers, IT

professionals, developers, and others connect disparate enterprise

systems and data sources.

At the Web 2.0 conference in April, MindTouch showcased the social

networking version of Deki Wiki - a free, open source wiki that offers

complete APIs for developers. MindTouch enabled users to hook in legacy

applications and social Web 2.0 applications for mashups, while enabling

IT governance across it all. Deki Wiki is built entirely on a RESTful

architecture, and engineered for concurrent processing.

Although there have been recent point releases updating the platform

unveiled at OSCon 2007, according to Aaron Fulkerson, co-founder and CEO

of MindTouch, this is an enterprise class release and a new platform for

Deki Wiki. "MindTouch Deki has evolved into a powerful platform that is

the connective tissue for integrating disparate enterprise systems, Web

services, and Web 2.0 applications, and enables real-time

collaboration... and application integration."

Fulkerson also told Linux Gazette, "MindTouch Deki

Enterprise offers adapters to widely-used IT and developer systems

behind the firewall and in the cloud, which allows for IT governance no

matter where your data lives. MindTouch Deki Enterprise is the only

wiki-based platform that offers this level of sophistication and

flexibility."

MindTouch Deki "Kilen Woods" addresses the needs of enterprise customers

by providing a wiki collaboration interface with a dozen enterprise

adapters to corporate systems and new Web services like SugarCRM,

Salesforce, LinkedIn, and WordPress, or apps like MySQL, VisiFire,

PrinceXML, ThinkFree Office, and more.

Besides these adapters, users have extensions to over 100 Web services

to create workflows, mashups, dynamic reports, and dashboards. This

allows for a collaborative macro-view of multiple systems in a common

wiki-like interface. "Previously we didn't have these workflow

capabilities," Fulkerson told Linux Gazette, "that has been

under development for most of this year..."

MindTouch Deki enables businesses to connect and mashup application and

data silos that exist across an enterprise and effectively fills the

collaboration gap across systems. For example, MT Deki can take content

from a database server and mash it up with other services, such as Google

Earth or Google maps, LinkedIn, or CRM systems.

Site administrators simply need to register selected external

applications, and business users can immediately create mashups, dynamic

reports, and dashboards that can be shared. For example, with the desktop

connector, users can simply drag and drop an entire directory to

MindTouch Deki, and the hierarchy will be automatically created as wiki

pages. Users can also publish an entire e-mail thread, complete with all

attachments, to MT Deki in a single click.

MindTouch Deki "Kilen Woods" was showcased at OSCON in July and is

available for download. Desktop videos of MT Deki in operation can be

seen at http://wiki.mindtouch.com/Press_Room/Press_Releases/2008-07-23

WINE 1.1.1 Released with Photoshop Improvements

WINE 1.1.1 Released with Photoshop Improvements

WINE 1.1.1 was released in mid-July. This was the first development

version since the release of WINE 1.0.

WINE 1.1.1 features installer fixes for Adobe Photoshop CS3 and

Microsoft Office 2007.

Get the source at: http://source.winehq.org/

Genuitec announces the general availability of Pulse 2.2

Genuitec announces the general availability of Pulse 2.2

Genuitec, a founding member of the Eclipse Foundation, announced general

availability of Pulse 2.2, a free way to obtain, manage, and configure

Eclipse Ganymede and its plugins. Pulse 2.2 will support full Ganymede

tool stacks.

Users are able to choose if they want to use the latest Eclipse Ganymede

release, stay on the Eclipse Europa stack, or utilize both versions in

separate tools configurations with Pulse. With this flexibility, users

will not hinder current preferences, projects, or environments, as well

as providing a great way to test new Ganymede features.

In addition to Ganymede support, Pulse 2.2 offers an expanded catalog

with hundreds of plugins to chose from. Unlike many distribution

products, Pulse 2.2 is multiple open source-license compliant.

Download Ganymede today at http://www.poweredbypulse.com/eclipse_packages.php.

Pulse 2.2 Community Edition is a free service and is available at

http://www.poweredbypulse.com.

Skyway Builder CE now GA

Skyway Builder CE now GA

Skyway Software announces the release and general availability of Skyway

Builder Community Edition (CE), the open-source version of our

model-centric tool for developing, testing, and deploying Rich Internet

Applications (RIAs) and Web services. The GA version of Skyway Builder

CE is available for immediate download.

Highlights of Skyway Builder CE include: -- Free and open-source (GPL

v3) -- Integrated into Eclipse -- Full Web Application Modeling --

Extensible models -- Build your own building blocks (custom steps) --

Seamless integration of custom Java code and models -- Runtime using

Spring Framework

New Canoo UltraLightClient for Faster Web Application Development

New Canoo UltraLightClient for Faster Web Application Development

Canoo announced in June the new release of its UltraLightClient (ULC), a

library to build rich Web interfaces for business applications.

Previously announced at JavaOne 2008, Canoo ULC simplifies the

development and deployment of Web applications. Canoo has recorded a

screencast showing how to develop a sample Web application using this

new release.

New features in ULC 08: - easy project setup using a wizard for Eclipse

- generates an end-to-end application skeleton from a predefined data

structure - high-level component to simplify the development of forms

- improved data types - sortable ULC tables by default - data binding

A new developer guide describes how to be productive from day one. The

release notes list the changes added to the current product version.

The Canoo development library includes user-interface elements such as

tabbed window panes, sortable tables, or drag and drop interactions and

keyboard shortcuts.

The final version of UltraLightClient 08 is available for download at

the Canoo product Web site. A license costs 1299 Euro per developer, and

includes free runtime distribution for any number of servers. A free

evaluation license may be obtained for 30 days. Contact Canoo for

further details on pricing and licensing.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

Making a Slideshow-type MPEG1 Video with GNU/Linux

By bhaaluu

This tutorial is for users who are interested in making an MPEG1 video using

GNU/Linux and Free Open Source Software. The process described is partly

automated with bash shell scripts, but otherwise still relies on handcrafting

the video frame by frame. You are expected to know how to compile and install

files from source code, use The Gimp, and use Audacity. The tutorial

will describe when these tools are to be used. A total of six frames are

used to make the video. One is a simple blank screen, filled with black.

Two frames require using The Gimp's Text Tool. Three images are used as

slides. The Gimp is used to scale those images to fit a specific image

size. Audacity is used to edit sound for the video.

Software Tools:

- ImageMagick

- mpeg2encode

- The Gimp

- Audacity

- ffmpeg

- ffplay

- bash shell scripts:

- make_mpeg2encode_parfile

- duplicateFile

- fade

From ImageMagic, the 'convert' and 'mogrify' utilities will be used. 'convert'

needs mpeg2encode in order to make an MPEG1 video. mpeg2encode can be obtained

from: http://www.mpeg.org/MPEG/MSSG/.

You'll need to compile and install it on your computer.

The Gimp is used to make the black screens, title screen, and credits screens.

Audacity is a sound editor, used to edit the sound clips for the video. Audacity

exports different audio file formats, such as WAV, MP3, or Ogg Vorbis.

ffmpeg is a computer program that can record, convert, and stream digital

audio and video in numerous formats.

ffplay is a video player.

Shell scripts are used to automate repetitive tasks, using variables and 'while'

loops. The make_mpeg2encode_parfile script creates a parameter file for the

mpeg2encode program.

In this tutorial, we'll make a slideshow-type video. Several slides will

fade in and fade out in sequence. An animation sequence could easily be

substituted for the slideshow.

It is very helpful to keep the video project organized, so the first thing to do

is create a new directory:

$ mkdir newVideo

Next, create several subdirectories in the newVideo/ directory:

[newVideo]$ mkdir 00black1001-1010 01title1011-1110 02video1111-1410 03credi1411-1510 04black1511-1520 05makeVideo originals

These subdirectories will each hold a different set of files. The numbers are

the number of frames for each set. This video will have a total of 520 frames.

The first ten frames will be black. The title screen will have 100 frames. The

video slideshow will have 300 frames. The credit screen will have 100 frames.

Finally, there will be ten frames of black at the end.

Use a digital camera to take several photos, then download the photos from the

camera to your computer. After the photos are on the computer, edit them in The

GIMP, scaling all the photos so they fit in a 640x480 image size. Fill in

any space around the image with black.

Notes:

- Many movie-making methods expect the image size in x and y to be in

multiples of 16. If not, depending on the version of mpeg_encode, they

will either resize the images, core dump, or complain and not create the

video.

- mpeg2encode expects the files to be numbered sequentially. A correct

numbering sequence is: file0000.jpg, file0001.jpg, ..., file1000.jpg. An

incorrect sequence is: file1, ..., file10, ..., file100 (because using

the wildcard symbol (*) will not sort them correctly).

- Even though the various MPEG encoders can generate movies with various

frame sizes, not all viewers can handle them. The best approach is

to stick with either 640x480 or 320x240.

- Keep your frames uncluttered, especially if you are creating a movie with

small frames (e.g. 320 x 240 movie).

- Avoid thin lines, dark blue lines on a black background, highly saturated

colors, small objects, etc.

- Start with a good image. Any compression scheme will degrade the quality

of the original.

- Don't create your frames using the GIF file format. GIF compresses the

color information to 256 colors. MPEG does not have this restriction.

These notes were taken from

this

HOWTO.

Save the digital photos as image0001.ppm, image0002.ppm, and image0003.ppm in

the newVideo/ directory. PPM is an uncompressed image file that works well with

mpeg2encode.

While you're in The GIMP, make a 640x480 blank image. Fill this with black and

save it as blackScrn.ppm. Next, create the title screen, using the Text tool.

Make the title screen with white letters on a black background and save it as

titleScrn.ppm. Finally, create a credits screen with a black background and

white letters, and save it as crediScrn.ppm.

This slideshow video will be made of these files:

blackScrn.ppm

titleScrn.ppm

image0001.ppm

image0002.ppm

image0003.ppm

crediScrn.ppm

The screens will be resized to 320x240, so make the text large enough to read

after the screens have been resized. To resize the images, use the convert

utility:

$ convert -size 640x480 blackScrn.ppm -resize 320x240 00blackScrn.ppm

$ convert -size 640x480 titleScrn.ppm -resize 320x240 01titleScrn.ppm

$ convert -size 640x480 image0001.ppm -resize 320x240 02image0001.ppm

$ convert -size 640x480 image0002.ppm -resize 320x240 02image0002.ppm

$ convert -size 640x480 image0003.ppm -resize 320x240 02image0003.ppm

$ convert -size 640x480 crediScrn.ppm -resize 320x240 03crediScrn.ppm

$ convert -size 640x480 blackScrn.ppm -resize 320x240 04blackScrn.ppm

Move these working files to their respective subdirectories - i.e.,

[newVideo]$ mv 00blackScrn.ppm 00black1001-1010/

[newVideo]$ mv 01titleScrn.ppm 01title1011-1110/

[newVideo]$ mv 02image000?.ppm 02video1111-1410/

[newVideo]$ mv 03crediScrn.ppm 03credi1411-1510/

[newVideo]$ mv 04blackScrn.ppm 04black1511-1520/

Move the remaining original files to originals/.

[newVideo]$ mv *.ppm originals/

The next step is to duplicate the various files in each directory. This is where

a bash shell script comes in handy.

#!/bin/bash

############################################################################

#name: duplicateFile

#date: 2008-06-20

############################################################################

if [ $# -ne 4 ]

then

echo "Usage: `basename $0` begin_range end_range filename duplicate"

exit 1

fi

# $1 is beginning of range

let num=$1

# $2 is end of range

while [ ${num} -le $2 ]

do

# $3 is filename

# $4 is duplicate

cp $3 $4${num}.ppm

num=$(($num+1))

done

############################################################################

If you put this script in your /home/bin directory, you will be able to execute

it from any directory you are in - assuming your /home/bin directory is in your PATH,

and you have made the file executable. To put your /home/user/bin directory in

your PATH, add this to the .bash_profile file in your home directory:

# set PATH so it includes user's private bin if it exists

if [ -d ~/bin ] ; then

PATH=~/bin:"${PATH}"

fi

Then make the script that is in the ~/bin directory, executable:

[bin]$ chmod u+x duplicateFile

This tutorial expects that the scripts are in the PATH.

Usage of this script requires the beginning and end numbers of the range of

numbers that you want to duplicate, the name of the original file you want to

duplicate, and the name of the duplicate file. The script adds the sequential

number to the name of the file, so all that is needed is the name.

Change to the 00black1001-1010/ subdirectory and execute this command:

[00black1001-1010]$ duplicateFile 1001 1010 00blackScrn.ppm frame

The script creates frame1001.ppm to frame1010.ppm.

Remove 00blackScrn.ppm.

[00black1001-1010]$ rm 00blackScrn.ppm

Change to the 01title1011-1110 subdirectory and repeat the steps you did above,

changing the numbers appropriately.

[00black1001-1010]$ cd ../01<TAB> (Use TAB completion.)

[00black1001-1010]$ duplicateFile 1011 1110 01titleScrn.ppm frame

[02video1111-1410]$ rm 01title.ppm

Next, change to the 02video1111-1410/ subdirectory and do the same thing to each

image file in the directory. Each file, in this case, will be duplicated 100x.

[01title1011-1110]$ cd ../02<TAB> (Use TAB completion.)

[02video1111-1410]$ duplicateFile 1111 1210 02image0001.ppm frame

[02video1111-1410]$ duplicateFile 1211 1310 02image0002.ppm frame

[02video1111-1410]$ duplicateFile 1311 1410 02image0003.ppm frame

[02video1111-1410]$ rm 02image0*.ppm

In the 03credi1411-1510/ directory:

[03credi1411-1510]$ duplicateFile 1411 1510 03crediScrn.ppm frame

[03credi1411-1510]$ rm 03crediScrn.ppm

In the 04black1511-1520/ directory:

[04black1511-1520]$ /duplicateFile 1511 1520 04blackScrn.ppm frame

[00black1001-1010]$ rm 04blackScrn.ppm

That completes making all the needed files for the slideshow video. The next

step is to make some transitions between the various screens. In this tutorial

you'll create a fade in/fade out effect. Here is the script that does it:

#!/bin/bash

############################################################################

#name: fade

#date: 2008-06-20

############################################################################

if [ $# -ne 3 ]

then

echo "Usage: `basename $0` in|out filename fileNumber"

exit 1

fi

if [ $1 = "in" ]

then

num=$3

count=1

while [ $count -le 25 ]

do

fadein=$(($count*4))

mogrify $2$num.ppm -modulate $fadein $2$num.ppm

count=$(($count+1))

num=$(($num+1))

done

fi

if [ $1 = "out" ]

then

num=$3

count=1

while [ $count -le 25 ]

do

fadeout=$(($count*4))

mogrify $2$num.ppm -modulate $fadeout $2$num.ppm

count=$(($count+1))

num=$(($num-1))

done

fi

############################################################################

These are the commands to do the work:

[newVideo]$ cd ../01title1011-1110/

[01title1011-1110]$ fade in frame 1011

[01title1011-1110]$ fade out frame 1110

[01title1011-1110]$ cd ../02video1111-1410/

[02video1111-1410]$ fade in frame 1111

[02video1111-1410]$ fade out frame 1210

[02video1111-1410]$ fade in frame 1211

[02video1111-1410]$ fade out frame 1310

[02video1111-1410]$ fade in frame 1311

[02video1111-1410]$ fade out frame 1410

[02video1111-1410]$ cd ../03credi1411-1510/

[03credi1411-1510]$ fade in frame 1411

[03credi1411-1510]$ fade out frame 1510

The above commands fade the title, each slide, and the credits in and out.

The black screens at the beginning and end of the video don't need the fade-in,

fade-out effect.

Now copy all the PPM files in each subdirectory to 05makeVideo/.

[newVideo]$ cp 00black1001-1010/*.ppm 05makeVideo/

[newVideo]$ cp 01title1011-1110/*.ppm 05makeVideo/

[newVideo]$ cp 02video1111-1410/*.ppm 05makeVideo/

[newVideo]$ cp 03credi1411-1510/*.ppm 05makeVideo/

[newVideo]$ cp 04black1511-1520/*.ppm 05makeVideo/

After copying the files, you should have 520 files in 05makeVideo/.

[newVideo]$ ls 05makeVideo/ | wc -l

520

This version of the tutorial relies on a new bash script which can be found at

this

website.

Change to 05makeVideo and run the make_mpeg2encode_parfile script. This script

makes the parameter file that mpeg2encode relies on.

[newVideo]$ cd 05makeVideo/

[05makeVideo]$ make_mpeg2encode_parfile frame*.ppm

The make_mpeg2encode_parfile script takes the names of the frames as a parameter

and creates the mpeg2encode.par file.

Next, edit mpeg2encode.par in your favorite plain text editor (vi, right?):

lines

2. frame%4d /* name of source files */

7. 2 /* input picture file format: 0=*.Y,*.U,*.V, 1=*.yuv, 2=*.ppm */

8. 520 /* number of frames */

9. 1 /* number of first frame */

13. 1 /* ISO/IEC 11172-2 stream (0=MPEG-2, 1=MPEG-1)*/

14. 0 /* 0:frame pictures, 1:field pictures */

15. 320 /* horizontal_size */

16. 240 /* vertical_size */

17. 8 /* aspect_ratio_information 1=square pel, 2=4:3, 3=16:9, 4=2.11:1 */

18. 3 /* frame_rate_code 1=23.976, 2=24, 3=25, 4=29.97, 5=30 frames/sec. */

Line 2 is the name of the source file, plus space for four numbers.

Line 7 is 2 because of the PPM files.

Line 8 should be the number of frames in the video.

Line 9 is the number of the first frame.

Line 13 is 1 because it is a MPEG1 video.

Line 14 is 0, for frame pictures.

Lines 15 and 16 are the size of the frames in the video.

Line 17 is 8. I don't know why. It works.

Line 18 is 3 for 25fps. This works well for the popular video upload sites.

Next, run mpeg2encode to make the video:

[05makeVideo]$ mpeg2encode mpeg2encode.par video.mpg

Don't worry about the overflow and underflow errors.

The video plays at 25 frames per second. There are 520 frames in the video, so

it lasts a little over 20 seconds. The first 4 seconds are the title screen. The

last 4 seconds are the credits screen. That leaves about 12 seconds of slideshow

screens.

Use Audacity to edit a sound file. You can make your own, or find a free sound

clip on the Internet and use it.

[05makeVideo]$ audacity your_sound_file.ogg

In Audacity, generate 4 seconds of silence before the slideshow, and 4 seconds

of silence after the slideshow. Audacity allows you to fade-in and fade-out the

sound, as well as copy-paste it, delete sections of it, and so forth. When

finished editing the sound file, export it as an Ogg Vorbis file, saved to

the 05makeVideo/ directory: sound.ogg

Finally, add the sound to the video with this command:

[05makeVideo]$ ffmpeg -i sound.ogg -s 320x240 -i video.mpg finishedVideo.mpg

It's time to watch the finished video!

[05makeVideo]$ ffplay finishedVideo.mpg

If you like it, create an account on YouTube, or another video site, upload your

video, and share it with the world!

You should now have a good idea of how to create your own customized slideshow-

type video, with fade-in, fade-out effects and sound, using free, open source

software.

Here is an example of this type of slideshow

video on YouTube.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/bhaaluu.jpg)

bhaaluu has been using GNU/Linux since first installing it on a PC in

late 1995. bhaaluu founded PLUG (Piedmont Linux Users Group) in 1997.

bhaaluu is a hobbyist programmer who enjoys shell scripting and playing

around with Python, Perl, and whatever else can keep his attention. So

much to learn, so little time.

Copyright © 2008, bhaaluu. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 153 of Linux Gazette, August 2008

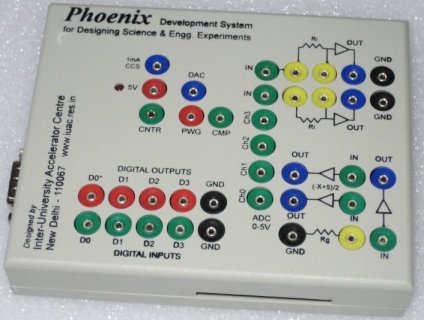

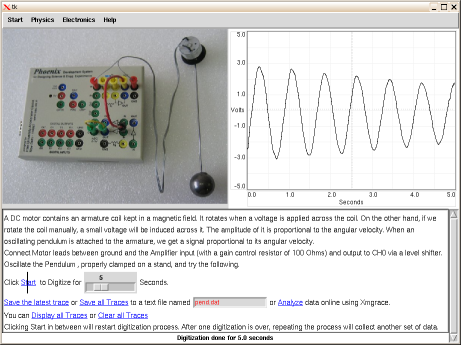

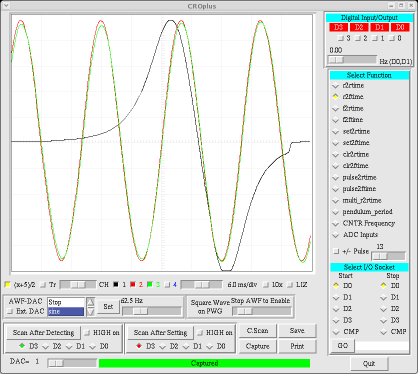

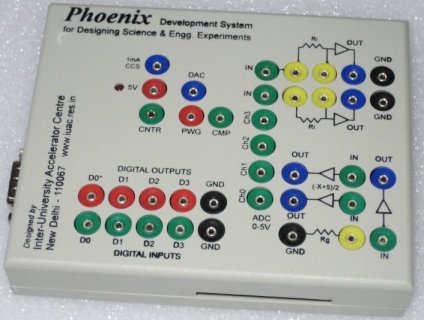

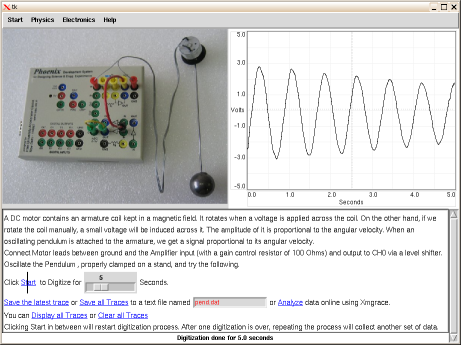

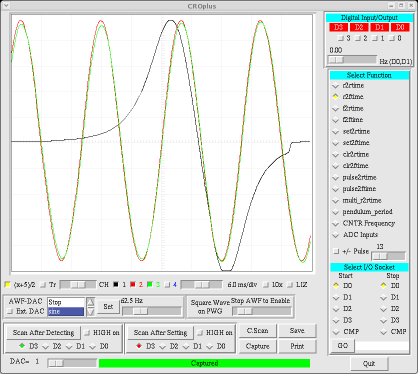

Recent Developments in the Phoenix Project

By Kishore A

Introduction

When you conduct experiments in modern Physics, the equipment involved is

often complicated, and difficult to control manually. Also, precise