...making Linux just a little more fun!

January 2010 (#170):

- Mailbag

- 2-Cent Tips

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- How to Start a Linux User Group, by Dafydd Crosby

- What's up with Google Public DNS?, by Rick Moen

- The Village of Lan: A Networking Fairy Tale, by Rick Moen

- A Quick Lookup Script, by Ben Okopnik

- The things I do with digital photography on Linux, by Anderson Silva

- The Thin Line Between "Victim" and "Idiot", by Ken Starks

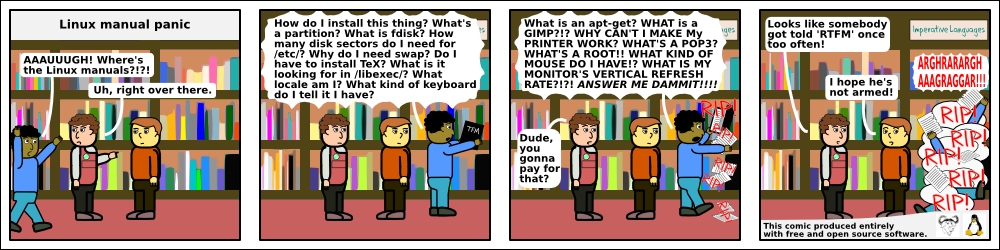

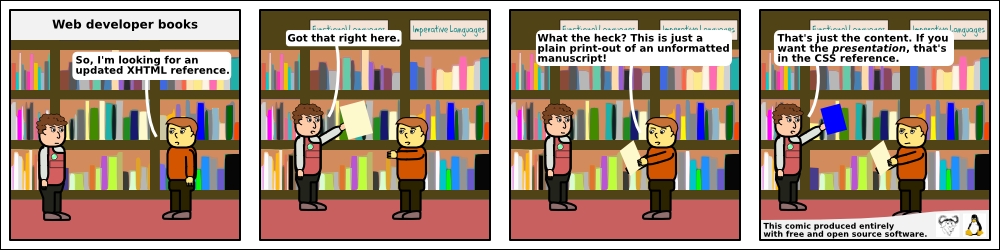

- HelpDex, by Shane Collinge

- XKCD, by Randall Munroe

- Doomed to Obscurity, by Pete Trbovich

- The Linux Launderette

- The Back Page, by Ben Okopnik

Mailbag

This month's answers created by:

[ Anderson Silva, Steve 'Ashcrow' Milner, Amit Kumar Saha, Ben Okopnik, Kapil Hari Paranjape, Karl-Heinz Herrmann, René Pfeiffer, Mulyadi Santosa, Neil Youngman, Paul Sephton, Raj Shekhar, Rick Moen, Suramya Tomar, Thomas Adam ]

...and you, our readers!

Our Mailbag

Multi-process CPU Benchmark

Deividson Okopnik [deivid.okop at gmail.com]

Thu, 5 Nov 2009 15:37:56 -0300

Hello everyone!

Im looking for a multi-process CPU benchmark to be run on linux (CLI

is even better), as I need to test how well a kerrighed cluster is

running. Anyone know of any of those programs?

Thanks

Deividson

[ Thread continues here (4 messages/2.54kB) ]

HOWTO kill client when Xserver dies?

=?ISO-8859-2?Q?Petr_Vav=F8inec?= [pvavrinec at snop.cz]

Tue, 15 Dec 2009 10:44:36 +0100

Hello gurus,

I run a diskless, keyboard-less pc that boots into X11 (just plain twm

as a window manager). The X clients I run remotedly, on a "database

server". When someone switches off the Xserver PC (i.e. flips that big

red switch), the X clients aren't killed - they remain on the "database

server" forever (or at least 24 hours, that's for me the same as

"forever"). Is there something that I could to to force them to die

automa[t|g]ically?

Thanks in advance,

Petr

[ Thread continues here (5 messages/5.55kB) ]

Hevea error

Amit Saha [amitsaha.in at gmail.com]

Mon, 7 Dec 2009 13:08:58 +0530

Hello TAG:

I know there are some 'hevea' users here. Could you help ?

I am trying to convert this TeX article of mine into HTML and I run into:

Giving up command: \text

Giving up command: \@hevea@circ

./octave-p2.tex:227: Error while reading LaTeX:

End of file in \mbox argument

Adios

The relevant lines in my LaTex file are:

226 \begin{align*}

227 Minimize f(x) = c^\text{T}x \\

228 s.t. Ax = b, x \geq 0 \\

229 where b \geq 0

230 \end{align*}

What could be the problem ? I am using version 1.10 from Ubuntu repository.

Thanks a lot!

Best Regards,

Amit

--

Journal: http://amitksaha.wordpress.com,

�-blog: http://twitter.com/amitsaha

[ Thread continues here (3 messages/4.48kB) ]

Ubuntu boot problems

Ben Okopnik [ben at linuxgazette.net]

Mon, 16 Nov 2009 18:49:55 -0500

Earlier today, I had a problem with my almost-brand-new Ubuntu 9.10

install: my netbook suddenly stopped booting. Going into "rescue" mode

showed that it was failing to mount the root device - to be precise, it

couldn't mount /dev/disk/by-uuid/cd4efbe9-9731-40a5-9878-06deff19af06

(normally, a link to "/dev/sda1") on '/'. When the system finally timed

out and dropped me into the "initramfs" environment/prompt, I did an

"ls" of /dev/disk/by-uuid - which showed that the link didn't exist.

Yoiks... had my hard drive failed???

I was quickly comforted by the existence of another device in there, one

that pointed to /dev/sda5, a swap partition on the same drive, but that

could still have meant damage to the partition table. I tried a few

things, none of which worked (i.e., rebooting the system would always

bring me back to the same point)... until I decided to create the

appropriate symlink in the above directory - i.e.,

cd /dev/disk/by-uuid

ln -s ../../sda1 cd4efbe9-9731-40a5-9878-06deff19af06

and exit "initramfs". The system locked up when it tried to boot

further, but when I rebooted, it gave me a login console; I remounted

'/' as 'read/write', then ran "dpkg-reconfigure -plow ubuntu-desktop",

the "one-size-fits-all" solution for Ubuntu "GUI fails to start"

problems, and the problem was over.

NOW, Cometh The REALLY Big Problem.

1) What would cause a device in /dev/disk/by-uuid to disappear? Frankly,

the fact that it did scares the bejeebers out of me. That shouldn't

happen randomly - and the only system-related stuff that I did this

morning was installing a few packages (several flavors of window manager

so I could experiment with the Ubuntu WM switcher.) I had also rebooted

the system a number of times this morning (shutting it down so I could

take it off the boat and to my favorite coffee shop, etc.) so I knew

that it was fine up until then.

2) Why in the hell did changing anything in the "initramfs" environment

- i.e., creating that symlink - actually affect anything? Isn't

"initramfs", as indicated by the 'ram' part, purely temporary?

3) What would be an actual solution to this kind of thing? My approach

was based on knowing about the boot process, etc., but it was part

guesswork, part magical hand-waving, and a huge amount of luck. I

still don't know what really happened, or what actually fixed the

problem.

I'd be grateful for any insights offered.

--

* Ben Okopnik * Editor-in-Chief, Linux Gazette * http://LinuxGazette.NET *

[ Thread continues here (12 messages/27.96kB) ]

Industrial PC booting issues

=?ISO-8859-2?Q?Petr_Vav=F8inec?= [pvavrinec at snop.cz]

Thu, 12 Nov 2009 11:35:44 +0100

Allmighty TAG!

first, sorry for a longish post. I'm almost at my wit's end.

I have an industrial PC, equipped with a touch screen and "VIA Nehemiah"

CPU (that behaves like i386). The PC has no fan, no keyboard, no

harddisk, nor flash drive - only ethernet card. It boots via PXE from my

database server. The /proc/meminfo on the PC says, that the PC has

MemTotal: 452060 kB. I'm booting the "thinstation"

(http://thinstation.sourceforge.net) with kernel 2.6.21.1. The PC uses

ramdisk. I boot Xorg with twm window manager. Then I start client of the

"Opera" browser on my database server and "-display" it on the X server

on the PC. This setup has worked flawlessly for a couple of months.

Then my end-users came with complains, that the PC doesn't boot anymore

after flipping the mains switch. For the moment, I have found following:

1. The PC sometimes doesn't boot at all. The boot process stops with

the message:

Uncompressing linux... OK, booting the kernel.

...and that's all. Usually, when I switch the PC again off and on, it

boots OK, I mean I don't change anything, just flip that big button.

2. When it really does boot all the way into X, the opera browser

isn't able to start properly. I tried to investigate further the matter.

I modified the setup, now I'm booting only into X+twm. This works.

Now I tried to run following test on my database server:

xlogo -display <ip_address_of_the_pc>:0

This works, the X logo is displayed on the screen of the PC.

Now I tried this test on the database server:

xterm -display <ip_address_of_the_pc>:0

Result is this error message on the client side (i.e. on the database

server):

xterm: fatal IO error 104 (Connection reset by peer) or KillClient

on X server "192.168.100.171:0.0"

...and the X server on the PC is really killed (I can't find him anymore

in the process list on the PC).

This is, what I have found in the /var/log/boot.log on the PC:

------------ /var/log/boot.log starts here ----------------------------

X connection to :0.0 broken (explicit kill or server shutdown).

/etc/init.d/twm: /etc/init.d/twm: 184: xwChoice: not found

twm_startup

twm: unable to open display ":0.0"

------------ /var/log/boot.log ends here ------------------------------

...and this is from /var/log/messages on the PC:

[ ... ]

[ Thread continues here (11 messages/16.28kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 170 of Linux Gazette, January 2010

2-Cent Tips

2-cent Tip: using class of characters in grep

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Wed, 16 Dec 2009 22:59:28 +0700

How do you catch a range or group of characters when using grep? Of

course you can always do something like:

$ grep -E '[a-z]+' ./test.txt

to show lines in file "test.txt" that contain at least single

character between 'a' to z in lower case.

But there is other way (and hopefully more intuitive...for some

people). Let's do something like above, but the other way around. Tell

grep to show the name of text files that doesn't contain upper case

characters

$ grep -E -v -l '[[:upper:]]+' ./*

Here, upper is another way of saying [A-Z]. Note the usage of double

brackets! I got trapped once, thinking that I should only use single

bracket and wonder why it didn't work....

There are more classes you can use. Check "info grep" in section 5.1

"Character class"

--

regards,

Mulyadi Santosa

Freelance Linux trainer and consultant

blog: the-hydra.blogspot.com

training: mulyaditraining.blogspot.com

[ Thread continues here (2 messages/2.35kB) ]

2-cent Tip: converting Festival phonesets to Flite

Jimmy O'Regan [joregan at gmail.com]

Tue, 1 Dec 2009 03:29:45 +0000

Obviously, this isn't going to be everyone's cup of tea, but it would

annoy me if I ever have to repeat this, or had to tell someone 'oh, I

did that once, but forgot the details'.

Festival is a fairly well known as a speech synthesiser, but it's

generally better suited as a development platform for voice and

language data; Flite is a lightweight TTS system that uses data

developed with Festival. Flite has some scripts to help the process,

but they're either undocumented or misleadingly documented.

One such script is tools/make_phoneset.scm: the function synopsis

gives 'phonesettoC name phonesetdef ODIR', but the actual synopsis is

'phonesettoC name phonesetdef silence ODIR', where 'name', 'silence'

and 'ODIR' are strings, 'phonesetdef' is a Lisp list of features. I

don't know Scheme or Lisp all that well, so I eventually got it to

work by copying the phoneset to a file like this (myphones.scm):

(phonesettoC "my_phoneset"

'(defPhoneSet

my_phoneset

;;; Phone Features

(

(vc + -)

(vlng s l d a 0)

(vheight 1 2 3 -)

(vfront 1 2 3 -)

(vrnd + -)

(ctype s f a n l t 0)

(cplace l a p b d v 0)

(cvox + -)

)

(

(# - 0 - - - 0 0 -) ;this is the 'silence' phonedef

(a + l 3 2 - 0 0 -)

; snip

)

)

(PhoneSet.silences "#")

"outdir")

and converting it with:

mkdir outdir && festival $FLITEDIR/tools/make_phoneset.scm myphones.scm

leaves a C file suitable for use with Flite in outdir

1 down, ~12 scripts left to figure out...

--

<Leftmost> jimregan, that's because deep inside you, you are evil.

<Leftmost> Also not-so-deep inside you.

2-cent Tip: avoid re-downloading full isos

Rick Moen [rick at linuxmafia.com]

Tue, 1 Dec 2009 11:40:54 -0800

----- Forwarded message from Tony Godshall <[email protected]> -----

Date: Tue, 1 Dec 2009 10:30:26 -0800

From: Tony Godshall <[email protected]>

To: [email protected]

Subject: [conspire] tip: avoid re-downloading full isos

If you have an iso that fails checksum and a torrent is available,

rtorrent is a pretty good way to fix it.

cd /path/to/ubuntu910.iso && rtorrent http://url/for/ubuntu910.iso.torrent

The reason torrent is good for this is that it checksums each block and

fetches only the blocks required.

Tony

conspire mailing list

[email protected]

http://linuxmafia.com/mailman/listinfo/conspire

----- End forwarded message -----

Talkback: Discuss this article with The Answer Gang

Published in Issue 170 of Linux Gazette, January 2010

News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff

|

Contents:

|

Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than does an entire press release. Submit

items to [email protected]. Deividson can also be reached via twitter.

News in General

New Novell Collaboration Platform for E2.0 Links to Google Wave

New Novell Collaboration Platform for E2.0 Links to Google Wave

In early November, Novell announced its real-time collaboration

platform, Novell Pulse. Pulse combines e-mail, document authoring, and

social messaging tools with robust security and management

capabilities.

Novell Pulse was demonstrated on November 4, 2009, during the

"Integrating Google Wave into the Enterprise" keynote at Enterprise 2.0 in

San Francisco. Beta availability will be early 2010, followed by

general availability in the first half of 2010 via cloud deployment. Novell

Pulse interacts with Google Wave through Wave's federation protocol,

letting Novell Pulse users communicate in real-time with users on any

other Wave provider.

"We designed Google Wave and its open federation protocol to help

people collaborate and communicate more efficiently", said Lars

Rasmussen, software engineering manager for Google Wave. "We are very

excited to see Novell supporting Wave federation in its innovative

Novell Pulse product."

"Security and identity management remain top concerns for

companies considering real-time social solutions", said Caroline Dangson,

analyst at IDC. "Novell has a proven record in security and identity

management, an important strength for its new collaboration platform."

Key features draw on best practices for e-mail, instant messaging,

document sharing, social connections, real-time co-editing, and enterprise

control. These include single sign on, identity management, editable

on-line documents, and a unified inbox.

For more information on Novell Pulse, visit http://www.novell.com/products/pulse/.

ECMA Proposes Revised JavaScript Standard

ECMA Proposes Revised JavaScript Standard

The future of JavaScript - at least for the next 3 years - will be

determined by ECMA's General Assembly in early December. At that

time, ECMA's membership will vote on the ECMAScript 3.1 proposal, a

technology compromise from ECMA's contentious Technical Committee 39.

Factions within TC39 worked on JavaScript revision for several years,

but AJAX's success upped the stakes for v. 4.0 - which is far from

complete - so a smaller set of improvements went into the 3.1 draft.

However, both Adobe and IBM are dissatisfied, and are lobbying to defeat

it.

Doug Crockford, who represents Yahoo on TC39, delivered a keynote on

the draft spec and its importance recently, at QCon in San Francisco.

Doug explained that the sudden explosion in use of JavaScript (formally

called ECMAScript) - both upped the stakes on revisions and intensified

risks. One example is an on-going problem with representing decimal

numbers. Original JavaScript can represent small and large integers,

but often gives erroneous results for decimal arithmetic (e.g., 0.1 +

0.2 != 0.3).

IBM has proposed a revision ("754-r") to the 1970s-era IEEE 754 spec

for floating point processing. However, this change would result in

significant performance penalties on arithmetic unless implemented in

silicon, which IBM has already done in Power CPUs. Speaking with

Linux Gazette, Doug said IBM may be attempting to increase the

value of its Power servers, or may be encouraging Intel to modify its own

chips.

The committee is also discussing a longer-term set of changes to

JavaScript / ECMAScript, with a working title of Harmony. Some additions

could include enhanced functions and inclusion of a standard crypto

library. However, many of those changes could break existing Web

applications; implementations will have to do as little damage as

possible.

Conferences and Events

- 10th ACM/USENIX International Middleware Conference

-

November 30 - December 4, Urbana Champaign, IL

http://middleware2009.cs.uiuc.edu/.

- Gartner Data Center Conference 2009

-

December 1 - 4, Caesar's Palace, Las Vegas

http://www.gartner.com/it/page.jsp?id=851712.

- FUDCon 2009

-

December 5 - 7, Toronto, Canada

http://fedoraproject.org/wiki/FUDCon:Toronto_2009.

- 5th Digital Living Room Summit

-

December 15, Santa Clara, CA

http://www.digitallivingroom.com/.

- MacWorld 2010

-

February 9 - 13, 2010, San Francisco, CA - Priority Code - MW4743

http://www.macworldexpo.com/.

- SCALE 8X 2010

-

February 19 - 21, 2010, Los Angeles, CA

http://www.socallinuxexpo.org/scale8x/.

- 8th USENIX Conference on File and Storage Technologies

-

February 23 - 26, 2010, San Jose, CA

http://usenix.org/events/fast10/.

- ESDC 2010 - Enterprise Software Development Conference

-

March 1 - 3, 2010, San Mateo Marriott, San Mateo, CA

http://www.go-esdc.com/.

- RSA 2010 Security Conference

-

March 1 - 5, 2010, San Francisco, CA

http://www.emc.com/microsites/rsa-conference/2010/usa/.

- Enterprise Data World Conference

-

March 14 - 18, 2010, Hilton Hotel, San Francisco, CA

http://edw2010.wilshireconferences.com/.

- Cloud Connect 2010

-

March 15 - 18, 2010, Santa Clara, CA

http://www.cloudconnectevent.com/.

Distro News

Fedora 12 Released in November

Fedora 12 Released in November

The Fedora Project, Red Hat's community-supported open source group,

announced availability of Fedora 12, the latest version of its free,

open-source distribution. The new release includes robust features for

desktop users, administrators, developers, and open source enthusiasts.

Enhancements include next-generation Ogg Theora video, virtualization

improvements, and new capabilities for NetworkManager.

"Fedora always tries to include cutting-edge features in its

distribution. We believe Fedora 12 stays true to this pattern, packing

a lot of punch across its feature set", said Paul Frields, Fedora Project

Leader at Red Hat. "Our community of global contributors continues to

expand, with 25 percent growth in Fedora Accounts since

Fedora 11. We've also seen more than 2.3 million installations of Fedora

11 thus far, a 20 percent increase over earlier releases."

Notable feature enhancements:

- Theora 1.1, enhancing free video's quality to better meet user

expectations. Ogg Theora video delivers crisp, vibrant media, letting

users stream and download high-definition video, while using 100 percent

free and open software, codecs, and formats.

- NetworkManager, a network configuration tool, has been improved to

make it easier for users to be online using mobile broadband, to

configure servers, and to handle other special situations.

- Virtualization improvements include better virtual disk performance

and storage discovery, hot changes for virtual network interfaces,

reduced memory consumption, and modern network booting infrastructure.

Other improvements are PackageKit command-line and browser plugins;

better file compression; libguestfs, which lets administrators work

directly with virtual guest machine disk images without booting those

guests; SystemTap 1.0, to help developers trace and gather information

for writing and debugging programs, as well as integration with the

widely-used Eclipse IDE; and NetBeans 6.7.1.

The Fedora community held fifteen Fedora Activity Day events across

the globe in 2009. These are mini-conferences where Fedora experts work

on a specific set of tasks, ranging from developing new features and Web

applications to showing contributors how to get involved by working on

simple tasks. During that same period, the Project also held three

Fedora Users and Developers Conferences (FUDCons), in Boston, Porto

Alegre, and Berlin.

The next FUDCon is scheduled for December 5 - 7, 2009, in Toronto,

Ontario, Canada. For more information about FUDCon Toronto 2009, visit http://fedoraproject.org/wiki/FUDCon:Toronto_2009.

For more information about the Fedora Project, visit

http://www.fedoraproject.org.

New Knoppix 6.2 Released

New Knoppix 6.2 Released

"Microknoppix" is a complete rewrite of the Knoppix boot system from

version 6.0 and up, with the following features:

- High compatibility with its Debian base: Aside from

configuration files, nothing gets changed in Debian's standard

installation.

- Accelerated boot procedure: Independently from the usual SysV

bootscripts, multiple tasks of system initialization are run in

parallel, so the user reaches an interactive desktop very quickly.

- LXDE, a very slim and fast desktop with short start time and

low resource requirements, is the desktop environment. Because of

its GTK2 base, LXDE works together nicely with screenreader Orca.

- Installed software in this first CD version has been greatly

reduced, so that custom CD remasters are again possible. The

DVD edition offers KDE4 and GNOME, with many applications

originating from these popular desktops.

- Network configuration is handled by NetworkManager with

nm-applet in graphics mode, and by textual GUIs in text mode

(compatible with Debian's /etc/network/interfaces specification).

- A persistent image for saving personal settings, and additionally

installed programs, KNOPPIX/knoppix-data.img, is supported on the

boot device, optionally encrypted using 256-bit AES. In general,

Knoppix recommends live system installation on flash.

The current 6.2 release has been completely updated from Debian

"Lenny".

Also included is A.D.R.I.A.N.E. (Audio Desktop Reference Implementation

And Networking Environment), a talking menu system, which is supposed to

make work and Internet access easier for computer beginners.

The current public 6.2 beta (CD or DVD) is available

in different variants at the Knoppix Mirrors Web site

(http://www.knopper.net/knoppix-mirrors/index-en.html).

Software and Product News

Intalio Announces Jetty 7

Intalio Announces Jetty 7

Intalio, Inc., has released Jetty 7, a lightweight open source

Java application server. The new release includes features

to extend Jetty's reach into mission-critical environments, and

support deployment in cloud computing.

Jetty 7 brings performance optimizations to enable rich Web

applications. Thanks to small memory footprint, Jetty has become the

most popular app server for embedding into other open source projects,

including ActiveMQ, Alfresco, Equinox, Felix, FUSE, Geronimo,

GigaSpaces, JRuby, Liferay, Maven, Nuxeo, OFBiz, and Tungsten. Jetty is

also the standard Java app server in cloud computing services, such as

Google's AppEngine and Web Toolkit, Yahoo's Hadoop and Zimbra, and

Eucalytpus.

This is the first Jetty release developed in partnership with

Eclipse.org, where the codebase has gone through IP auditing, dual

licensing, and improved packaging for such things as OSGi integration.

In addition, Jetty 7 makes some of the key servlet 3.0 features, such as

asynchronous transactions and fragments, available in a servlet 2.5

container.

Jetty provides both asynchronous HTTP server and asynchronous HTTP

client, thereby supporting both ends of protocols such as Bayeux, XMPP,

and Google Wave, that are critical to cloud computing — and can be

embedded in small and mobile devices: Today, Jetty runs on J2ME and

Google Android, and is powering new smartphones from Verizon and other

manufacturers soon to start production.

"Jetty 7 significantly reorganizes packaging and jars, and makes

fundamental improvements to the engine's underlying infrastructure",

said Adam Lieber, General Manager at IntalioWorks.

The Jetty distribution is available via http://eclipse.org/jetty under

the Apache 2.0 or Eclipse 1.0 licenses, and covered by the

Eclipse Foundation Software User Agreement. The distribution furnishes

core functionality of HTTP server, servlet container, and HTTP client.

More information and technical blogs can be found at http://www.webtide.com/.

One Stop Systems Introduces First GPU/SSD 2U Server

One Stop Systems Introduces First GPU/SSD 2U Server

One Stop Systems, Inc. (OSS), a manufacturer of computer modules and

systems, announced the first server to integrate an AMD-based

motherboard featuring dual "Istanbul" processors and eight GPU boards

providing 10 TeraFLOPS computing power. The server can also accommodate

a combination of GPU boards and SSD (solid state drive) boards.

Four GPU boards and four 640GB SSD boards provides 5TFLOPS GPU

processing and 2.5TB memory, in addition to the dual six-core CPUs'

computing power. In addition, OSS has packed even more storage capacity

into the system, with four hot-swappable hard disk drives and an internal

RAID controller. The server is powered by dual, redundant 1,500 watt

power supplies and housed in a 2U-high chassis tailored for rigorous

environmental demands.

"The Integrated GPU Server represents a combination of One Stop

Systems's areas of expertise; designing and manufacturing

rugged, rackmount computers and PCI Express design", said Steve Cooper,

CEO. "At the heart of this server is the dual Opteron processor board.

With a motherboard based on the SHBe (System Host Board Express) form

factor for industrial computing, it's built for long-life service.

Then, we employed multiple PCIe switches to provide the 80Gb/s bandwidth

to each slot. Using the latest AMD-based GPU boards, we produce

greater than 10 TFLOPS of computing power. The system is then powered

by dual redundant 1,500 watt power supplies with ample cooling."

The system supports two six-core Opteron processors and up to 96GB

DRAM in six DDR2 DIMM slots, plus dual gigabit Ethernet ports, four USB,

PCI Express 2.0, and ATI Radeon graphics. It supports four SATAII/SAS

drives, and includes an LSI RAID controller.

The system can be configured to provide eight PCIe x16 Gen 2 slots for

single-wide I/O cards, or four slots for double-wide cards. While GPUs

offer the greatest amount of compute power, customers who want to mix

other cards in the system can do so. A common example is to install

two or four GPU boards with four SSD (solid state drive) boards.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of the next month's NewsBytes

Events.

How to Start a Linux User Group

By Dafydd Crosby

The Linux User Group (also known as a LUG) has been one of the

cornerstones of Linux advocacy ever since its first inception. Bringing

together local Linux lovers, it is the grassroots of Linux - where

neighbours and other townfolks can sit around a table and discuss the best

way to compile the kernel for their machines, or how to get their Slackware

network devices going again. It is also a high bandwidth way to share

knowledge - if you've ever heard of the sneaker-net, you'll

appreciate the advantages of being in a room full of real people who share

your interest in open-source software.

Chances are good that you are already in an area that has a local LUG,

and a simple search online should be able to confirm it. However, if you happen

to be in an area where none currently exists, the process of actually

setting up a group is very easy.[1]

Setting up a group

Your group will be ready to go once you've got a basic framework in place:

- A Web site - This part is easier to do

than ever before. Linux.com allows you

to set up a group page in a simple, straight-forward fashion. It's a lot

like a social network, so other members can easily join and keep informed

of events.

- A mailing list - This is the pulse of

your group, where interested people can ask questions and swap info. With a

simple mailing list, you can coordinate your efforts with other group

members, discuss future meeting ideas, and keep the blood flowing in the

group.

- A meeting spot - Find a spot (preferably a

public one) that is quiet enough to allow speakers and presentations, but

large enough to hold however large your group is. Location is key! Some

good spots that are often free (or very cheap) are schools and university

campuses. You'll want an Internet connection and power sockets, so keep

that in mind when looking for a place.

The first two items shouldn't take longer than a couple of hours to set

up, and will only need minimal maintenance after that. The meeting spot

will take a little longer, but, with the collective effort of your group

(communicating through the mailing list) it shouldn't be too difficult.

Now it's just a matter of getting a few people interested. It's alright

if the first members are your friends - that's how the ball gets rolling!

The LUG will take on a life of its own, once you have outsiders coming in,

so find ways of getting the word out around town. Postering universities

and cafes (be sure to ask permission) is a great way to get other

like-minded folks to check out your Web site and meetings.

Having fun and keeping the LUG interesting

Keeping things interesting isn't something that is easily done

spontaneously, so you'll want to keep note of ideas for presentations and

events for future use. It's essential to keep the creative pump primed,

otherwise it will be hard keeping members coming to meetings.

Fortunately, your members will be a great resource in letting you know

what they're interested in - after all, they want to have fun, too! When

someone is bursting with passion over the new distro he or she just installed,

that enthusiasm can be infectious. Encourage this passion, because these

people will help keep the group moving along.

Occasionally, there's the meeting where a talk or the scheduling has

fallen through. This is a great time to be generating ideas and keeping

those creative juices flowing. Keeping the meet-ups consistently fun and

engaging will keep everyone showing up time and time again.

Reaching out to the community

The greatest benefits of a LUG is what it can do for the local community.

It can be donating Linux-loaded machines to non-profit groups, install-fests

to help people start using Linux, or connecting people from different

technology groups who wouldn't normally interact.

One of the beauties of Linux and F/OSS software is that there are many

ways that people from different technology backgrounds and groups can

contribute. Java programmers, hardware hackers, and theoretical scientists

can all sit at the same table and meet common ground at a LUG, when given a

good topic to think over. Therefore, keep connected with all the local

groups which could potentially have a few Linux users (or future Linux

users).

The long road ahead

A lot of groups get side-tracked by things like dues, group bylaws, and

other organizational structures that are necessary to keep the group viable

year-to-year. Transparency is key, but don't let the meetings get bogged

down in administrative matters - keep it short, keep it simple, and keep it

civil. If an issue can be relegated to the mailing list, that's the best

spot for it. That way everyone has a chance to speak up, and it's all

available on the public record.

Starting a LUG is a rewarding experience, and can be a great experience

for everyone involved. With so many successful groups running now, it has

never been easier to start your own, so start organizing one today!

Resources

Wikipedia entry

on Linux User Groups

UniLUG

The Linux

Documentation Project's LUG HOWTO

[1] Rick Moen comments:

My rule of thumb in the LUG HOWTO, and other writings

on the subject, is that a successful LUG seems to need a core of at least

four active members -- who need not be particularly knowledgeable.

As you say, not a lot else is strictly necesssary.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/crosby.jpg)

Dafydd Crosby has been hooked on computers since the first moment his

dad let him touch the TI-99/4A. He's contributed to various different

open-source projects, and is currently working out of Canada.

Copyright © 2010, Dafydd Crosby. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 170 of Linux Gazette, January 2010

What's up with Google Public DNS?

By Rick Moen

A few days ago, out of nowhere, Google, Inc. announced that it was

offering a new free-of-charge service to the public: robust recursive

DNS nameservice from highly memorable IP addresses 8.8.8.8 and

8.8.4.4, with extremely fast responses courtesy of anycast IP

redirection to send the query to the closest network cluster for that

service (like what Akamai and similar CDNs do for Web content).

This service needs some pretty considerable funding for hardware and

bandwidth, especially if its usage scales upwards as Google intends.

Reportedly, it also developed a totally new recursive-only nameserver

package, name not disclosed, in-house. According to my somewhat casual

research, the software was probably written by Google developer Paul S.

R. Chisholm, who doesn't seem to have any prior record of writing such

software.

That is remarkable, to begin with, because recursive nameservers are

notoriously difficult to write correctly, such that the history of open

source DNS servers is littered with failed recursive-nameserver

projects. (By contrast, the other types, authoritative, iterative, and

forwarder, are much easier to write. Please see my separate article, "The Village of Lan, A Networking Fairy Tale", about the

differences.)

The obvious question is, what's in it for Google? At first, I guessed

it to be a research/prestige project, an opportunity for Chisholm

and other technical staff to try their hand at managing major network

infrastructure. It also already serves as DNS foundation for some

internal Google services, such as the WiFi network for visitors to the

Google campus, and for the free public WiFi network Google offers to

residents of Mountain View, California, near its headquarters.

That didn't really seem sufficient to justify the outlay required in

expenses and technical resources, though.

My friend Don Marti suggested an alternative idea that sounds more

promising: It's a "spamtenna", a distributed data-collection system that

classifies DNS clients: those doing high numbers of DNS lookups of

known spam-related hostnames, and regular DNS clients that don't. Then,

other hostnames resolved more often by the first group than the second

are noted as also probably spam-involved. Google can use that

database of suspect hostnames in anti-spam service, to aid GMail

antispam, etc., a usage model consistent with Google's privacy policy.

Is Public DNS an attractive offering? Does it have privacy problems? Yes and

no.

For most people, probably even most Linux Gazette readers,

DNS is just something automatically taken care of, which means it tends

to get referred out to the local ISP's recursive nameservers. I

shouldn't be unkind to ISPs, who do their best, but ISP recursive

servers tend to be pretty bad. In general, they have

spotty performance, often play tricks such as redirecting queries or

ignoring time-to-live timeouts on DNS data, are security liabilities (on

account of pervasive "cache poisoning" by malign parties elsewhere on

the Internet), and of course are an obvious point where data collects

about all of your DNS queries -- what you and your users ask about, and

what is replied. Of course, your ISP already sees all all your incoming

and outgoing packets -- but, unlike Google, are contractually obliged to

deal with you in good faith and look after your interests.

That is one of the problems with Google's Public DNS offering, and,

likewise, with its other free-of-charge services such as GMail (and, for

that matter, with pretty much any unpaid "Web 2.0" service): Any

contractual commitment to users is either non-existent or extremely weak.

They say they have a strong privacy policy, with information about which of

your IP addresses queried their servers being erased after 24-48 hours, but

the fact is that you're just not a paying customer, so policies don't mean

much, completely aside from the possibility of them being changed on a

whim.

Another problem I've already alluded to: What software does it use, and

is it reliable and correct? Google is telling the public nothing about

that software, not even its name, except that it was developed in-house

and is not to be open source in the foreseeable future. Most of the

really good recursive nameserver packages were developed as open source

(in part) to get the benefit of public debugging and patches. The rest

took a great deal of time and money to get right.

One common comparison is to David Ulevich's OpenDNS free recursive

service, which is competently run and keeps users away from

malware/phishing hostnames. Unless you use the paid version, however,

OpenDNS deliberately breaks Internet standards to return the IP of its

advertising Web server whenever the correct answer is "host not found"

(what is called NXDOMAIN in DNS jargon). Google Public DNS doesn't

suffer the latter problem.

A third alternative: None of the above. Run your own recursive

nameserver. For example, NLnet Labs's open-source Unbound is very easy

to set up, requires no maintenance or administration, takes only about

11 MB RAM, and runs on Windows, Linux, or Mac OS X. It would be silly

to run such a network service on every machine: Instead, run

it on one of the machines of your home or office's LAN, and have all of

the machines' DNS client configurations -- /etc/resolv.conf on Linux

machines -- point to it. That way, there's a single shared cache of

recently queried DNS data shared by everyone in the local network.

(Note: Most people have their machines' "resolv.conf" contents, along

with all other network settings, populated via DHCP from a local network

appliance or a similar place, and configure that to point clients' DNS to

the nameserver machine's IP. For that reason, the nameserver machine

should be one to which you've assigned a static IP address, and left

running 24/7.

Hey, OpenWRT wireless-router

firmware developers! Make me happy and put Unbound into OpenWRT, rather

than just Dnsmasq, which is a mere forwarder, and Just Not Good Enough.

Dnsmasq forwarding to Unbound, however, would be really good, and

putting recursive DNS in the same network appliance that hands out DHCP

leases is the ideal solution for everyone. Thanks!)

It seems that hardly anyone other than us networking geeks bothers to

have real, local nameservice of that sort -- a remarkable example of

selective blindness, given the much snappier network performance, and

greater security and control, that doing it locally gains you for almost

no effort at all.

In fact, what's really perplexing is how many people, when I give

them the above suggestion, assume either that I must be exaggerating the

ease of running a recursive nameserver, or that "professional" options

like their ISP, OpenDNS, or Google Public DNS must actually be faster

than running locally, or both. Let's consider those: (1) When I say

there's nothing to administer with recursive nameserver software, I mean

that quite literally. You install the package, start the service, it

runs its cache by itself, and there are no controls (except to run it or

not). It's about as foolproof as network software can get. (2) When

your DNS nameserver is somewhere on the far side of your ISP uplink,

then all DNS queries and answers must traverse the Internet both

ways, instead of making a local trip at ethernet speeds. The only

real advantage a "professional" option has is that its cache

will be already well stocked, hence higher percentage of cache hits

relative to a local nameserver when the latter first starts, but then

there will be essentially no difference and the local service will

thereafter win because it's local.

Why outsource to anyone, when you can do a better job locally, at

basically no cost in effort?

Recursive Nameserver Software for Linux

BIND9: Big, slow, kitchen-sink package that does recursive

and authoritative service, and even includes a resolver library (DNS

client lib).

http://www.isc.org/index.pl?/sw/bind/

Licence: Simple permissive licence with warranty disclaimer.

dnscache (from djbdns): Fast, threaded, secure, needs

to be updated with various packages, which you can get in one of four

downstream maintained variants of the 2001 abandonware djbdns package:

zinq-djbdns, Debian djbdns, RH djbdns, or LolDNS.

http://cr.yp.to/djbdns.html,

but also see my links to further information at

http://linuxmafia.com/faq/Network_Other/dns-servers.html#djbdns

Licence: Asserted to be "public domain".

MaraDNS: general-purpose, fast, lightweight,

authoritative, caching forwarder, and recursive server, currently being

rewritten to be even better.

http://www.maradns.org/

Licence: Two-clause BSD licence.

PowerDNS Recursor: Small and fast. Has been a little

slow in fixing possible security risks, e.g., didn't take measures

against the ballyhooed vulnerability Dan Kaminsky spoke of until March

2008 -- but is excellent otherwise.

http://www.powerdns.com/en/products.aspx

Licence: GNU GPLv2.

Unbound fast, small, modular caching, recursive server,

from the same people (NLnet Labs) who produced the excellent NSD

authoritative-only nameserver.

http://unbound.net/

Licence: BSD.

Late Addendum: The Real World Sends Rick a Memo

This might be you: You have one or more workstation or laptop on either a

small LAN or direct broadband (or dialup) connection. You're (quite

rightly) grateful that all the gear you run gets dynamic IP configuration

via DHCP. The only equipment you have drawing power on a 24x7 basis is

(maybe) your DSL modem or cable modem and cute little network gateway

and/or Wireless Access Point appliance (something like a Linksys WRT54G).

You don't have any "server" other than that.

Mea culpa: Being one of those oddballs who literally run servers

at home, I keep forgetting you folks exist; I really have no excuse.

Shortly after I submitted the first version of this article to

LG, a friend on my local Linux user group mailing list reminded

me of the problem: He'd followed my advice and installed Ubuntu's Unbound

package on his laptop, but then (astutely) guessed it wasn't being

sent queries, and asked me how to fix that. His house had the usual

network gateway appliance, which (if typical) runs Dnsmasq on embedded Linux

to manage his Internet service, hand out DHCP leases on a private IP

network, and forward outbound DNS requests to his ISP nameserver.

As I said above, it would be really nice, in such cases, to run a

recursive nameserver (and not just Dnsmasq) on the gateway appliance,

forwarding queries to it rather than elsewhere. That's beyond the scope

of my friend's immediate needs, however. Also, I've only lately, in

playing with OpenWRT on a couple of actual WRT54G v.3 devices received

as gifts, realised how thin most such appliances' hardware is: My units

have 16 MB total RAM, challenging even for RAM-thrifty Unbound.

My friend isn't likely to cobble together a static-IP server machine

just to run a recursive nameserver, so he went for the only alternative:

running such software locally, where the machine's own software can

reach it as IP 127.0.0.1 (localhost). The obstacle, then, becomes DHCP,

which by design overwrites /etc/resolv.conf (the DNS client

configuration file) by default every time it gets a new IP lease,

including any "nameserver 127.0.0.1" lines.

The simple and elegant solution: Uncomment the line "#prepend

domain-name-servers 127.0.0.1;" that you'll find already present in

/etc/dhcp3/dhclient.conf . Result: The local nameserver will be

tried first. If it's unreachable for any reason, other nameserver

IPs supplied by DHCP will be attempted in order until timeout. This

is probably exactly what people want.

(If, additionally, you want to make sure that your DHCP client never requests

additional "nameserver [IP]" entries from the DHCP server in the first

place, just edit the "request" roster in /etc/dhcp3/dhclient.conf to

remove item "domain-name-servers".)

Second alternative: Install package "resolvconf" (a utility that aims

to mediate various other packages' attempts to rewrite /etc/resolv.conf),

and add "nameserver 127.0.0.1" to /etc/resolvconf/resolv.conf.d/head so

that this line always appears appears at the top of the file. This is

pretty nearly the same as the first option.

Last, the time-honoured caveman-sysadmin solution: "chattr +i

/etc/resolv.conf", i.e., setting the file's immutable bit (as the root

user) so that neither your DHCP client nor anything else can alter the

file until you remove that bit. It's hackish and unsubtle, but worth

remembering for when only Smite with Immense Hammer solutions will

do.

Talkback: Discuss this article with The Answer Gang

Rick has run freely-redistributable Unixen since 1992, having been roped

in by first 386BSD, then Linux. Having found that either one

sucked less, he blew

away his last non-Unix box (OS/2 Warp) in 1996. He specialises in clue

acquisition and delivery (documentation & training), system

administration, security, WAN/LAN design and administration, and

support. He helped plan the LINC Expo (which evolved into the first

LinuxWorld Conference and Expo, in San Jose), Windows Refund Day, and

several other rabble-rousing Linux community events in the San Francisco

Bay Area. He's written and edited for IDG/LinuxWorld, SSC, and the

USENIX Association; and spoken at LinuxWorld Conference and Expo and

numerous user groups.

Rick has run freely-redistributable Unixen since 1992, having been roped

in by first 386BSD, then Linux. Having found that either one

sucked less, he blew

away his last non-Unix box (OS/2 Warp) in 1996. He specialises in clue

acquisition and delivery (documentation & training), system

administration, security, WAN/LAN design and administration, and

support. He helped plan the LINC Expo (which evolved into the first

LinuxWorld Conference and Expo, in San Jose), Windows Refund Day, and

several other rabble-rousing Linux community events in the San Francisco

Bay Area. He's written and edited for IDG/LinuxWorld, SSC, and the

USENIX Association; and spoken at LinuxWorld Conference and Expo and

numerous user groups.

His first computer was his dad's slide rule, followed by visitor access

to a card-walloping IBM mainframe at Stanford (1969). A glutton for

punishment, he then moved on (during high school, 1970s) to early HP

timeshared systems, People's Computer Company's PDP8s, and various

of those they'll-never-fly-Orville microcomputers at the storied

Homebrew Computer Club -- then more Big Blue computing horrors at

college alleviated by bits of primeval BSD during UC Berkeley summer

sessions, and so on. He's thus better qualified than most, to know just

how much better off we are now.

When not playing Silicon Valley dot-com roulette, he enjoys

long-distance bicycling, helping run science fiction conventions, and

concentrating on becoming an uncarved block.

Copyright © 2010, Rick Moen. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 170 of Linux Gazette, January 2010

The Village of Lan: A Networking Fairy Tale

By Rick Moen

There was a time, about a year ago, when I was trying to tell people

on a LUG mailing list why they should consider using a genuine,

full-service recursive nameserver on their local networks, rather

than just the usual forwarders and such. One of the leading lights of

that LUG countered with his own suggestion: "Run a caching-only or

caching-mostly nameserver". Argh! People get so mislead by such

really bad terminology.

The concept of a "caching" DNS nameserver really doesn't tell you

anything about what the nameserver really does. All

nameservers cache; that's not a name that conveys useful

information.

What I needed, to clarify the matter, was a compelling and vivid

metaphor, so I conjured one up. Here's what I posted back to the LUG

mailing list, reproduced for the possible entertainment of Linux

Gazette readers:

Although it's true that some recursive-nameserver configurations are

(sloppily) referred to as "caching", e.g., by RHEL/Fedora/CentOS, that's

a really bad name for that function -- because caching is orthogonal to

recursion.

Theoretically, you could write a nameserver that does recursive service

but doesn't cache its results. (That would be a bit perverse, and I

don't know of any.) Conversely, nameserver packages that cache but know

nothing about how to recurse and instead do less-helpful alternative

iterative service

iterative service

are common: dnsmasq, pdnsd, etc.

Last, it's also not unheard-of to create a really modest caching

nameserver package that doesn't do even iterative service of its own,

but is purely a forwarder that knows the IP of a real nameserver --

or leaves that job up to the machine's resolver library (DNS client).

I believe dproxy and nscd would be good examples.

And, whether they cache or not (and pretty much everything caches

except OS client resolver libraries), nameservers that aren't

just forwarders offer either recursive or merely iterative

service, which makes a big difference.

I know it's not your fault, but terms like "caching-only" and

"caching-mostly" obscure the actual important bits. Fortunately, I

think I've just figured out how to fully de-obfuscate the topic. Here:

The mythical village of Lan has some talkative people (programs) in it.

Oddly, they spend most of their time talking about DNS data ;-> , but

all have quirks about their respective conversational styles.

-

Larry, the local DNS library (the crummy BIND8-derived code bundled

into GNU libc), is the guy with all the questions. He's the guy

who goes around asking other people about DNS records. Larry has

a really awful memory (no cache), so he ends up asking the same

questions over and over.

-

Nick (nscd, the nameservice caching daemon) is a kid whom Larry

has ride on his shoulders occasionally. Although he doesn't

accept queries himself, exactly, he listens to answers Larry

gets from others, remembers them, and whispers them in Larry's

ear when the forgetful old guy is about to go bother others about

them yet again. Larry's glad to carry Nick around, to help

compensate for his memory problem. On the downside, Nick's a

bit sloppy and doesn't bother to commit to memory the data's

spoiled-by dates. (He doesn't cache TTLs.)

(I hear that the Linux nscd implementation's rather severe fault of

not caching TTLs is slated to soon be fixed. Meanwhile, smart people

don't use its host caching functions.)

-

Frank is a forwarder (a copy of dproxy). He's not really bright,

but has a pretty good memory. If you ask him a DNS question, he

either says "Dunno, so I'll ask Ralph", or he says "Hey, someone

asked me that just yesterday, and I got the answer via Ralph.

Let's see if I still have the paper... yes. Here's your IP (or

other requested datum -- and you're in luck, as it still has 6

days remaining in its TTL)." Thus, he forwards all queries he

receives -- always to Ralph only, never anyone more suited to the

query -- and caches whatever Ralph tells him.

When Larry gets such answers, he might also seek some context

information from Frank. (Remember, Larry has sucky short-term

memory.) "Hey, Frank, thanks. Is that answer something you know

from your own knowledge?" "No, man, it's just what I hear."

-

Nils is an authoritative nameserver (running the "nsd" software),

charged ONLY with answering questions of the form "What is

[foo].lanvillage.com?" When Larry asks him such a question, he

answers and adds that it is from his own knowledge (authoritative).

If you ask him about anything outside lanvillage.com, he

frowns and say "Dunno, man."

-

Ralph is a recursive nameserver (an instance of PowerDNS

Recursor). Ralph is a born researcher: He doesn't have any

DNS knowledge of his own (offers no authoritative service), but

absolutely lives for finding out what DNS data can be unearthed

from guys in other villages. If you ask him a DNS question,

he'll figure out whom to ask, go there regardless of where it

takes him, if necessary chase around the state following leads,

and only bother you when he has the final answer. (This is

the "recursive" part. Compare with the iterative approach,

below.) Like everyone else in this village except Larry, he

has a good memory (caching).

Let's say, Larry asks Ralph, "What's uncle-enzo.linuxmafia.com?"

In the worst case, if Ralph is just back from vacation (has a

depleted cache), Ralph goes off for a while, and says: "I

looked on my desk to see if the answer were there (in cache),

but it wasn't. So, I checked to see if answers to the question

'Where are nameservers for the linuxmafia.com domain?' were there,

but they weren't either. So, I check to see if answers to the

question 'Where are nameservers for the .com top-level domain?'

were there, but they weren't either. So, I finally fell back

on my Rolodex's list of 13 IP addresses of root nameservers,

picked one, and visited it. I asked it where I can get answers

to .com questions. It gave me a list of .com nameserver IPs.

I picked one, visited it, and asked who knows about linuxmafia.com

DNS matters. It gave me a list of linuxmafia.com IPs, I picked

one, visited it, asked it where uncle-enzo.linuxmafia.com is,

brought the answer back, and here y'are. Oh, and I've made a

note who knows where .com information is, where specifically

linuxmafia.com information is, and also where

uncle-enzo.linuxmafia.com is, just in case I'm asked again."

Larry's fond of Ralph, because Ralph does all the work and

doesn't come back until he can give either the answer or

"Sorry, this took too long", or "There's no such host", or

something similarly dispositive.

-

Dwayne is a nameserver offering iterative service only

(running dproxy). You can get any DNS answer out of him

eventually, but he's not willing to bounce around the state

following leads. A conversation with Dwayne is sort of

punctuated:

"Do you know where uncle-enzo.linuxmafia.com is?" "No."

"Well, do you know who knows?" "No."

"Well, do you know who knows where to find .com nameservers?" "No."

"Well, can you ask the root nameserver where to find .com

nameservers?" "OK." [Gives list of 13.]

"Can you ask .com nameserver #1 who answers for linuxmafia.com?"

"OK." [Gets list of five nameserver IPs.]

"Can you ask linuxmafia.com nameserver #1 where

uncle-enzo.linuxmafia.com is?" "OK." [Queries and gets answer.]

Next time Dwayne is asked any of the first three questions, his

answer is no longer "No", because he is able to answer from memory

(cache).

Notice that Dwayne, although not as helpful and resourceful as

Ralph, is at least going about things a bit more intelligently

than Frank does: Dwayne is able to look up the chain of people

outside to query (iteratively) to find the right person, whereas

Frank's idea of research is always "Ask Ralph."

At the outskirts of the Village of Lan is a rickety rope-bridge to the

rest of the state. (Think "Indiana Jones and the Temple of Doom", or

"Samurai Jack and the Scotsman".) All of Lan's commerce has to go over

that bridge (an analogy to, say, a SOHO network's overburdened DSL

line). Frank or Dwayne's bouncing back and forth across that bridge

continually gets to be a bit of a problem, because of bridge congestion.

Ralph's better for the village, because he tends to go out, wander

around the state, and then come back -- minimising traffic. And all of

these guys with functional short-term recall (everyone but Larry)

is helping by avoiding unnecessary bridge crossings.

-

On the far side of the (slow, rickety, congested) ropebridge is

Ollie (the OpenDNS server). Ollie is willing to do recursive

service the way Ralph does, but instead of saying "I checked,

and there is no such DNS datum" where that is the correct answer,

it says "guide.opendns.com" (IP 208.67.219.132), which is the

IP of a OpenDNS Web server, advertising-driven, that tries to

give you helpful Web results (plus ads) -- something that's

less than useful, and in fact downright harmful, if what's

attempting to use DNS isn't a Web browser. (Gee, ever heard

of e-mail, for example?)

I don't know about you, but I think "That place doesn't exist"

is a better answer than "guide.opendns.com, IP 208.67.219.132",

when "That place doesn't exist" is in fact the objectively

correct answer. Ollie says:

$ dig i-dont-exist.linuxmafia.com @resolver2.opendns.com +short

208.67.219.132

By contrast, my own nameserver gives the actually correct answer:

$ dig i-dont-exist.linuxmafia.com @ns1.linuxmafia.com +short

$

(Specifically, it says "NXDOMAIN", which means "That place doesn't

exist.")

Getting back to Larry: Larry can outsource all of his queries across

the rickety, thin, congested ropebridge to Ollie -- and spend time

waiting for Ollie and watching him barge continually across it every

time there's a query -- and know that Ollie gives bullshit answers every

time the correct answer should be "That place doesn't exist."

Larry can use Dwayne's services -- something of an exercise in

frustration but better than nothing. He can tap Frank, which is

barely better than nothing. Or, he can use Ralph.

Regardless of whom he asks, he can let Nick ride on his shoulders and

serve as his aide-memoire -- with the drawback that Nick carelessly

disregards something really, really important (TTLs).

Personally, if I were Larry, I'd give Frank, Dwayne, and Ollie a pass,

dump Nick, and give Ralph a call.

Talkback: Discuss this article with The Answer Gang

Rick has run freely-redistributable Unixen since 1992, having been roped

in by first 386BSD, then Linux. Having found that either one

sucked less, he blew

away his last non-Unix box (OS/2 Warp) in 1996. He specialises in clue

acquisition and delivery (documentation & training), system

administration, security, WAN/LAN design and administration, and

support. He helped plan the LINC Expo (which evolved into the first

LinuxWorld Conference and Expo, in San Jose), Windows Refund Day, and

several other rabble-rousing Linux community events in the San Francisco

Bay Area. He's written and edited for IDG/LinuxWorld, SSC, and the

USENIX Association; and spoken at LinuxWorld Conference and Expo and

numerous user groups.

Rick has run freely-redistributable Unixen since 1992, having been roped

in by first 386BSD, then Linux. Having found that either one

sucked less, he blew

away his last non-Unix box (OS/2 Warp) in 1996. He specialises in clue

acquisition and delivery (documentation & training), system

administration, security, WAN/LAN design and administration, and

support. He helped plan the LINC Expo (which evolved into the first

LinuxWorld Conference and Expo, in San Jose), Windows Refund Day, and

several other rabble-rousing Linux community events in the San Francisco

Bay Area. He's written and edited for IDG/LinuxWorld, SSC, and the

USENIX Association; and spoken at LinuxWorld Conference and Expo and

numerous user groups.

His first computer was his dad's slide rule, followed by visitor access

to a card-walloping IBM mainframe at Stanford (1969). A glutton for

punishment, he then moved on (during high school, 1970s) to early HP

timeshared systems, People's Computer Company's PDP8s, and various

of those they'll-never-fly-Orville microcomputers at the storied

Homebrew Computer Club -- then more Big Blue computing horrors at

college alleviated by bits of primeval BSD during UC Berkeley summer

sessions, and so on. He's thus better qualified than most, to know just

how much better off we are now.

When not playing Silicon Valley dot-com roulette, he enjoys

long-distance bicycling, helping run science fiction conventions, and

concentrating on becoming an uncarved block.

Copyright © 2010, Rick Moen. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 170 of Linux Gazette, January 2010

A Quick Lookup Script

By Ben Okopnik

Over the years of using computers, as well as in the process of growing

older and more forgetful, I've discovered the value of having quick,

focused ways of accessing important information. As an example, I have a

password-protected, encrypted file containing my passwords and all the

information related to them (i.e., hostname, username, security questions,

etc.), as well as a script that allows me to look up the information and

safely edit it; I also have a quotations file that I've been assembling for the

last 20-plus years, and a script that looks up the quotations and lets me add

new ones easily. In this article, I'll show yet another, similar script -

one that lets me keep track of often-used commands and various tips and

tricks that I use in Linux, Solaris, and so on.

For those familiar with database terminology, this is a minimal version of

a CRUD

(create, read, update, and delete) interface, minus the 'delete' option -

as an old database administrator, I find even the thought of data deletion

distasteful, anyway.  Updating is a

matter of adding a new entry with a distinct search string - and if you've added

something that you decide you don't need later, all you have to do is not look

it up.

Updating is a

matter of adding a new entry with a distinct search string - and if you've added

something that you decide you don't need later, all you have to do is not look

it up.

Keeping shell scripts from blowing up

One of the things I've found out in writing and using this type of script

is that there are pitfalls everywhere: any time you write something that

takes human-readable information and tries to parse it, you're going to run

into interface problems. Not only are the data items themselves suspect -

although that's not an issue in this case, where the information is just a

textual reminder - but also the user interaction with the script has edge

cases all over the place. As a result, 99% of this script is tests for

wrong actions taken by the user; the actual basic function of the script is

only about a dozen lines. The most amusing part of how things can break is

what you get after a while of not using the script and forgetting its

quirks: even I, its author, have managed to crash it on occasion! These

were always followed by half-amused, half-frustrated muttered curses and

fixes - until there wasn't anything left to fix (at least, based on the

problems that I could stumble across, find, imagine, or create.) As always,

I'd appreciate feedback, if anyone discovers a problem I haven't found!

Usage

On my system, the script is simply called 'n' - as I recall, I was

originally thinking of 'note' at the time that I named it. You can download

the latest version from here,

although the one on the LG

server isn't likely to go stale any time soon; this script hasn't

changed in quite a while.

The run options for this script are deceptively simple, and can be seen by

invoking it with '-h' or '--help' as an argument:

ben@Jotunheim:~$ n -h

n [-h|--help] | [section] [comment_search_string]

If invoked without any argument, asks for the section and entry text to

be added to the data file. If an argument is provided, and matches an

existing section, that entire section is displayed; if two arguments

are supplied, displays the first entry matching arg[2] in the section

matching arg[1].

Here's an example of adding a new entry:

ben@Jotunheim:~$ n

Current sections:

awk

date

find

grep

mencoder

misc/miscellany/miscellaneous/general

mount

mp3/ogg/wav

mplayer

netstat

perl

shell/bash/ksh

sort

tcpdump

vi/vim

whois

Please enter the section to update or a new section name: perl

Please type in the entry to be added to the 'perl' section:

# Interactive Perl debugger

perl -d scriptname

ben@Jotunheim:~$

Note that each entry requires a comment as its first line, and is

terminated by a blank line. The comment may be continued on the subsequent

lines, and is important: the default action for lookup (this may be changed

via a setting in the script) is to search only the comment when the script

looks for a specific entry in a given section. In other words, if I wanted

to find the above entry, I'd search for it as

n perl debug

This would return every entry in the 'perl' section that contained 'debug'

in the comment. If you wanted a "looser" type of search, that is, one which

would match either the comment or the content, you could change the

'search_opt' variable near the top of the script as described there.

Personally, I prefer the "comment-only" search - but, in this, I'm taking

advantage of my "Google-fu", that is, my ability to formulate a good search

query. The trick is that I also use the same thing, the same mode of thinking,

when I create the comment for an entry: I write comments that are

likely to match a sensibly-formed query even if I have absolutely no memory

of what I originally wrote.

So far, so simple - but that's not all there is to it. The tricky part is

the possibly-surprising features that are a natural consequence of the

tools that I used to write the script. That is, since I used the regex

features of 'sed' to locate the sections and the lines, the arguments

provided to the script can also be regexes - at least, if we take care to

protect them from shell expansion by using single quotes around them. With

those, this script becomes much more useful, since you can now look for

more arbitrary strings with more freedom. For example, if I remember that I

had an entry mentioning 'tr' in some section, I can tell 'n' to look for

'tr' in the comment, in all the sections:

n '.*' tr

However, if the above search comes back with too many answers (as it will,

since 'trailing', 'matrix',

'control', and so on will all match), I can tell 'n' to

look for 'tr' as a word - i.e., a string surrounded by

non-alphanumeric characters:

n '.*' '\<tr\>'

This returns only the match I was looking for.

I can also look for entries in more than one section at once, at least if I

remember to use 'sed'-style regular expressions:

n 'perl\|shell' debug

This would search for any entry that has 'debug' in the comments, in both

the 'perl' and 'shell' sections.

This can also go the other way:

n perl 'text\|binary'

Or even:

n 'awk\|perl' 'check\|test'

One of the convenient options, when creating a new section, is to use more

than one name for it - that is, there may be more than one section name

that would make sense to me when I'm thinking of looking it up. E.g., for

shell scripting tips and tricks, I might think of the word 'shell' - but I

might also think of 'bash', 'ksh', or even 'sh' first. (Note that case

isn't an issue: 'n' ignores it when looking up section names.) What to do?

Actually, this is quite easy: 'n' allows for multiple names for a given

section as long as they are separate words, meaning that they are delimited

by any non-alphanumeric character except an underscore. So, if you name a

section 'KSH/sh/Bash/shell/script/scripting', any one of these specified as

a section name will search in the right place. Better yet, when you add a

new entry, specifying any of the above will add it in the right place.

Since the data is kept in a plain text file, you can always edit it if you

make a mistake. (The name and the location of the file, '~/.linux_notes',

is defined at the top of the script, and each user on a system can have one

of their own - or you could all share one.) Just make sure to maintain the

format, which is a simple one: section names start with '###' at the

beginning of the line, comments start with a single '#', and entries and

sections are separated by blank lines. If you do manage to mess it up

somehow, it's not a big deal - since the file is processed line by line,

every entry stands on its own, and, given the design of the script,

processing is fairly robust. (E.g., multiple blank lines are fine; using

multiple hashes in front of comments would be OK; even leaving out blank

lines between entries would only result in the 'connected' entries being

returned together.) Even in the worst case, the output is still usable and

readable, and any necessary fixes are easy and obvious.

'n' as a template

One of the things I'd like the readers of LG to take home from

this article is the thought of 'n' as a starting place for their own future

development of this type of scripts. There are many occasions when you'll

want an "update and search interface", and this is a model I've used again

and again in those situations, with only minimal adjustments in all cases.

Since this script is released under the GPL,

please feel free to tweak, improve, and rewrite it however you will. Again,

I'd appreciate being notified of any interesting improvements!

Talkback: Discuss this article with The Answer Gang

Ben is the Editor-in-Chief for Linux Gazette and a member of The Answer Gang.

Ben was born in Moscow, Russia in 1962. He became interested in electricity

at the tender age of six, promptly demonstrated it by sticking a fork into

a socket and starting a fire, and has been falling down technological

mineshafts ever since. He has been working with computers since the Elder

Days, when they had to be built by soldering parts onto printed circuit

boards and programs had to fit into 4k of memory (the recurring nightmares

have almost faded, actually.)

His subsequent experiences include creating software in more than two dozen

languages, network and database maintenance during the approach of a

hurricane, writing articles for publications ranging from sailing magazines

to technological journals, and teaching on a variety of topics ranging from

Soviet weaponry and IBM hardware repair to Solaris and Linux

administration, engineering, and programming. He also has the distinction

of setting up the first Linux-based public access network in St. Georges,

Bermuda as well as one of the first large-scale Linux-based mail servers in

St. Thomas, USVI.

After a seven-year Atlantic/Caribbean cruise under sail and passages up and

down the East coast of the US, he is currently anchored in northern

Florida. His consulting business presents him with a variety of challenges

such as teaching professional advancement courses for Sun Microsystems and

providing Open Source solutions for local companies.

His current set of hobbies includes flying, yoga, martial arts,

motorcycles, writing, Roman history, and mangling playing

with his Ubuntu-based home network, in which he is ably assisted by his wife and son;

his Palm Pilot is crammed full of alarms, many of which contain exclamation

points.

He has been working with Linux since 1997, and credits it with his complete

loss of interest in waging nuclear warfare on parts of the Pacific Northwest.

Copyright © 2010, Ben Okopnik. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 170 of Linux Gazette, January 2010

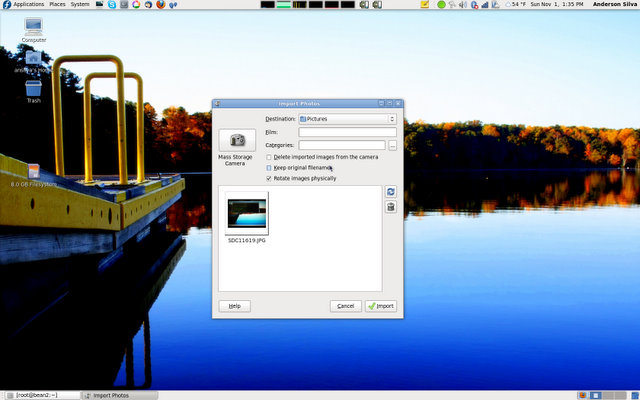

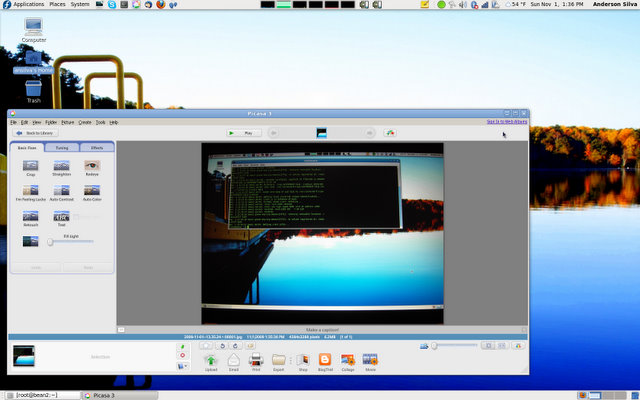

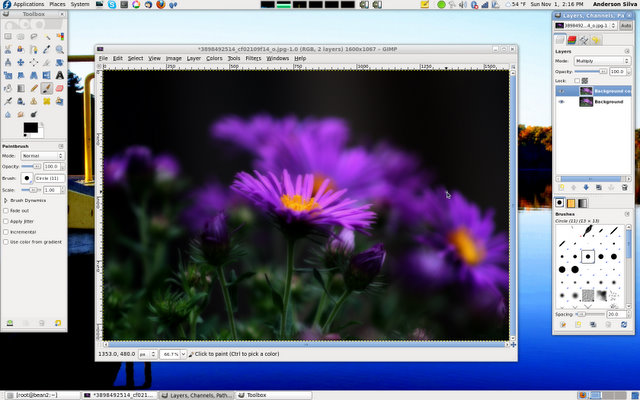

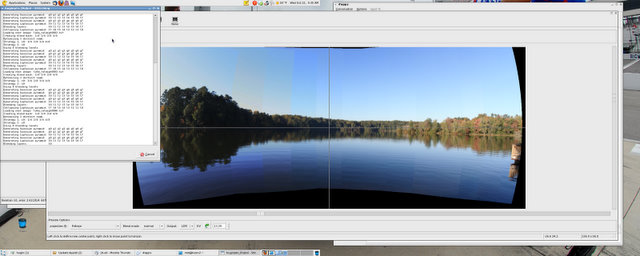

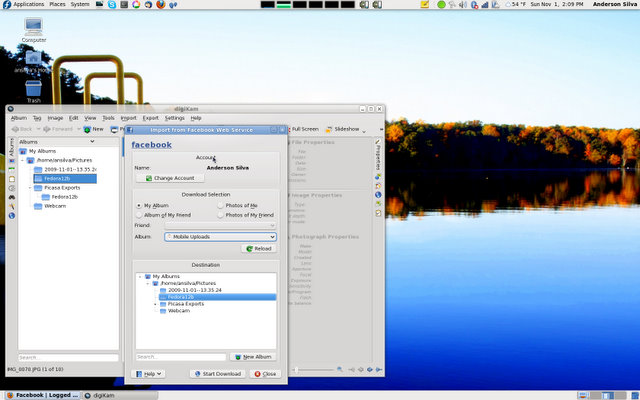

The things I do with digital photography on Linux

By Anderson Silva

I've been a Linux user for almost fifteen years, and a digital

photography enthusiast for about ten. I bought my first digital camera back

in the late 90s while in college, which I used mostly for sharing pictures

of my family with my parents, who live in Brazil. About five or six years