...making Linux just a little more fun!

October 2009 (#167):

- Mailbag

- Talkback

- 2-Cent Tips

- News Bytes, by Deividson Luiz Okopnik and Howard Dyckoff

- QQ on Linux, by Silas Brown

- Away Mission - SecureWorld Expos, by Howard Dyckoff

- Away Mission - Upcoming in October, by Howard Dyckoff

- A Quick-Fire chroot Environment, by Ben Okopnik

- Two is better than one!, by S. Parthasarathy

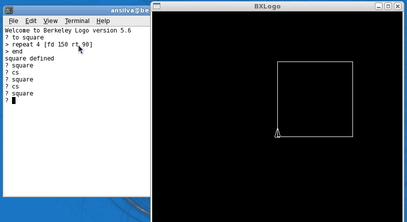

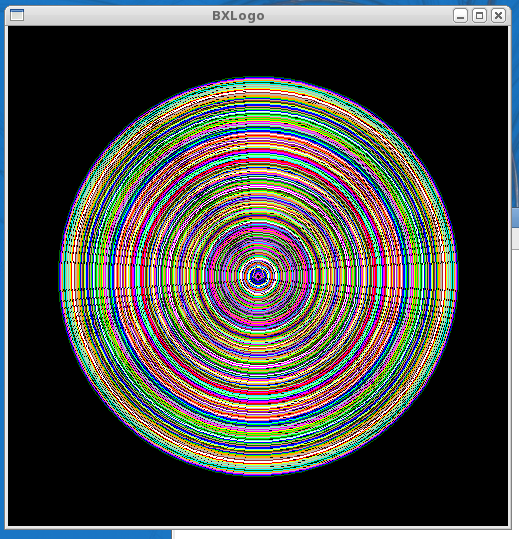

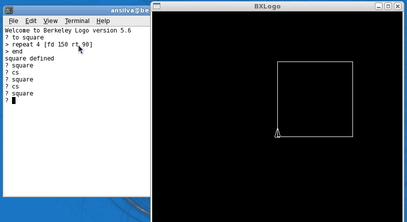

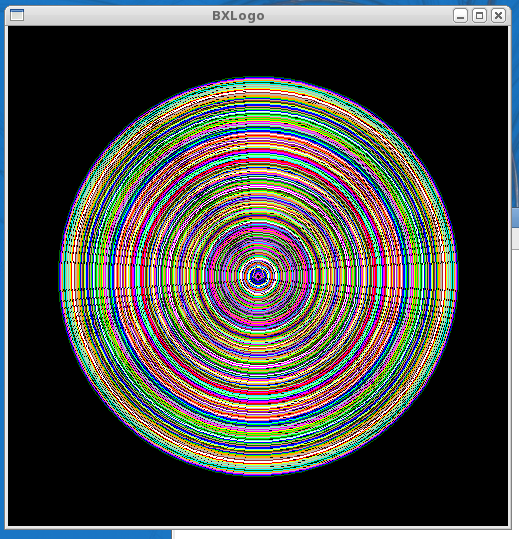

- Using Linux to Teach Kids How to Program, 10 Years Later (Part II), by Anderson Silva

- XKCD, by Randall Munroe

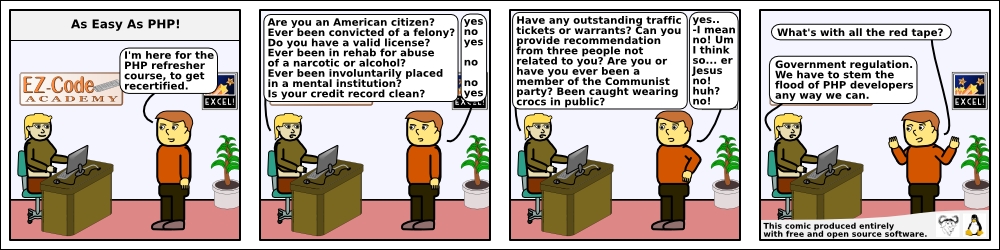

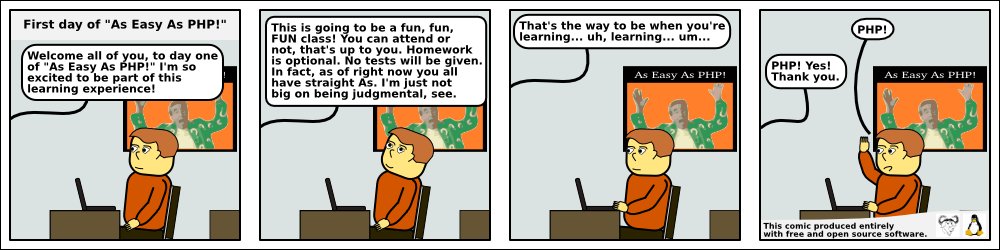

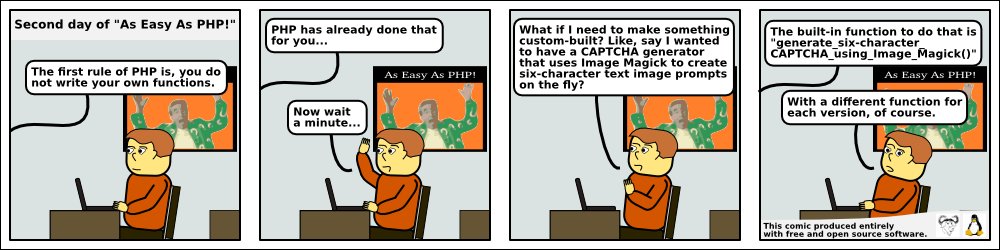

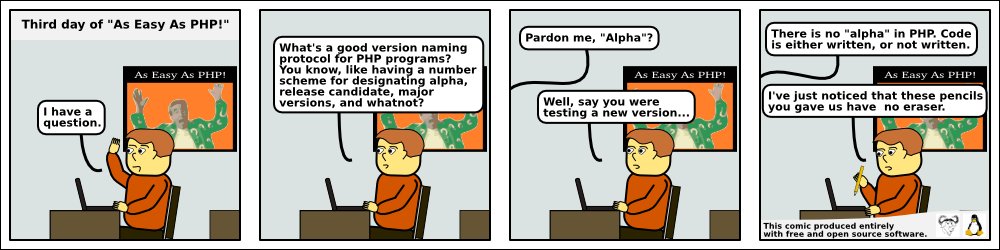

- Doomed to Obscurity, by Pete Trbovich

- The Linux Launderette

Mailbag

This month's answers created by:

[ Anderson Silva, Amit Kumar Saha, Ben Okopnik, Kapil Hari Paranjape, Mulyadi Santosa, Rick Moen, Suramya Tomar, Thomas Adam ]

...and you, our readers!

Gazette Matters

Small grammar correction on my article

Anderson Silva [afsilva at gmail.com]

Wed, 2 Sep 2009 01:15:24 -0400

The line:

Where 90 and 270 is how many degrees the turtle should turn given the

right or left command.

Should probably read:

Where 90 and 270 are how many degrees the turtle should turn given the

right or left command.

Apologies for not catching that earlier.

--

http://www.the-silvas.com

[ Thread continues here (7 messages/4.82kB) ]

[OT] Meetup?

Jimmy O'Regan [joregan at gmail.com]

Tue, 15 Sep 2009 14:03:22 +0100

I'll be in San Francisco for a couple of days next month (Google

Mentor Summit). Will anyone else be around?

[ Thread continues here (3 messages/1.69kB) ]

a bit misleading sentence in Back Page of LG #166:)

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Wed, 2 Sep 2009 22:50:26 +0700

Hi all

I happened to click on the back page of LG #166

(http://linuxgazette.net/166/lg_backpage.html). There, it is written:

"special thanks are due to Mulyadi Santosa, who brought Pete

Trbovich's "Doomed to Obscurity" trenchantly funny cartoons to our

attention"

I checked the Sent Mail folder in my Gmail and found no message

indicating that I told anyone about that cartoon. Perhaps Kat mistakenly

gave the credit to me? I just don't want to steal someone else's

credit.

Thanks in advance for your attention...

--

regards,

[ Thread continues here (5 messages/4.35kB) ]

Linux Gazette in Serbian language

[zarko at googlux.com]

Sun, 13 Sep 2009 13:54:44 -0400 (EDT)

Hi all

I would like to introduce myself and this site, which I hope will become

a popular place for Linux Gazette translations into the Serbian language.

Most of my work revolves around Solaris administration, but sometimes I

also need to do Linux work, and the Gazette is a great source of helpful tips.

Despite my extremely busy professional (and personal) schedule, I'll try

to find time for translation, but I also encourage people from the former

Yugoslavia to contact me and submit their translations. They all know that

there is not much difference between languages like Serbian, Croatian,

Bosnian, etc., so I am willing to publish all translations (in either the Cyrillic

or the Latin alphabet).

Cheers

Zarko

http://www.googlux.com/linuxgazette/

[ Thread continues here (2 messages/2.43kB) ]

Still Searching

iptables configuration question

J. Bakshi [j.bakshi at unlimitedmail.org]

Thu, 3 Sep 2009 21:39:36 +0530

Hello list,

Hope you are all well. I have been working on my iptables

configuration for nearly 2 weeks and am badly stuck on a very interesting point. Among

several other features of my firewall, one I would like to implement is

limit_total_connection_of_a_service. There is already a rate limiter, so that

a user can't get more than 2 FTP connections per minute from the same source

IP. I have used hashlimit for this. It can also be extended to restrict

the *overall total* to 2 connections per minute.

- But how can I restrict a particular source to 2 FTP connections in total?

- And how can I restrict the server to 5 FTP connections altogether?

Could anyone suggest an iptables configuration or iptables module to

achieve these two objectives? Thanks

PS: Please CC me.

[ Thread continues here (2 messages/2.09kB) ]

Regarding How can Define congestion windows in Fedora

Ben Okopnik [ben at linuxgazette.net]

Fri, 18 Sep 2009 23:06:39 -0500

----- Forwarded message from brahm sah -----

Date: Thu, 17 Sep 2009 11:41:36 +0530 (IST)

From: brahm sah

Subject: Regarding How can Define congestion windows in Fedora

Respected Sir.

I am Mr. Brahm Deo Sah, a Master of Technology (Computer Science) student at

M.G.M.'s College of Engineering, Nanded (Maharashtra), India. I have selected

one of the IEEE Transactions papers, "Accumulation based congestion control", as my

dissertation topic. It would be really great if you could help me out with the

implementation of this paper.

I have some problems defining

1. the congestion window size and 2. the algorithms in NS-2 with Linux (Fedora).

Thanking you.

Mr. Brahm Deo sah

India

Our Mailbag

Apache -- Rewrite on hiding Webapp context from URL

Britto I [britto_can at yahoo.com]

Sun, 6 Sep 2009 04:06:55 -0700 (PDT)

Folks:

I would like some configuration advice from you guys for Apache.

I have Apache 2.2.8 running on a RHEL box.

I have multiple webapps running in Tomcat and connected through Apache

via an AJP proxy.

Currently we have rewrite rules like these:

RewriteRule ^/Context1$ /Context1/ [R]

RewriteRule ^/Context2$ /Context2/ [R]

RewriteRule ^/$ /DefaultContext/ [R]

Here Context1, Context2, and DefaultContext are all different webapps hosted

by Tomcat.

When somebody calls for www.mysite.com, it now goes to

www.mysite.com/DefaultContext/.

How can I hide DefaultContext so that it isn't displayed in the URL?

That is, if there is a hit at www.mysite.com/DefaultContext/DoAction,

the user should see www.mysite.com/DoAction.

Any advice, guys?

Thanks & regards,

Britto

[ Thread continues here (7 messages/3.38kB) ]

failed to boot into RAID-5 root filesystem in Ubuntu 8.04

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Fri, 4 Sep 2009 21:46:28 +0700

Hi Gang...

Today I tried to set up a server using Ubuntu 8.04. This PC has 3 hard

disks and I plan to bond them as a RAID 5 device.

To achieve that, during the setup stage, I created 6 partitions. 3 of them

are clustered as RAID 5 and mounted as /, while the rest are also made

into a RAID 5 device and formatted as swap.

There was a warning telling me that those new RAID devices wouldn't be

recognized until I rebooted the machine. I hit the Continue button and

proceeded with package installation, etc. Everything seemed OK, and finally

the installer asked me to remove the DVD and reboot.

Here comes the trouble: the freshly installed Ubuntu won't boot. My

partner told me that it could be due to unfinished RAID synchronization.

So I booted the Ubuntu DVD again, this time picking "rescue mode" and going

into a shell (making /dev/md0 the root fs). In this shell, I did "cat

/proc/mdstat" and found that the sync was still under way. So I waited

until it hit 100% and rebooted the machine again. But still, no luck.

So I wonder: is this because I picked a RAID 5 device as the root fs? Is

this a known bug (or limitation of GRUB)? I came to this conclusion

after reading http://advosys.ca/viewpoints/2007/04/setting-up-software-raid-in-ubuntu-server/

(the 8th comment).

Any advice is greatly appreciated. Thank you in advance for your attention.....

--

regards,

[ Thread continues here (7 messages/7.58kB) ]

Calling Linux Application From a Windows Application

Rennie Johnson [renniejohnson at adelphia.net]

Sat, 5 Sep 2009 09:34:34 -0700

Oh great Linux Gurus:

I read your article on VMWare about running Linux apps in windows.

[[[ He probably means Jimmy O'Regan's article, here:

http://linuxgazette.net/106/oregan.html -- Kat ]]]

I'm writing a monster OpenGL application on Windows. I want to be able

to call a Linux shell application from within my program, as it is not

available on Windows. I use Visual C++ 2008.net.

Any ideas?

Best regards,

Rennie Johnson

[ Thread continues here (4 messages/5.42kB) ]

asking for recommendation for Linux compatible external DVD RW drive

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Mon, 7 Sep 2009 22:41:26 +0700

Hi Gang...

Googling here and there, I found very few web sites that talk

about recommended Linux-compatible DVD RW drives. So, I'll try my luck

here... perhaps you have a recommendation? Preferably below US$ 100?

FYI I am currently using Fedora 9 and kernel 2.6.30.4

Thanks in advance for your help and attention...

--

regards,

[ Thread continues here (3 messages/2.60kB) ]

Can anyone with an Indian locale test these for me?

Suramya Tomar [security at suramya.com]

Fri, 11 Sep 2009 07:27:13 +0530

Hi Jimmy,

> One of our users is complaining that our transliterator doesn't work;

> the few of us who have tested it all find that it works for us. Now,

> he says it only works because our locales are wrong(?). I'm just

> wondering if anyone other than him can get the incorrect result: i.e.,

> that 'ा' fails to transliterate to 'ા' -- I reproduced the same test

> using standard tools below, because they do the same thing as our

> transliterator.

Just saw this email, so tried it out on my system. This is what I got:

suramya@Wyrm:/usr/lib/locale$ echo $LANG

en_IE.UTF-8

suramya@Wyrm:/usr/lib/locale$ echo राम|tr 'राम' 'રામ'

રામ

suramya@Wyrm:/usr/lib/locale$ echo राम|sed -e 's/र/ર/g'|sed -e 's/ा/ા/g'|sed -e 's/म/મ/g'

રામ

suramya@Wyrm:/usr/lib/locale$ echo राम|perl /home/suramya/Temp/test.pl

રામ

suramya@Wyrm:/usr/lib/locale$

It seems to be working fine for me... Hope this helps.

- Suramya

[ Thread continues here (3 messages/3.34kB) ]

Talkback: Discuss this article with The Answer Gang

Published in Issue 167 of Linux Gazette, October 2009

Talkback

Talkback:166/lg_tips.html

[ In reference to "2-Cent Tips" in LG#166 ]

Ben Okopnik [ben at linuxgazette.net]

Mon, 7 Sep 2009 08:37:11 -0500

----- Forwarded message from Rob Reid -----

Date: Thu, 3 Sep 2009 22:04:21 -0400

From: Rob Reid

Subject: tkb: Re: 2-cent Tip: Conditional pipes

Hi Ben,

I'm glad to see the nitty-gritty of shells and terminals being dealt with in

Linux Gazette. One thing that might have been unclear to the reader is that

the

LESS=FX less

syntax is (ba|z)sh code for "run less with the LESS variable set to FX for this

time only". As you probably know, you could alternatively put

export LESS="-FRX" # The R is for handling color.

in your ~/.profile to have less nicely customized every time you run it, with

less typing.
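Here is a small sketch of the distinction Rob describes. DEMO_VAR is a hypothetical stand-in for LESS, chosen so that trying it out doesn't touch your real pager settings; any POSIX shell should behave the same way.

```shell
#!/bin/sh
# DEMO_VAR is a hypothetical stand-in for LESS; any variable name behaves the same.
unset DEMO_VAR

# Prefix form (like "LESS=FX less"): the assignment lands in the
# environment of this one command only.
DEMO_VAR=FX sh -c 'echo "inside the command: $DEMO_VAR"'
echo "back in the shell: ${DEMO_VAR:-unset}"

# export form (what a line in ~/.profile does): the variable stays set in the
# current shell and is inherited by every command started afterwards.
export DEMO_VAR="-FRX"
sh -c 'echo "later command sees: $DEMO_VAR"'
```

Run as a script, it prints "inside the command: FX", then "back in the shell: unset", then "later command sees: -FRX".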

--

Rob Reid http://www.cv.nrao.edu/~rreid/

Assistant Scientist at the National Radio Astronomy Observatory

Isn't it a bit unnerving that doctors call what they do "practice?"

- Jack Handey

[ Thread continues here (2 messages/3.18kB) ]

Talkback:166/ziemann.html

[ In reference to "Internet Radio Router" in LG#166 ]

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Wed, 2 Sep 2009 22:27:37 +0700

This article reminds me of the day I made my first crude webmail using

Perl CGI. Not too good, but also not so bad considering the fact "It

Just Works" and I made it using Perl while most of my friends would

prefer using PHP to do such a thing.

Bash scripting for CGI....ouch that's just great! and old sk00lz too!

Thanks Mr Ziemann for sharing your work....

--

regards,

[ Thread continues here (21 messages/23.00kB) ]

Talkback:166/kachold.html

[ In reference to "Linux Layer 8 Security" in LG#166 ]

Thomas Johnson [tommyj27 at gmail.com]

Wed, 2 Sep 2009 08:22:54 -0500

There is a typo in one of the Matahari URLs in this article. The URL

points at sourceforce.net, rather than sourceforge.net.

[ Thread continues here (3 messages/1.24kB) ]

Talkback:165/forsberg.html

[ In reference to "Software Development on the Nokia Internet Tablets" in LG#165 ]

Kapil Hari Paranjape [kapil at imsc.res.in]

Mon, 31 Aug 2009 06:07:40 +0530

Hello,

Thanks for a nice article explaining how to set up and use Scratchbox

for developing on the Nokia tablets.

Since the N900 has just been announced by Nokia, you can expect a lot of

links to this issue of LG in a short while!

Regards,

Kapil.

--

Talkback:166/lg_mail.html

[ In reference to "Mailbag" in LG#166 ]

Jimmy O'Regan [joregan at gmail.com]

Wed, 2 Sep 2009 12:43:56 +0100

We got to the bottom of the transliteration problem: on Vineet's

system, there was a flaw in the locales -- matras were defined as

'punct' instead of 'alpha'.

2009/8/6 Jimmy O'Regan:

> 2009/8/6 Vineet Chaitanya:

>> All the three tests suggested by you passed successfully (using unmodified

>> en_IE.UTF-8 locale).

>>

>>

>> But if I modify "i18n" file so that "matraas" are declared as "alpha"

>> instead of "punct" then lt-proc -t also works correctly.

>>

>> In any case I would like to have a look at your "i18n" file and would

>> like to know the reason why it differs from the usual one.

>

>

> in 'i18n', I guess this comment from 'LC_CTYPE' agrees with you:

> ``

> % - All Matras of Indic and Sinhala are moved from punct to alpha class/

> % - Added Unicode 5.1 charctares of Indic scripts/

> % DEVANAGARI/

> <U0901>..<U0939>;<U093C>..<U094D>;/

> <U0950>..<U0954>;<U0958>..<U0961>;/

> <U0962>;<U0963>;<U0972>;<U097B>..<U097F>;/

> ''

>

> So yes, the matras are in the 'alpha' class on my system, not 'punct'.

>

> $ apt-cache show locales

> Package: locales

> Priority: required

> Section: libs

> Installed-Size: 8796

> Maintainer: Martin Pitt

> Architecture: all

> Source: langpack-locales

> Version: 2.9+cvs20090214-7

>
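Since the fault turned out to be whether the matras sit in the 'alpha' or the 'punct' class, one quick way to see how a given locale classifies a character is to ask grep, whose POSIX bracket expressions follow the locale's LC_CTYPE settings. This is a minimal sketch assuming GNU grep; char_class is a hypothetical helper name, not part of any tool mentioned in the thread.

```shell
#!/bin/sh
# Hypothetical helper: report which character class the current locale
# (LC_ALL / LC_CTYPE / LANG) assigns to a single character.
# grep's [[:alpha:]] and [[:punct:]] classes follow the locale's LC_CTYPE.
char_class() {
    if printf '%s\n' "$1" | grep -q '^[[:alpha:]]$'; then
        echo alpha
    elif printf '%s\n' "$1" | grep -q '^[[:punct:]]$'; then
        echo punct
    else
        echo other
    fi
}

# U+093E DEVANAGARI VOWEL SIGN AA, the matra from the thread above
char_class 'ा'
```

To check a specific locale, run the script under it, e.g. with LC_ALL=en_IE.UTF-8 (the locale must be installed). With the corrected definitions the matra should come back as alpha; punct would point to the kind of locale flaw Vineet ran into. Under the plain C locale the three-byte UTF-8 sequence is not a single character at all, so the answer is other.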

Talkback:165/laycock.html

[ In reference to "GNOME and Red Hat Linux Eleven Years Ago" in LG#165 ]

Jakub Safar [mail at jakubsafar.cz]

Sun, 30 Aug 2009 20:03:34 +0200

Hi,

Thank you for the article about GNOME's history. Looking back made me

happy, nevertheless, I doubt that ... "GNOME development was announced

in August 1977" ;-)

http://linuxgazette.net/165/laycock.html

Jakub

[ Thread continues here (3 messages/3.03kB) ]

Talkback:157/anonymous.html

[ In reference to "Keymap Blues in Ubuntu's Text Console" in LG#157 ]

eric stockman [stockman.eric at gmail.com]

Sat, 08 Aug 2009 22:57:00 +0200

On my Intrepid Ibex (8.10) system, the keymaps are

in /usr/share/rdesktop/keymaps/

[ Thread continues here (2 messages/1.02kB) ]

Talkback:issue75/lg_tips.html#tips/8

[ In reference to "2-Cent Tips" in LG#75 ]

Jim Cox [jim.cox at idt.net]

Mon, 31 Aug 2009 09:37:04 -0400

Another option, though I'm not sure if it fits the OP's constraint of

"plain shell methods", is stat with a custom format:

prompt$ stat -c %y /tmp/teetime.log

2009-08-31 09:30:11.000000000 -0400

I stumbled across this while looking for file sizes in scripts; it seemed a

bit cleaner than using cut on ls output:

prompt$ stat -c %s /tmp/teetime.log

7560497
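For comparison, here is a minimal sketch of both approaches to getting a file size in a script. It assumes GNU coreutils, as in Jim's examples; BSD and macOS stat use different flags, such as stat -f %z.

```shell
#!/bin/sh
# Sketch: two ways to get a file's size in bytes (GNU coreutils assumed).
tmpfile=$(mktemp)
printf 'hello\n' > "$tmpfile"    # writes exactly 6 bytes

# stat prints only the requested field, so no parsing is needed
size_stat=$(stat -c %s "$tmpfile")

# The ls route needs a field split; awk copes with runs of spaces, cut -d' ' does not
size_ls=$(ls -l "$tmpfile" | awk '{print $5}')

echo "stat: $size_stat, ls|awk: $size_ls"

# %y is the last-modification time, as in the example above
stat -c %y "$tmpfile"

rm -f "$tmpfile"
```

The script should report 6 bytes both ways. Splitting ls output works here, but the column layout shifts with options such as -s, whereas stat returns just the value asked for.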

[ Thread continues here (4 messages/2.90kB) ]


2-Cent Tips

1-cent Tip: passing one-line commands to at

Lew Pitcher [lew.pitcher at digitalfreehold.ca]

Mon, 21 Sep 2009 10:37:40 -0400

Hi guys,

On September 21, 2009 01:08:09 Mulyadi Santosa wrote:

[snip]

> Don't worry, you don't need to use GUI automation tool such as dogtail

> for doing so. Simply use gnome-power-cmd.sh command to do that. Note:

> make sure atd daemon is running first. Then do:

> $ at now + 10 minutes

> at> gnome-power-cmd.sh suspend

> at> (press Ctrl-D)

[snip]

This post reminded me that at(1) reads its commands from stdin. Not that I

had forgotten that fact, but that I usually avoid it. I rarely need to enter

multi-line commands into at-scripts, and avoid having to cope with at's stdin

by using the echo command.

For instance, I would have written the above at(1) invocation as

echo gnome-power-cmd.sh suspend | at now + 10 minutes

Trivial, I know. That's why I called it a "1-cent tip".
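Lew's approach extends naturally to jobs of more than one line, since at(1) simply runs whatever arrives on its standard input. The helper below is a hypothetical sketch (schedule_in is not a standard command), assuming a running atd and an at that accepts the "now + N minutes" time format used above.

```shell
#!/bin/sh
# Hypothetical helper in the spirit of the tip above: feed at(1) its job on
# stdin from printf, so nothing has to be typed at the interactive at> prompt.
# Usage: schedule_in MINUTES COMMAND [COMMAND...]
schedule_in() {
    minutes=$1
    shift
    # One command per line; at(1) treats the whole of stdin as the job script.
    printf '%s\n' "$@" | at now + "$minutes" minutes
}

# Examples (not run here):
#   schedule_in 10 'gnome-power-cmd.sh suspend'
#   schedule_in 30 'date >> /tmp/at-test.log' 'uptime >> /tmp/at-test.log'
```

Quoting each command as a separate argument keeps it on its own line of the job.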

--

Lew Pitcher

Master Codewright & JOAT-in-training | Registered Linux User #112576

http://pitcher.digitalfreehold.ca/ | GPG public key available by request

---------- Slackware - Because I know what I'm doing. ------

[ Thread continues here (2 messages/2.71kB) ]

2-cent Tip: Free beginners books

Oscar Laycock [oscar_laycock at yahoo.co.uk]

Mon, 14 Sep 2009 14:06:30 +0000 (GMT)

I recently came across some old notes listing the books I read when starting to use Linux.

At first, I wasn't sure what Free Software was, so I read the following:

- Free as in Freedom, Richard Stallman's Crusade for Free Software, 2002

http://oreilly.com/openbook/freedom/

- Open Sources: Voices from the Open Source Revolution, 1999

http://oreilly.com/catalog/opensources/book/toc.html

I especially liked the chapter 'Freeing the Source - The Story of Mozilla'

- The Cathedral and the Bazaar, Eric S. Raymond

http://www.catb.org/~esr/writings/cathedral-bazaar/

I particularly enjoyed reading the section 'A Brief History of Hackerdom'

- http://www.gnu.org/philosophy/

This has various articles about free software

I also read these Unix books and articles:

- The Art of Unix Programming, Eric S. Raymond

http://www.catb.org/esr/writings/taoup/html/

- The UNIXHATERS Handbook

http://web.mit.edu/~simsong/www/ugh.pdf

This reminded me that Unix is not the only way of doing things.

- Why Pascal is Not My Favorite Programming Language, Brian W. Kernighan, 1981

http://www.cs.virginia.edu/~evans/cs655-S00/readings/bwk-on-pascal.html

I must have read almost every entry in 'The Jargon File' at 'http://www.catb.org/jargon/'.

I always wanted to know about networking, so I read 'The Linux Network

Administrator's Guide' on the Linux Documentation Project's website,

'http://tldp.org/guides.html'. On the same site I found two old books:

'The Linux Programmer's Guide' and 'The Linux Kernel' by David Rusling.

I know a bit of C, which helped. I also went through most of the series

of 'Anatomy of...' articles at

'http://www.ibm.com/developerworks/linux/'. I even browsed through the

'Intel 64 and IA-32 Architectures Software Developer's Manuals' at

'http://www.intel.com/products/processor/manuals/'.

I enjoyed 'The Bastard Operator From Hell' articles. You can google

for them. There are some at 'http://members.iinet.net.au/~bofh/'. Also

try searching for lists of 'You know you've been hacking too long

when...'. I also liked the 'Real Programmers Don't Use Pascal' and 'The

Story of Mel' posts. You can find discussions of them on Wikipedia.

I learnt about Linux distros from reading the Distrowatch Weekly at

'http://distrowatch.com/'.

[ ... ]

[ Thread continues here (1 message/3.52kB) ]

2-cent Tip: Suspending and Hibernating from CLI ala GNOME

Mulyadi Santosa [mulyadi.santosa at gmail.com]

Mon, 21 Sep 2009 12:08:09 +0700

In some cases, you might want to hibernate or suspend your Linux

system solely from the command line, e.g. because you want to schedule it

via "at".

Don't worry, you don't need a GUI automation tool such as dogtail

for doing so. Simply use the gnome-power-cmd.sh command. Note:

make sure the atd daemon is running first. Then do:

$ at now + 10 minutes

at> gnome-power-cmd.sh suspend

at> (press Ctrl-D)

This will suspend to RAM 10 minutes from now. Replace "suspend" with

"hibernate", "shutdown" or "reboot" to perform the respective actions.

--

regards,
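If you schedule these actions often, a small wrapper can guard against typos in the action name. This is a hypothetical sketch (power_in is not an existing command); it accepts only the four actions listed in the tip and assumes, as the tip does, that atd is running and gnome-power-cmd.sh is installed.

```shell
#!/bin/sh
# Hypothetical wrapper: schedule one of the four gnome-power-cmd.sh actions
# named in the tip, refusing anything else before it reaches at(1).
# Usage: power_in MINUTES ACTION
power_in() {
    case $2 in
        suspend|hibernate|shutdown|reboot) ;;
        *) echo "power_in: unknown action '$2'" >&2; return 1 ;;
    esac
    echo "gnome-power-cmd.sh $2" | at now + "$1" minutes
}

# Example (not run here): hibernate in 45 minutes
#   power_in 45 hibernate
```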


News Bytes

By Deividson Luiz Okopnik and Howard Dyckoff


Selected and Edited by Deividson Okopnik

Please submit your News Bytes items in

plain text; other formats may be rejected without reading.

[You have been warned!] A one- or two-paragraph summary plus a URL has a

much higher chance of being published than an entire press release. Submit

items to [email protected]. Deividson can also be reached via twitter.

News in General

Red Hat Introduces DeltaCloud Project


Red Hat engineers have initiated the DeltaCloud project "To enable an

ecosystem of developers, tools, and applications which can interoperate

across the public and private clouds".

Currently, each Infrastructure-as-a-Service (IaaS) cloud has a unique

API that developers and ISVs write to in order to consume the cloud

service. The DeltaCloud effort hopes to create a common, REST-based

API which developers can write to once and manage anywhere and

everywhere. This requires a middleware layer with drivers that map APIs

to all public clouds like EC2, and private virtualized clouds based on

VMWare and Red Hat Enterprise Linux with integrated KVM.

The long-term goal is for the middleware API to be test driven

with a self-service web console, which will be another part of the

DeltaCloud effort.

Among its goals, DeltaCloud aims to give developers:

- a REST API (with any-platform access);

- Support for EC2 and RHEV-M, with VMware ESX and Rackspace coming soon;

- Backward compatibility across versions, for long-term use of

scripts, tools and applications.

One level up, DeltaCloud Portal will provide a web UI in front of the

DeltaCloud API to:

- View image status and stats across clouds, all in one place;

- Migrate instances from one cloud to another;

- Manage images locally and provision them on any cloud.

DeltaCloud API and Portal are free and open source (LGPL, GPL). For

more info, see: http://deltacloud.org.

Red Hat, JBoss Innovators of the Year Announced


At the co-located Red Hat Summit and JBoss World in Chicago in

September, Red Hat gave out its 2009 Innovator of the Year Awards,

which feature major customers and how they put Red Hat and JBoss

products and technologies to use.

In its third year, the Innovation Awards highlighted six category

finalists for 2009:

- Management Excellence:

Red Hat: Whole Foods Market

JBoss: American Family Insurance

- Optimized Systems:

Red Hat: Verizon Communications Inc.

JBoss: Harvard Business Publishing + Rivet Logic

- Superior Alternatives:

Red Hat: Union Bank, N.A.

JBoss: GEICO

- Carved Out Costs:

Red Hat: Discount Tire Company

JBoss: Allianz Australia Ltd

- Extensive Ecosystem:

Red Hat: Hilti Corporation

JBoss: Optaros + Massachusetts Convention Center Authority

- Outstanding Open Source Architecture:

Voting was open to the entire open source and Red Hat community to

select the two overall Innovation Award winners - one Red Hat and one

JBoss winner. This year, the Red Hat Innovator of the Year Award went to

Whole Foods Market. The company was selected for its use of Red Hat

Satellite that resulted in reduced costs, reallocated resources and

the ability of the Whole Foods IT department to focus on strategic

business initiatives.

The JBoss Innovator of the Year went to Ecommerce + Vizuri for

developing Imperia, a data center Web services hosting platform that

can reduce operational costs and increase customer reliability using

Red Hat, JBoss, Hyperic, and EnterpriseDB solutions.

Oracle Re-affirms Sun and Solaris Commitment


Oracle tried to reassure its new-found Sun customers by taking out an

ad on the front page of the Wall Street Journal in mid-September.

The ad had 4 simple bullet points addressed to Sun customers, many of

whom are in the financial sector of the economy, and a short quote

from Larry Ellison saying he looked forward to competing with IBM. The

bullets said that Oracle intends to:

- Spend more money developing SPARC than Sun does now;

- Spend more money developing Solaris than Sun does now;

- Have more than twice as many hardware specialists selling and

servicing SPARC/Solaris systems than Sun does now;

- Dramatically improve Sun's hardware performance by tightly

integrating Oracle software with Sun hardware

Oracle made good on the last point when it announced its improved

Exadata V2 DB machine. (See article below.) The ad did not mention

Java or the MySQL database, both of which Oracle now owns.

Oracle Unveils Hefty Exadata Version 2 - on Sun Hardware


The OLTP Database Machine was unveiled in September by Oracle

Chief Executive Officer Larry Ellison and Sun Executive Vice

President John Fowler. The Exadata Database Machine Version 2, made by

Sun and Oracle, is said to be the world's fastest machine for both data

warehousing and online transaction processing (OLTP).

Built using industry-standard hardware components plus FlashFire

technology from Sun, Oracle Database 11g Release 2 and Oracle Exadata

Storage Server Software Release 11.2, the Sun Oracle Database Machine

V2 is twice as fast as Version 1 for data warehousing. The Sun Oracle

Database Machine goes beyond data warehousing applications with the

addition of the huge Exadata Smart Flash Cache based on Sun FlashFire

technology to deliver extreme performance and scalability for OLTP.

Bottom line: with the Sun Oracle Database Machine, customers can store

more than 10x the amount of data and search data more than 10x faster

without any changes to applications.

"Exadata Version 1 was the world's fastest machine for data warehousing

applications," said Oracle CEO Larry Ellison, with characteristically

limited modesty. "Exadata Version 2 is twice as fast as Exadata V1 for data

warehousing, and it's the only database machine that runs OLTP

applications. Exadata V2 runs virtually all database applications much

faster and less expensively than any other computer in the world." Ellison

compared Exadata 2 to Teradata systems and high-end IBM 595 Power RISC

machines. "IBM has nothing like this," Ellison said, after noting that IBM

only mirrors disks while Exadata 2 is fully fault tolerant with redundant

hardware.

Ellison also noted that the modular Exadata 2 can be installed and

ready in a single day. "It will be working by evening ... just load

and run," Ellison said.

Better Hardware Specs from Sun:

- Sun's FlashFire memory cards enable high-performance OLTP;

- 80% faster CPUs: Intel Xeon 5500 (Nehalem) processors;

- 50% faster disks: 600 GB SAS disks at 6 Gigabits/second;

- 200% faster memory: DDR3 memory;

- 25% more memory: 72 Gigabytes per database server;

- 100% faster networking: 40 Gigabits/second on InfiniBand;

- Raw disk capacity of 100 TB (SAS) or 336 TB (SATA) per rack.

Software Optimization from Oracle:

- Hybrid columnar compression for 10-50 times data compression;

- Scans on compressed data for even faster query execution;

- Storage Indexes to further reduce disk I/Os;

- Offloading of query processing to storage controllers using Smart

Scans;

- Applications running on the Sun Oracle Database Machine achieve up

to 1 Million I/O operations per second with Flash Storage.

Exadata Version 2 is available in four models: full rack (8 database

servers and 14 storage servers), half-rack (4 database servers and 7

storage servers), quarter-rack (2 database servers and 3 storage

servers) and a basic system (1 database server and 1 storage server).

All four Exadata configurations are available immediately.

ARM Announces 2GHz Cortex-A9 Dual Core Processor Designs


Gunning to displace Intel's netbook-dominant "Atom" processor, ARM

announced development of dual Cortex-A9 MPCore hard macro

implementations for the 40nm-G process developed with its partner,

TSMC (Taiwan Semiconductor Manufacturing Co.). Silicon manufacturers

now have a rapid route to silicon for high-performance, low-power

Cortex-A9 processor-based devices. The speed-optimized hard macro

implementation will enable devices to operate at frequencies greater

than 2GHz with a power efficiency up to 8x greater than Intel's

current Atom offerings.

The dual core hard macro implementations use advanced techniques to

increase performance while lowering overall power consumption. Running

at 2 GHz, the Cortex-A9 delivers about 10,000 MIPS with a power demand

of about 2 watts. The low-power design runs at 800 MHz, consumes a

half watt of power and delivers 4000 MIPS.

In many thermally-constrained applications such as set-top boxes,

DTVs, printers and netbooks, energy efficiency is of paramount

importance. The Cortex-A9 can deliver a peak performance of 4000 DMIPS

while consuming less than 250mW per CPU core.
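A quick back-of-the-envelope check shows how ARM's quoted figures fit together. It uses only the numbers above and assumes that the dual-core figures are totals for both cores and that MIPS and DMIPS are being used interchangeably:

```latex
\[
\frac{10\,000\ \text{MIPS}}{2\ \text{W}} = 5\,000\ \text{MIPS/W},
\qquad
\frac{4\,000\ \text{MIPS}}{0.5\ \text{W}} = 8\,000\ \text{MIPS/W}
\]
\[
\frac{10\,000\ \text{MIPS}}{2\,000\ \text{MHz}}
= \frac{4\,000\ \text{MIPS}}{800\ \text{MHz}}
= 5\ \text{MIPS/MHz},
\qquad
2 \times 250\ \text{mW} = 0.5\ \text{W}
\]
```

On these numbers both implementations do the same work per clock cycle, so the low-power design's efficiency edge comes entirely from its lower power draw; and two cores at under 250 mW each stay within the half watt quoted for the 800 MHz design.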

ARM announced it was developing quad-core and eight-core Cortex-A9

processor designs. In separate news, ARM also joined the Linux

Foundation in September.

The Cortex-A9 speed-optimized and power-optimized implementations are

available for license now with delivery in the fourth quarter of 2009.

ARM's 40G physical IP platform is also available at

http://designstart.arm.com.

More information on ARM is available at http://www.arm.com.

Conferences and Events

- Open World Forum 2009

-

October 1 - 2, Paris, France

http://openworldforum.org.

- Silicon Valley CodeCamp 09 - Free

-

October 3 - 5, Foothill College, Los Altos, CA

http://www.SiliconValley-CodeCamp.com/Register.aspx.

- Adobe MAX 2009

-

October 4, Los Angeles, CA

http://max.adobe.com/.

- TV 3.0 Summit & Expo

-

October 6 - 7, Four Seasons, Los Angeles, CA

http://www.imsconferences.com/television/.

- Interop Mumbai

-

October 7 - 9, Bombay Exhibit Center, Mumbai, India

http://www.interop.com/mumbai/.

- RHCE Day - New York City

-

October 8, 9am - 1pm, Rubin Museum, New York, NY

http://www.redhat.com/rhceloopback.

- Oracle OpenWorld 2009

-

October 11 - 15, San Francisco, CA

http://www.oracle.com/openworld/2008/.

- Agile Open California

-

October 15 - 16, Fort Mason, San Francisco, CA

http://www.agileopencalifornia.com/registrationcampaign/promo001/.

- Germany Scrum Gathering 2009

-

October 19 - 21, Hilton Munich City, Munich, Germany

http://www.scrumgathering.org/.

- Web 2.0 Summit 2009

-

October 20 - 22, San Francisco, CA

http://www.web2summit.com/web2009.

- 7th SGI User Group (SGIUG) Conference

-

October 21 - 23, San Antonio, TX

http://www.sgiug.org/SGIUG2009.php.

- 1st Annual Japan Linux Symposium

-

October 21 - 23, Tokyo, Japan

http://events.linuxfoundation.org/events/japan-linux-symposium/.

- CSI 2009

-

October 24 - 30, Washington, DC

http://www.csiannual.com/.

- eComm Fall 2009 Europe

-

October 28 - 30, Westergasfabriek, Amsterdam, the Netherlands

http://www.amiando.com/ecomm2009-europe.html.

- Seattle Secure World 2009

-

October 28 - 29, Meydenbauer Convention Center, Seattle, WA

http://secureworldexpo.com/.

- LISA '09 - 23rd Large Installation System Administration Conference

-

November 1 - 6, Marriott Waterfront Hotel, Baltimore, MD

http://www.usenix.org/lisa09/proga.

- Cloud Computing & Virtualization 2009

-

November 2 - 4, Santa Clara Convention Center, Santa Clara, CA

https://www3.sys-con.com/cloud1109/registernew.cfm.

- iPhone Developer Summit

-

November 2 - 4, Santa Clara, CA

http://www.iphonedevsummit.com.

- VoiceCon-SF 2009

-

November 2 - 5, San Francisco, CA

http://www.voicecon.com/sanfrancisco/.

- 2nd Annual Linux Foundation End User Summit

-

November 9 - 10, Jersey City, NJ

http://events.linuxfoundation.org/events/end-user-summit.

- Interop New York

-

November 16 - 20, New York, NY

http://www.interop.com/newyork/.

- Web 2.0 Expo New York

-

November 16 - 19, New York, NY

http://en.oreilly.com/webexny2008.

- QCon Conference 2009

-

November 18 - 20, Westin Hotel, San Francisco, CA

http://qconsf.com/sf2009/.

- 10th ACM/USENIX International Middleware Conference

-

November 30 - December 4, Urbana Champaign, IL

http://middleware2009.cs.uiuc.edu/.

- Gartner Data Center Conference 2009

December 1 - 4, Caesar's Palace, Las Vegas, NV

http://www.gartner.com/us/datacenter.

Distro News

Linpus Launches Linpus Linux Lite 1.2 at OSIM World

At OSIM World in mid-September, Linpus Technologies, Inc. released a new version of Linpus Linux Lite, its flagship netbook and nettop product, aimed at improving the user's online experience.

This new version of Linpus Linux Lite, through its LiveDesktop application, improves the user experience by gathering important information in one place. LiveDesktop has six modules with thumbnail and text summaries, and an option for large previews. The social networking module gives access to Twitter, Flickr, Myspace and LastFM accounts. There are two modules for email - one for desktop mail and one that delivers mail from Web mail accounts. Additionally, there are modules for recently visited websites and recently opened files, and a module for favorite programs or shortcuts.

LiveDesktop has also been designed so that each module holds an extra 3 or 4 objects, reached by tapping the arrow at the side; this means that you can, for example, quickly see six recent Web sites rather than just three.

Linpus Linux Lite also has a number of key improvements:

- Clutter graphics technology is used throughout to improve the look and feel;

- A network manager with support for 3G, WLAN, and LAN connectivity;

- A power manager with auto-suspend support;

- Dual-booting enhancements and boot time decreased to 3 seconds.

Software and Product News

Oracle Enhances VM Template Builder, Oracle Enterprise Linux JeOS

In August, Oracle announced Oracle VM Template Builder, an open

source graphical utility that makes it easy to use Oracle Enterprise

Linux "Just enough OS" (JeOS) and scripts for developing pre-packaged

virtual machines for Oracle VM.

Oracle VM Template Builder uses "JeOS" to facilitate building an

operating system instance with only the absolute minimum packages

needed for an Oracle VM Template, helping to reduce the disk footprint

by up to 2GB per guest virtual machine and to improve security and

reliability.

Oracle Enterprise Linux JeOS is backed by 24x7, enterprise-class

support and has been tailored specifically to facilitate the creation

of Oracle VM Templates for production enterprise use.

Oracle VM Templates are virtual machines with pre-installed and

pre-configured enterprise software that can be used by ISVs or

end-users to package and distribute their applications. Oracle VM

Templates simplify application deployments, reduce end-user costs and

can make ISV software distribution more profitable.

This gives end-users and ISVs the option of developing their own Oracle VM Templates, either by using JeOS-based scripts directly or via the graphical Oracle VM Template Builder.

The graphical Oracle VM Template Builder utility is based on the core technology of Oracle's small-footprint Oracle Enterprise Linux JeOS operating system image, which is designed specifically for Oracle VM Template creation and build-process integration. Oracle offers this combination of enterprise-class operating system and virtualization software for free download and free distribution. (Oracle VM is based on Xen virtualization technology.)

Oracle VM supports both Oracle and non-Oracle applications. Oracle VM Templates are available today for Oracle's Siebel CRM, Oracle Database, Oracle Enterprise Manager, Oracle Fusion Middleware, and more. See a complete list of templates at:

.

Oracle VM Template Builder is distributed as software packages via the

Oracle Unbreakable Linux Network (ULN) and Oracle Technology Network.

More information on Oracle virtualization offerings is available here:

http://www.oracle.com/virtualization.

More info on Oracle's JeOS Linux is available here:

http://www.oracle.com/technology/software/products/virtualization/vm_jeos.html.

Toshiba Links vPro Technology With TCG Self-Encrypting Drives

Toshiba's Storage Device Division (SDD), a division of Toshiba America Information Systems, Inc., showcased its Trusted Computing Group (TCG) Opal-compliant Self-Encrypting Drives (SEDs) in a demonstration of secure access using Intel's vPro technology at the Intel Developer Forum (IDF) in San Francisco in September.

As corporate governance requirements, government regulations and other

industry mandates for stronger data protection increase, TCG

Opal-compliant SEDs will become an increasingly important component in

the data protection equation. Unlike earlier SED offerings, TCG

Opal-compliant SEDs provide standards-based protocols to facilitate

widespread adoption by disk drive vendors, security management

software providers, system integrators and security-conscious end

users.

The IDF demonstration was the first time SEDs were paired with Intel

vPro technology to securely deliver down-the-wire access

authentication. Management console applications can use Intel vPro

technology to securely authenticate and access TCG Opal-compliant SEDs

on remote PCs, even if the computer is powered off. This capability

allows administrators to remotely perform software updates, security audits and

maintenance tasks in an enterprise domain.

SEDs greatly enhance data security on any PC, especially laptops, which are at greater risk of being lost or stolen. The TCG Opal specification provides a framework for delivering centrally managed encryption solutions. TCG Opal-compliant SEDs offer stronger security through industry standardization of security protocols on the hard drive, along with easier use and a lower cost of ownership due to decreased management complexity. Should a PC be lost or stolen, an IT administrator can take action to remotely disable the PC and instantly erase the encrypted data and applications on the SED through Intel Anti-Theft Technology, which is available on some Intel vPro technology-enabled notebooks. Intel vPro technology enhances the manageability of PCs equipped with Opal-compliant SEDs by providing the mechanism through which IT administrators can authenticate to and remotely access the unattended PC.

Software updates and other administrative tasks can then be performed

from virtually anywhere in the world, using a secure out-of-band

communications channel to access and manage PCs that incorporate TCG

Opal-compliant SEDs.

Toshiba plans to commercially introduce TCG Opal-compliant SEDs in

2010. For more information on Toshiba's line of mobile HDDs, please

visit http://www.toshibastorage.com.

Danube Releases ScrumWorks Pro 4, Enterprise-ready Scrum Tool

Danube Technologies, a leader in project management tools and training for the Scrum methodology, announced in September that ScrumWorks Pro 4, the latest release of what the company describes as the only enterprise-capable tool designed exclusively to reinforce the principles of the Scrum framework, is now available for purchase and upgrade. The new features in this release were driven by customer feedback and address the growing demands of enterprise customers. With release 4, large groups organized to meet complex development needs now have a flexible system for modeling features and milestones across multiple development groups.

"As the popularity of Scrum continues to grow, so has the demand for a

project management tool that is sophisticated enough to scale for the

largest, most complex Scrum development environments," said Victor

Szalvay, Danube CTO and the Product Owner of ScrumWorks Pro. "This

latest release gives users a powerful combination of functionality and

flexibility to effectively model features and milestones across

multiple related development groups."

New to ScrumWorks Pro 4:

- Program Management. Coordinate multiple related development groups with cross-cutting releases and feature goals. Track through to completion with high-level roll-ups of progress in a single cohesive interface.

- High-level Feature Decomposition with Epics. Epics are the stated high-level features or goals associated with any milestone. Define Epics and track progress at the project or program level.

- Release Planning View. The release planner view provides a focused, high-level perspective of a release milestone. User stories are grouped by Epics, and progress is front-and-center with embedded roll-up reports.

- Flexible Modeling of Development Organizations. New Program capabilities in release 4 are flexible enough to accommodate and model complex structures such as shared component projects, where a single project group supplies core components to multiple end-products.

- Enterprise Reporting. Track feature and release milestone progress across multiple products. Forecast completion and compare progress of contributing products in a Program release. Monitor the scope of features at the program level along with progress breakdown.

"ScrumWorks Pro has always been driven by customer feedback," said

Szalvay. "For large-scale Scrum deployments, release 4 will help

coordinate multiple related product backlogs, all of which can work

toward common release dates and feature goals."

ScrumWorks Pro 4 is currently available and can be purchased directly from Danube. Pricing options include an on-site, per-seat subscription license starting at $289 per user per year (less than $25 per user per month), as well as an on-site, per-seat fully paid license for $500. For more information on ScrumWorks Pro, visit http://www.danube.com/scrumworks/pro.

VMware vCenter 4 Drives Business Agility

At VMworld 2009, VMware unveiled a new management model for IT, with

the introduction of the VMware vCenter Product Family, a set of

solutions for policy-based, service-driven management and business

agility. The VMware vCenter Product Family builds on the capabilities of

VMware vSphere 4 to simplify management, reduce operating costs, and

deliver flexible IT services.

Features of vCenter include:

Infrastructure Management:

- VMware vCenter AppSpeed provides service level reporting and

proactive performance management for multi-tier applications,

including virtualized and physical elements;

- VMware vCenter CapacityIQ helps ensure that adequate capacity is available to virtual machines, resource pools, and entire datacenters by modeling the effect of capacity changes;

- VMware vCenter ConfigControl will enable configuration-state compliance in a virtual environment;

- VMware vCenter Site Recovery Manager automates the recovery process

and simplifies management of disaster recovery plans by making

disaster recovery an integrated element of virtual datacenters.

Service Delivery Management:

- VMware vCenter Lifecycle Manager manages the lifecycle of virtual

machines;

- VMware vCenter Chargeback enables accountability across the

business by reporting on costs;

- VMware vCenter Lab Manager simplifies development and QA

environments by giving end users on-demand access to common system

configurations while administrators maintain control over policies.

For more information about virtualization management solutions from VMware and the VMware vCenter Product Family, visit http://www.vmware.com/products/vcenter/.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/dokopnik.jpg)

Deividson was born in União da Vitória, PR, Brazil, on

14/04/1984. He became interested in computing when he was still a kid,

and started to code when he was 12 years old. He is a graduate in

Information Systems and is finishing his specialization in Networks and

Web Development. He codes in several languages, including C/C++/C#, PHP,

Visual Basic, Object Pascal and others.

Deividson works in Porto União's Town Hall as a Computer

Technician, and specializes in Web and Desktop system development, and

Database/Network Maintenance.

Howard Dyckoff is a long-term IT professional with primary experience at

Fortune 100 and 200 firms. Before his IT career, he worked for Aviation

Week and Space Technology magazine and before that used to edit SkyCom, a

newsletter for astronomers and rocketeers. He hails from the Republic of

Brooklyn [and Polytechnic Institute] and now, after several trips to

Himalayan mountain tops, resides in the SF Bay Area with a large book

collection and several pet rocks.

Howard maintains the Technology-Events blog at

blogspot.com from which he contributes the Events listing for Linux

Gazette. Visit the blog to preview some of next month's NewsBytes

Events.

QQ on Linux

By Silas Brown

Here are some pointers that might help learners of Chinese to communicate with their Chinese friends using the QQ protocol, which is popular in China.

Preliminary notes

- If you can get your friends to use something other than QQ, do. Dealing with QQ is messy, much more so than Skype, MSN, etc.

- To sign up for a QQ account you will need assistance from a Chinese person with good eyesight, as QQ's CAPTCHA relies on human recognition of distorted Chinese characters, and they're not beginners' characters.

- libpurple (as used in Pidgin and Adium) has QQ protocol support; you will likely have to install the latest version (not the one in your package manager), and in the account details select the Advanced tab and choose the most recent version of the protocol (QQ's servers no longer allow the 2005 version, for example). However, libpurple is playing catch-up with QQ; every so often QQ does something that's not supported by libpurple, with results such as all your incoming messages being silently dropped, or people sending you screen-shots without realising that you're not receiving them.

QQ for Linux

- Download from im.qq.com/qq/linux (1.0 Beta 1 has links to a .deb for Ubuntu 7.10+, a distribution-independent .tgz, etc.)

- QQ 1.0 beta does not work well with GTK themes. Some

colours will be taken from the theme and others will not; the result is

usually unreadable. Additionally, you cannot simply change the HOME

environment variable to point to a directory that lacks .gtkrc; QQ

will find your real home directory anyway.

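You have to do something like this, from your home directory:

```sh
# Hide .gtkrc, restore it in the background after 5 seconds, and start QQ
mv .gtkrc g0 ; (sleep 5; mv g0 .gtkrc) & qq
```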

- The login window includes the expected fields for username and password,

a remember-password checkbox, and a sign-in button (the left-hand part of

which can be used to change status).

- QQ bypasses the window manager a lot.

If clicking on a QQ window to raise it does not work,

try switching into it via Alt-Tab or equivalent.

- The three tabs at the top of the main window are

"Friends", "Groups", and "Recently chatted with".

The colour button above that lets you choose a colour and picture for the QQ

windows' title bars, and above this is a status button and an area where you

can click to type a status message.

The round button at the bottom is a menu.

The spanner lets you change your account options (friendly name, etc.).

The other buttons are to view recently-downloaded files,

and to find/add more QQ contacts.

- Click to expand a list of contacts (friends, strangers, or other); double-click a contact to start a chat; right-click to bring up a context menu, whose items are Send Message, Send File, Delete Contact, View Profile, Annotate Contact (i.e. set an alias), and whether or not to show these aliases.

- QQ 1.0 beta will crash if you try to type in an annotation that is longer than 16 characters. (The array is not bounds-checked, so the overflow goes unnoticed until the C library detects the memory corruption when free() is called. It's reasonable to assume that Chinese-character aliases won't be longer than 16 characters, but Latin-character aliases might well be.)

- QQ can also forget some of your annotations from time to time; I'm not sure

why. You'd be advised to keep a separate copy.

- In the chat window, press Control+Enter to send

(Enter just adds a new line).

The icons in the chat window are:

Emoticon,

History (toggles display of the most recent part of the conversation you

had before this one),

Send picture (lets you choose a picture file and pastes the picture directly

into the conversation),

Take screenshot (this button darkens the screen, and you then use the mouse to

drag out the rectangle that you want to send, and double-click on it; it

will then appear in the typing box and you can add words around it),

and Send file.

- Voice and video chat are not

available in the 1.0 beta Linux version.
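The .gtkrc workaround above can be wrapped in a small shell function so that it uses $HOME instead of the current directory and refuses to clobber an earlier backup. This is a minimal sketch, not the author's script: the name run_without_gtkrc, the backup file ~/.gtkrc.qq-hidden and the QQ_RESTORE_DELAY variable are hypothetical, and it assumes, as the text does, that QQ only reads ~/.gtkrc while starting up.

```sh
#!/bin/sh
# Sketch: the ".gtkrc" trick as a reusable function.
# Assumptions (as in the text): the GTK theme file is ~/.gtkrc and QQ reads
# it only at startup. The backup name and QQ_RESTORE_DELAY are hypothetical.

run_without_gtkrc() {
    rc="$HOME/.gtkrc"
    backup="$HOME/.gtkrc.qq-hidden"
    if [ -e "$rc" ]; then
        # Refuse to overwrite a backup left behind by an earlier run
        if [ -e "$backup" ]; then
            echo "run_without_gtkrc: $backup already exists" >&2
            return 1
        fi
        mv "$rc" "$backup" || return 1
        # Move the theme back after a delay (5 seconds by default, as in
        # the original one-liner); this runs in the background
        ( sleep "${QQ_RESTORE_DELAY:-5}"; mv "$backup" "$rc" ) &
    fi
    # Run the given command (normally qq) in the foreground
    "$@"
}

# Usage: run_without_gtkrc qq
```

For example, `run_without_gtkrc qq` starts QQ the same way the one-liner does, and `QQ_RESTORE_DELAY=10 run_without_gtkrc qq` gives a slow machine more time before the theme is put back.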

QQ in WINE

QQ 2008 (with voice chat) can be set up in WINE as

follows, but it is unstable.

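```sh
# Setting HOME to the new directory makes WINE create its .wine prefix
# there, keeping QQ separate from your normal ~/.wine
mkdir qq && cd qq &&
wget http://dl_dir.qq.com/qqfile/qq/QQ2008stablehij/QQ2008.exe &&
HOME="$(pwd)" LANG=zh_CN.gb18030 wine QQ2008.exe
```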

Then click the button marked with an (I), click the first radio button, then Next, then Next again, and wait for the installation to finish before clicking Finish. The "what's new" file will appear in Notepad, and QQ will launch; you may now fill in your number and password (and choose to remember the password).

You then need to close QQ and do this:

```sh
# Run from the qq directory, where the .wine prefix was created
killall TXPlatform.exe QQ.exe explorer.exe winedevice.exe rundll32.exe services.exe
rm .wine/drive_c/Program\ Files/Tencent/QQ/TXPlatform.exe
```

Then run QQ with

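```sh
# n,b: prefer native (Windows) DLLs, falling back to WINE's builtin ones;
# an empty value (mmdevapi=) disables that DLL
HOME="$(pwd)" LANG=zh_CN.gb18030 \
WINEDLLOVERRIDES="mfc42,msvcp60,riched20,riched32=n,b;mmdevapi=" \
wine .wine/drive_c/Program\ Files/Tencent/QQ/QQ.exe
```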

Concluding Notes:

(adapted from the Chinese-language thread at

http://www.linuxdiyf.com/bbs/thread-109610-1-1.html,

with some experimentation to improve stability)

Most characters should be displayed if your system has the zh_CN.gb18030 locale and some Chinese TTF

fonts, although some menus may not display.
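Because the WINE setup depends on the zh_CN.gb18030 locale and on Chinese fonts, a quick check before launching can save some head-scratching. This is a hedged sketch rather than part of the original recipe: the function names are hypothetical, it assumes glibc's `locale -a` naming (zh_CN.gb18030), and it assumes fontconfig's `fc-list` is available to look for fonts matching `:lang=zh`.

```sh
#!/bin/sh
# Hypothetical helpers for checking the prerequisites for QQ under WINE.

# locale_listed NAME: succeed if NAME appears as a whole line
# (case-insensitively, as a fixed string) in a locale list read from stdin.
locale_listed() {
    grep -qixF -- "$1"
}

# check_qq_prereqs: report whether the zh_CN.gb18030 locale and at least
# one font covering Chinese appear to be installed. Always returns 0;
# found items go to stdout, missing ones to stderr.
check_qq_prereqs() {
    # glibc's `locale -a` prints normalised names such as zh_CN.gb18030
    if locale -a 2>/dev/null | locale_listed zh_CN.gb18030; then
        echo "locale zh_CN.gb18030: installed"
    else
        echo "locale zh_CN.gb18030: not found" >&2
    fi
    # The fontconfig pattern :lang=zh matches fonts that cover Chinese
    if command -v fc-list >/dev/null 2>&1 &&
        [ -n "$(fc-list :lang=zh 2>/dev/null)" ]; then
        echo "Chinese fonts: found"
    else
        echo "Chinese fonts: none found (or fc-list is missing)" >&2
    fi
    return 0
}
```

Running `check_qq_prereqs` prints one line per prerequisite; how to install a missing locale or font package varies by distribution.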

Voice chat works for me on Ubuntu Hardy's WINE package (version 1.0.0-1ubuntu4~hardy1). Newer WINE packages (1.1.0, 1.1.24) hit an assertion failure about (elem)->type == SND_MIXER_ELEM_SIMPLE in the ALSA code, and if you use winecfg to select OSS audio instead, sound works in only one direction.

Some Chinese people will tell you that there exists an English

version of QQ. However, that was released in 2005 and uses a version

of the protocol that QQ no longer supports. There is a 2008 English

version of their simpler "Tencent Messenger" product, but I was not

able to get that to work with WINE.

With thanks to Jessy Li for some translation help.

Talkback: Discuss this article with The Answer Gang

![[BIO]](../gx/authors/brownss.jpg)

Silas Brown is a legally blind computer scientist based in Cambridge, UK.

He has been using heavily-customised versions of Debian Linux since

1999.

Copyright © 2009, Silas Brown. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 167 of Linux Gazette, October 2009

Away Mission - SecureWorld Expos

By Howard Dyckoff

The SecureWorld Expo series of regional conferences has become an

annual event in many parts of the US. SecureWorld aims to foster

communication between security professionals and technology leaders on

issues of best practices, and to encourage a public/private

partnership with government. These are vest-pocket security

conferences, with many local speakers and a small vendor expo. They

are held in multiple regions, one or two a month, organized by

SecureWorldExpo.com in Portland, OR.

2009's SecureWorld was the fourth one held in the Bay Area; the first SecureWorld Expo was held in Seattle in 2001. Close to 50% of conference attendees (as opposed to expo-only attendees) hold a CISSP certification or a similar professional security credential.

The current list has 9 Expos in the spring and fall, and the organizers will add another region to their calendar later this year:

- Bay Area, September 16 - 17, 2009

- Detroit, September 29 - 30, 2009

- Seattle, October 28 - 29, 2009

- Dallas, November 4 - 5, 2009

- Houston, February 10 - 11, 2010

- Boston, March 23 - 24, 2010

- Washington, D.C., April 13 - 14, 2010

- Atlanta, April 27 - 28, 2010

- Philadelphia, May 12 - 13, 2010

The event is structured into keynotes, presentation sessions and

panels, invitation-only sessions (see pricing section below), and Expo

breaks with afternoon snacks. Here are links to the agendas for

2008 and 2009:

2008: http://secureworldexpo.com/events/conference-agenda.php?id=255

2009: http://secureworldexpo.com/events/conference-agenda.php?id=269

These agendas have a good mix of topics, and some very qualified

speakers. Unfortunately, presentation materials are hard to come by.

The organizers at the conference say that presenters are not required

to post their slides. They also do not commit to a schedule of

posting materials, but say that attendees will eventually get an

e-mail with the location of whatever materials they have. Although I

could find several conference e-mail reminders from 2008, I did not

find an e-mail with info on the 2008 conference slides. That was

disappointing.

I actually wrote to JoAnna Cheshire, the Director of Content at SecureWorld Expos, to get the scoop on this. She explained: "Due to our

privacy policy with our speakers, we do not post any of the archived

slides. If an attendee is interested in a slide presentation, we

encourage them to e-mail us, and we will contact the presenter directly

to obtain the slides for them. It is up to each individual presenter

whether or not to provide the requested slides."

I did like the presentation this year on WiFi vulnerabilities and

hacker/cracker attacks. It was actually titled "What hackers know

that you don't" and presented by Matt Siddhu of Motorola's AirDefense

group (purchased by Motorola in the past year), and I suspect you

could e-mail him for a copy or search the AirDefense web site.

Siddhu gave a good summary of the problems with wireless access

points, especially rogue APs with makeshift directional antennas

having ranges of 100s to 1000s of feet. He also included a good

summary of rogue AP detection techniques, which include traffic injection, SNMP

look-up, and RF fingerprinting. A security professional may need to

use a combination of wired, wireless, and forensic analysis plus the

historical record of traffic when detecting rogue traffic. He

recommended the use of wireless ACLs for both inside and outside

access, including the possibility of jamming specific rogue APs with

TCP resets and also jamming clients who use outside APs.

The closing session was a presentation by FBI Cyber Division Special Agent John Bennett on the work of Federal agencies against cyber-crime. The Federal agencies fighting cyber-crime and terrorism include the FBI, the Secret Service, ICE, and the Inspectors General of various agencies, such as NASA.

Bennett discussed botnets and national security attacks by state actors.

(He was sorry that his slides could not be released without filling out

dozens of forms.) He said that new targets now include smaller financial

institutions and law firms that generally hold large amounts of

confidential information in unsecured and unencrypted states.

Besides the usual attack vectors, such as cross-site scripting (XSS) and SQL injection, Bennett noted an increased use of steganography (hiding data in data), which is being seen more often in VoIP streams as a means of leaking data invisibly.

2009's Bay Area event was significantly smaller than 2008's, both in

attendance and in vendor participation. There were also fewer folks at the end-of-expo prize drawings - the so-called "Dash for

Prizes" - during the afternoon break.

I saw fewer than 200 people at the lunch and AM keynotes. I think

there were closer to 300 at the 2008 event. With 8 vendors and 10

prize items, chances seemed fairly good. So why didn't I win

something?

As in 2008, there were several invitation-only sessions, some by

professional associations or for industry verticals. In fact, the local ISSA and InfraGard chapters were partners and held meetings.

The sessions for "standard" attendees were fewer this year, and didn't

run as late in the day.

The conference was still priced below $300 - in fact, $245 this year and

$195 last year - except for "SecureWorld +" attendees, who had more

in-depth sessions during both days' mornings. "SecureWorld +" attendees

could go to the professional group events, and were charged $695 both

this year and last year. They could also receive 16 CPE credits.

There was a discount code sent by e-mail for $200 off the "plus" rate,

making it $495. In any case, these are bargain conferences,

especially considering the hot lunch buffets included.

As I've said, this is a small conference at a small price, so you have

to accept the lack of certain amenities. To hold down costs, there are

no conference bags, no CDs of presentation slides, and... no

conference WiFi.

I think every other conference I've attended at the Santa Clara

Convention Center has had free WiFi, and that goes back over a decade.

Instead, there was a stack of 2"x4" papers explaining how to sign up for a day pass on the paid network. That was $13.95 this year.

I also asked JoAnna Cheshire about the WiFi issue and got the

following reply: "We find that the number of attendees who ask for

wi-fi at our conference are a very small percentage, and for us to pay

for WiFi to cover the entire conference would impose a significant

cost on us."

I think it would have been better if the daily conference e-mail,

preceding each of the two days of SecureWorld, had mentioned this fact.

I could have printed out a list of nearby WiFi cafes and libraries. As it was, I had to resign myself to not having an e-mail tether, which isn't all that bad. Of course, your situation may differ.

In summary, the conference is useful for security professionals, and

should grow again after the economic downturn. I was a bit

disappointed that there was little conference material on

virtualization security, something I expect to change in the future.

Cloud computing security was discussed in a keynote and a panel.

However, if you attend an upcoming SecureWorld, be prepared to pay for WiFi or get a cellular card from your cellphone provider. I'd also recommend carrying business cards to request copies of any presentations you may want; they won't be archived after the conferences.

Talkback: Discuss this article with The Answer Gang

Copyright © 2009, Howard Dyckoff. Released under the

Open Publication License

unless otherwise noted in the body of the article. Linux Gazette is not

produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 167 of Linux Gazette, October 2009

Away Mission - Upcoming in October

By Howard Dyckoff

Silicon Valley CodeCamp 09 -- Free

Oct 3-5, Foothill College, Los Altos, CA

http://www.SiliconValley-CodeCamp.com/Register.aspx

It's been running for several years and it's always been free.

Lunch is provided by the sponsors, as well.

I confess that I haven't gone to the CodeCamp before (time conflicts),

but have been to the related Product Manager's Camp, and it was worth

the price of admission. I do know people who have gone before, and all

report a positive experience.

The camp uses the now-familiar unconference format: volunteer speakers sign themselves up for sessions, and some slots are left open for spontaneous topics. That makes the agenda a little fluid, but you'd only be risking some weekend time.

Oracle OpenWorld 2009

Oct 11-15, San Francisco, CA

http://www.oracle.com/us/openworld/

The Oracle behemoth keeps acquiring both major application brands and open source companies. Previously, it released an

Oracle-supported version of Red Hat Enterprise Linux. This year, it

swooped up all of Sun Microsystems with its many open source gems,

including Java, Solaris, and MySQL. What will be Oracle's next foray

into the open source universe?

In September, ahead of Oracle OpenWorld, it announced what it called the world's fastest database machine, based on high-end Sun Microsystems x86 hardware. See a summary of its impressive performance in the News Bytes column this month.

So far, Oracle has promised to keep Java free and open, and to also

support MySQL. It now distributes both a Linux distribution and a version

of Unix. It's been a major contributor to the btrfs file system, and

now owns ZFS. What will Oracle do next?

The last Oracle OpenWorld handled logistics well, for an event with over

40K attendees, and offered a lot of content. It also offers a lot of

value, with its Expo pass that includes keynotes, the expo, and the

end-of-conference reception. The main conference is expensive, unless

you can get a customer or partner discount.

See the review of Oracle OpenWorld at

http://linuxgazette.net/159/dyckoff.html.

Agile Open Northern California

Oct 15-16, Fort Mason, San Francisco, CA

http://www.agileopencalifornia.com/registrationcampaign/promo001/

This event is organized by Dayspring, Inc., and is a very low-cost

event at $250: two days of Agile theory and practice with 32 sessions,

lunch, and a networking reception. Like CodeCamp, it is run as an unconference, using the Open Space Technology framework to organize sessions.

Here's a link to the wiki for the 2008 proceedings:

http://agileopencalifornia.com/wiki/index.php?title=Proceedings2008

eComm Fall 2009 Europe

Oct. 28-30, Westergasfabriek, Amsterdam, NL

http://www.amiando.com/ecomm2009-europe.html

This is the first eComm conference in Europe, but the two preceding

eComm events in the US have been stellar.

The mix of speakers goes from major telecoms to the very open, very

alternative developers on the fringe. It's a challenging environment for

both speakers and audience, with short 15 min. sessions and even

shorter 5 min. bullet sessions. There is hardly a dull moment at eComm,

and some conversations are just short of amazing. If you are anywhere

near Amsterdam, consider checking this one out.

Here are links to content from the most recent eComm:

http://www.slideshare.net/eComm2008/slideshows

http://ecomm.blip.tv/posts?view=archive

My earlier review is here: http://linuxgazette.net/159/dyckoff.html

Talkback: Discuss this article with The Answer Gang

Copyright © 2009, Howard Dyckoff. Released under the Open Publication License unless otherwise noted in the body of the article. Linux Gazette is not produced, sponsored, or endorsed by its prior host, SSC, Inc.

Published in Issue 167 of Linux Gazette, October 2009

A Quick-Fire chroot Environment

By Ben Okopnik

As a teacher in the computer field, I often get invited to teach at various companies which are not necessarily prepared for the requirements of a computer class - at least not in the finer details. In this article, I'd like to share one of the software tools I use to handle some of the unexpected (or should I say, the "expected unexpected"?) situations.

I recall visiting a well-known company, several years ago, that lost track of the time the class was supposed to start... as well as the fact that I would need to get through their security. And that the students needed the books that had been ordered for them (these books were actually in the very next room, but the people who delivered them didn't manage to route the information to the people responsible for the class). And their computers were set up with Windows - despite the fact that the class was called "Introduction to Unix" - and the Unix servers they were supposed to connect to were not available (something about firewalls, and the only sysadmin who could configure them being out that day). Oh, and there was no Internet access from there, either.

That, my friends, is what you might call a scorched-earth disaster area. At the very least, that's a bad, bad situation to walk into on a fine Monday morning, with a long week of teaching stretching out into forever ahead of you.

So, what happened? Well, I not only survived that one, I got excellent reviews from all the participants. Yes, I'm fast on my feet. Yes, I carry a lot of what I know in my head instead of being completely dependent on a book. Yes, I can take charge and handle crazy situations while other people are reeling and writhing and fainting in coils [1]. But one of the major factors that saved my bacon that day was that I could quickly and safely set up a bunch of login accounts - i.e., give each of my students an individual "sandbox" to play in, loaded with a bunch of basic Unix/Linux tools - without compromising my system, or letting them damage or destroy it by accident while mangling everything they could lay their hands on (as new students, or anyone experimenting without restraint, will).

I did all that by configuring a chroot environment: i.e., one where the root ('/') of the login system resides somewhere other than my own system's root (e.g., '/var/chroot'). Since, as you're probably aware, there's no way [2] to go above the root directory, the users are effectively "trapped" inside the created environment - and you have a safe "sandbox" that only contains the tools that you put in there. It's a very handy tool - actually, a set of tools - if you suddenly need to do this kind of thing.
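You can see the "no way above root" rule for yourself on any Linux host, where the parent of '/' is '/' itself. The sketch below is only an illustration of that rule; the chroot command in the last comment uses the '/var/chroot' example path from above, must be run as root, and is therefore left commented out.

```shell
#!/bin/bash
# On any Linux system, ".." at the root directory resolves to the root itself.
cd /..
pwd                                   # prints "/"

# -ef is true when both paths refer to the same device and inode.
if [ / -ef /.. ]; then
    echo "/ and /.. are the same directory"
fi

# A chroot applies the same rule to a new root. Run as root, the
# (commented-out) command below would start a shell whose "/" is the
# example directory /var/chroot; ".." from there leads nowhere higher.
# chroot /var/chroot /bin/bash
```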

Thinking about the process

In order to have a usable Linux environment, you need several basic items. For one, you need a minimal directory structure (/etc for the required configuration files; /dev for the devices; /lib and /usr/lib for all the required libraries for the programs that expect to find them there; /bin, /usr/bin, and /usr/sbin for all the required programs; and so on). For another, you need the configuration files, the libraries, and all the external files used by all the programs you want to run - this actually becomes quite involved, especially if you want the man pages that go along with these programs! Then, of course, you also need the devices, the log files, the actual config files (/etc/passwd, /etc/shadow, and so on)... and then you need the user accounts and any files you want to provide for those users.

Whew. This is beginning to sound complicated. Is there an end to this, or does it just keep getting bigger and bigger?

Rejoice! Linux is at hand!

Fortunately, there is indeed an end to it - and it's not that far off. Not much more than I described above, in fact. Also, with a bit of thought (and the amazing tools provided with Linux), much of this can be automated.

Here's the script that I use to build the whole thing. It takes an optional argument specifying how many login accounts to create (it defaults to 20 if no argument is given). I'll intersperse my comments throughout; the running version, along with the other tools that I'll describe below, is available here.

```bash
#!/bin/bash
# Created by Ben Okopnik on Thu Mar 22 22:50:21 CDT 2007

[ "$UID" -eq 0 ] || { echo "You need to run this as root."; exit 1; }
```

You have to be root to do this stuff, of course; many of the files and directories you need to copy are only readable by the root user.

```bash
# If a number has been specified as a command-line arg, use it; otherwise,
# create 20 accounts.
if [ -n "$1" ]
then
    if ! [[ "$1" =~ ^[0-9]+$ ]] || [ "$1" -le 0 -o "$1" -gt 100 ]
    then
        echo "If used, the # of accounts to create must be 1-100. Exiting..."
        exit 1
    fi
fi

# Default to 20 accounts unless some other number has been specified
number=${1:-20}
```

I limited it to a max of 100 users because that's way, way more people than I'd ever teach in one class - plenty of safety margin. You're welcome to modify that number, but you should consider carefully whether doing so will have any unintended adverse consequences.
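If you do change the limit, it may help to pull the check into a function so the bounds live in one place. This is a minimal sketch: 'valid_count' and its optional second argument (the upper limit) are hypothetical names, not part of the original script. It applies the same rules as above (digits only, 1 through the limit); since it uses bash arithmetic, where a leading zero would otherwise mean octal, it adds a '10#' prefix so an argument like '08' is read as decimal 8.

```shell
#!/bin/bash
# Hypothetical helper: succeed if $1 is a whole number from 1 to $2
# (the upper limit defaults to 100, as in the script above).
valid_count() {
    local n=$1 max=${2:-100}
    [[ $n =~ ^[0-9]+$ ]] || return 1     # digits only: rejects "", "-5", "ten"
    (( 10#$n >= 1 && 10#$n <= max ))     # 10# forces base 10, so "08" is not octal
}

for arg in 20 0 100 101 abc 08; do
    if valid_count "$arg"; then
        echo "$arg: ok"
    else
        echo "$arg: rejected"
    fi
done
```

With this in place, the script's test could become something like `valid_count "$1" || { echo "..."; exit 1; }`, and raising the limit means changing a single default.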

```bash
source .chrootrc
```

This is where I get the variables that tell me where to set up the environment, what IP to use for accessing it, and what netmask to use with that IP. (See the "chrootrc" file in the tarball if the method is less than obvious.)
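The tarball's chrootrc isn't reproduced here, so the following is only a guess at its general shape. The script above uses just '$dir'; the names 'ip' and 'netmask' are hypothetical, and the address is a placeholder from the 192.0.2.0/24 documentation range. The '${var:?message}' checks make the shell stop with an error if a setting is missing or empty.

```shell
#!/bin/bash
# Write an example .chrootrc to a scratch file (hypothetical contents:
# only "dir" is used by the script above; "ip" and "netmask" are guesses).
rc=$(mktemp)
cat > "$rc" <<'EOF'
dir=/var/chroot
ip=192.0.2.10
netmask=255.255.255.0
EOF

source "$rc"
rm -f "$rc"

# Abort with a message if any required setting is unset or empty.
: "${dir:?dir is not set in .chrootrc}"
: "${ip:?ip is not set in .chrootrc}"
: "${netmask:?netmask is not set in .chrootrc}"

echo "chroot root: $dir, address: $ip, netmask: $netmask"
```

Note that `source .chrootrc` reads the file from the current directory, so the build script has to be started from the directory that holds it; `source "$(dirname "$0")/.chrootrc"` would be one way to lift that restriction.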

```bash
echo "Creating the basic dir structure"
mkdir -p "$dir"/{bin,dev,etc,lib,proc,tmp,var}
mkdir -p "$dir"/usr/{bin,lib,local,sbin,share}
mkdir -p "$dir"/usr/local/share
mkdir -p "$dir"/var/log
```

That's all the directory structure that's necessary for now. Later, we'll add more stuff as needed - see the section on program installation.
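The brace expressions do most of the work here: the shell expands them before mkdir ever runs. A quick way to check what a line like this will do is to put `echo` in front of it. The sketch below does that, then builds the same skeleton in a scratch directory created with mktemp (standing in for the real chroot root) so it can be tried without root.

```shell
#!/bin/bash
# Scratch directory standing in for the real chroot root (e.g. /var/chroot).
dir=$(mktemp -d)

# Preview: echo shows the exact arguments mkdir receives after brace expansion.
echo mkdir -p "$dir"/usr/{bin,lib,local,sbin,share}

# Build the same skeleton as the script...
mkdir -p "$dir"/{bin,dev,etc,lib,proc,tmp,var}
mkdir -p "$dir"/usr/{bin,lib,local,sbin,share}
mkdir -p "$dir"/usr/local/share
mkdir -p "$dir"/var/log

# ...and list it, relative to the chroot root.
(cd "$dir" && find . -mindepth 1 -type d | sort)
```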

```bash
echo "Creating devices in $dir/dev"
mkdir "$dir/dev/pts"
mknod -m 666 "$dir/dev/null"    c 1 3
mknod -m 666 "$dir/dev/zero"    c 1 5
mknod -m 666 "$dir/dev/full"    c 1 7
mknod -m 666 "$dir/dev/urandom" c 1 9   # same mode as a typical host's /dev/urandom
# Legacy BSD-style pty masters (major 2) and slaves (major 3)
mknod -m 666 "$dir/dev/ptyp0" c 2 0
mknod -m 666 "$dir/dev/ptyp1" c 2 1
mknod -m 666 "$dir/dev/ptyp2" c 2 2
mknod -m 666 "$dir/dev/ptyp3" c 2 3
mknod -m 666 "$dir/dev/ttyp0" c 3 0
mknod -m 666 "$dir/dev/ttyp1" c 3 1
mknod -m 666 "$dir/dev/ttyp2" c 3 2
mknod -m 666 "$dir/dev/ttyp3" c 3 3
mknod -m 666 "$dir/dev/tty"   c 5 0
mknod -m 666 "$dir/dev/ptmx"  c 5 2
```

The required devices, of course...

```bash
# Create the 'lastlog' file
touch "$dir/var/log/lastlog"
```

...as well as the one required logfile (if I recall correctly, you can't log in or SSH in without it).
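The major/minor numbers for the device nodes are hard-coded in the script. One way to double-check them, sketched here with a hypothetical 'mknod_cmd' helper, is to read them from the host's own nodes with 'stat' (which reports them in hex via '%t' and '%T') and print the matching mknod command. It only prints, so it's safe to run as an ordinary user; as root, you could pipe its output to 'sh'. Two caveats: the old BSD-style ptyp*/ttyp* nodes may not exist at all on a modern host, and Unix98 ptys allocated through /dev/ptmx normally also need a devpts filesystem mounted on the chroot's dev/pts.

```shell
#!/bin/bash
# Hypothetical helper: print a mknod command that reproduces a host
# character device inside the chroot, using the host's own mode and
# major/minor numbers. It only prints; nothing is created.
dir=${dir:-/var/chroot}

mknod_cmd() {
    local src=/dev/$1
    [ -c "$src" ] || { echo "# skipping $src: not a character device" >&2; return 1; }
    # -L follows symlinks (/dev/ptmx is a link into /dev/pts on some systems);
    # %t and %T give major and minor in hex, converted here to decimal.
    local major=$((16#$(stat -L -c %t "$src")))
    local minor=$((16#$(stat -L -c %T "$src")))
    local mode
    mode=$(stat -L -c %a "$src")
    echo "mknod -m $mode $dir/dev/$1 c $major $minor"
}

for d in null zero full urandom tty ptmx; do
    mknod_cmd "$d" || continue
done
```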

```bash
echo "Copying the basic toolkit [this takes a while]"
cp -a /etc/ssh "$dir/etc/ssh"
cp -a /bin/{bash,cat,chmod,cp,date,ln,ls,more,mv,rm} "$dir/bin"
cp -a /usr/bin/{clear,env,groups,id,last,perl,perldoc,a2p,pod2man,cpan,splain} "$dir/usr/bin"
cp -a /usr/sbin/sshd "$dir/usr/sbin/"
cp -a /usr/lib/perl* "$dir/usr/lib"
cp -a /usr/lib/man-db "$dir/usr/lib"
cp -a /usr/share/perl* "$dir/usr/share"
cp -a /usr/local/share/perl* "$dir/usr/local/share"
# Relative link, so /bin/sh still resolves once $dir becomes "/" inside the chroot
ln -s bash "$dir/bin/sh"
# A fake 'hostname' that identifies the sandbox
printf '#!/bin/bash\necho chroot%s\n' "$dir" > "$dir/bin/hostname"
chmod +x "$dir/bin/hostname"
```

Here, I manually copy in both the required files and the ones that I use in my Linux intro, shell scripting, and Perl classes. This is a little crude, at least now that I have an automated procedure for copying not only the programs but all their required libs, ini files, man pages, and so on (see the discussion of this below) - but that's no big deal, since I designed this whole thing to be highly redundancy-tolerant. This section is actually a hold-over from the first few versions of the script; it definitely does no harm, and it may (I haven't tested this) be required to keep the rest of the process working. If you want to experiment, feel free to rip out this section and give it a shot.
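For comparison, here is one generic way to copy arbitrary files into the chroot at the same paths they occupy on the host, using GNU cp's '--parents' option, which creates any missing parent directories under the target. 'copy_into_chroot' is a hypothetical name, and this is not the automated tool described later in the article; it's just a sketch, and it copies into a scratch directory so it can be tried without root.

```shell
#!/bin/bash
# Hypothetical helper: copy each named file into $dir at the same path,
# creating parent directories as needed (GNU cp --parents).
copy_into_chroot() {
    local f
    for f in "$@"; do
        if [ ! -e "$f" ]; then
            echo "missing: $f" >&2
            continue
        fi
        cp -a --parents "$f" "$dir"
    done
}

dir=$(mktemp -d)           # scratch dir standing in for /var/chroot
copy_into_chroot /usr/bin/env /no/such/file
(cd "$dir" && find . | sort)
```

Like the manual section, this copies programs only; their shared libraries still have to be brought in separately.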

```bash
echo "Installing the required libs for the toolkit progs"

# Different versions of 'ldd' produce several variations in output.
# Debian's includes the paths to the libraries, so deciding what to copy
# where is easy; if yours does not, you'll have to check if it exists in
# the standard paths and copy it to the appropriate place in the chroot
# tree.
for lib in $(ldd "$dir"/bin/* "$dir"/usr/bin/* "$dir"/usr/sbin/* |
             perl -walne'print $1 if m#(\S*/lib\S+)#' | sort -u)
do
    # Extract the original lib directory
    d=${lib%/*}
    # Cut the leading / from the above
    ld=${d#/}
    # Check if the dir exists in the chroot and create one if not
    [ -d "$dir/$ld" ] || mkdir -p "$dir/$ld"
    # Copy the lib unless the chroot already has it
    [ -e "$dir$lib" ] || cp "$lib" "$dir/$ld"
done
```
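To see what the two parameter expansions in the loop do, here is a sketch that feeds a made-up sample of Debian-style ldd output (the paths are illustrative, not taken from any particular system) through roughly the same extraction, using `grep -o` instead of the perl one-liner, and prints where each library would land in the chroot. 'lib_targets' is a hypothetical name.

```shell
#!/bin/bash
dir=/var/chroot    # example chroot root, as in .chrootrc

# Illustrative Debian-style ldd output; real paths vary by system.
sample='    linux-vdso.so.1 (0x00007ffd5a1f2000)
    libtinfo.so.6 => /lib/x86_64-linux-gnu/libtinfo.so.6 (0x00007f0c2a400000)
    libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f0c2a200000)
    /lib64/ld-linux-x86-64.so.2 (0x00007f0c2a6a0000)'

# Hypothetical helper: read ldd output on stdin and print
# "library -> directory it would be copied into".
lib_targets() {
    local lib d ld
    grep -o '[^[:space:]]*/lib[^[:space:]]*' | sort -u |
    while read -r lib; do
        d=${lib%/*}     # directory part, e.g. /lib/x86_64-linux-gnu
        ld=${d#/}       # same path without the leading slash
        echo "$lib -> $dir/$ld/"
    done
}

lib_targets <<<"$sample"
```

The virtual 'linux-vdso.so.1' entry has no path, so it is skipped, which is what you want: there is no file to copy for it.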